15-463 Computational Photography

Final Project: Feature Matching & Automatically Stitching 360 Panoramas

Hassan Rom

mrom at andrew.cmu.edu

Introduction

The goal of our final project is to extend project 4 to automatically construct 360-degree panoramas from the images in a directory supplied by the user. The program consists of two parts: the first part establishes correspondences between all pairs of input images, and the second part uses those correspondences to construct a panorama.

Building the Graph

The user provides the program with the path to the directory containing the images. For each image in the directory, the program builds a set of feature descriptors. Using these descriptors, the program tries to identify correspondences between all pairs of input images. It represents these correspondences with a graph: each node represents an image, and an edge from node a to node b represents the correspondence from image a to image b.
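The graph-building step can be sketched as follows, assuming each image has already been reduced to a list of fixed-length descriptor vectors. The matching rule (brute-force nearest neighbour with a ratio test), the `ratio` value, and the `min_matches` threshold are illustrative assumptions, not necessarily what our program uses.

```python
import math
from itertools import permutations


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Return (i, j) pairs where desc_b[j] is the nearest neighbour of desc_a[i].

    A match is kept only if the nearest neighbour is clearly closer than the
    second nearest one (ratio test). Brute force: O(len(desc_a) * len(desc_b)).
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_a):
        # Distances from descriptor d to every descriptor of image b, ascending.
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(desc_b))
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches


def build_correspondence_graph(descriptors, min_matches=4, ratio=0.8):
    """Build a directed graph {image: {neighbour: matches}}.

    `descriptors` maps an image name to its list of descriptor vectors.
    An edge a -> b is added when at least `min_matches` descriptors of a
    find a confident match in b.
    """
    graph = {name: {} for name in descriptors}
    for a, b in permutations(descriptors, 2):
        matches = match_descriptors(descriptors[a], descriptors[b], ratio)
        if len(matches) >= min_matches:
            graph[a][b] = matches
    return graph
```

Because the nearest-neighbour search runs separately in each direction, an edge a → b can exist without b → a, which fits the directed edges described above. A practical pipeline would normally also verify the matches geometrically (for example with RANSAC on a homography) before adding an edge.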

The figure above is an example of a correspondence graph. It is a bit hard to see, but there are 5 groups of images. The program succeeded in identifying 2 of the 3 panoramas.

Constructing the Panorama

To construct the panorama, we do a breadth-first traversal of the graph starting from a node specified by the user. As we traverse the graph, we incrementally build the panorama by stitching the panorama built so far with the image corresponding to the current node.
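A minimal sketch of this traversal, assuming the graph is stored as a mapping from each image to an iterable of its neighbours (for example a dictionary of adjacency lists). `load_image` and `stitch` are hypothetical placeholders for the image loader and the two-image stitching module.

```python
from collections import deque


def stitching_order(graph, start):
    """Breadth-first traversal of the correspondence graph from `start`.

    Returns (parent, child) pairs in visiting order: `child` is the next image
    to add to the panorama and `parent` is the already-visited image it was
    matched against. The start node is the reference image and has no parent.
    """
    order = []
    visited = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph[node]:
            if neighbour not in visited:
                visited.add(neighbour)
                order.append((node, neighbour))
                queue.append(neighbour)
    return order


def build_panorama(graph, start, load_image, stitch):
    """Incrementally stitch images in BFS order.

    `load_image(name)` and `stitch(panorama, image)` are hypothetical callables
    supplied by the caller. The parent is not used by this simple version.
    """
    panorama = load_image(start)
    for _parent, child in stitching_order(graph, start):
        panorama = stitch(panorama, load_image(child))
    return panorama
```

Images that are not reachable from the start node, such as those in a different group of the correspondence graph, are never visited, so one traversal yields at most one panorama.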

Results

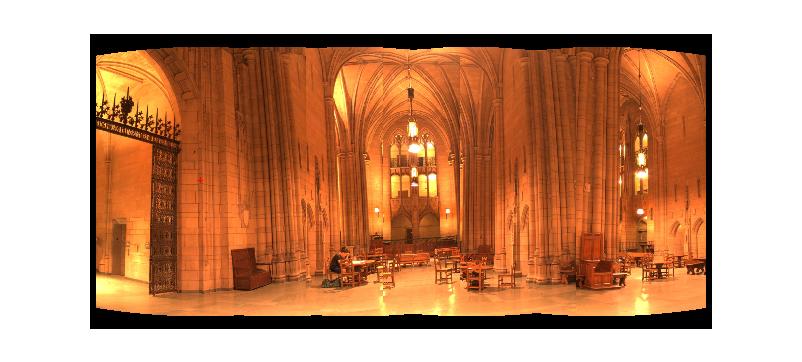

The panorama above is constructed from the same set of pictures as in project 4. If you look closely, there are noticeable seams (discontinuities) between pairs of overlapping images. One possible reason is that the estimated focal length is inaccurate, which makes it harder to align the two images. Another possible reason is that the two images being stitched have different exposure levels. Note that we purposely did not apply any feathering or blending when stitching two images, so that glitches in our program are easy to see.
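One way to check the exposure hypothesis is to compare the average brightness of the two images inside their overlap. A minimal sketch, assuming the overlap pixels have already been sampled at aligned positions and that the exposure difference is a single multiplicative gain (a simplification; this is not part of our program):

```python
def exposure_gain(overlap_ref, overlap_new):
    """Gain that maps the new image's overlap intensities onto the reference's.

    Both arguments are sequences of intensities sampled at the same aligned
    overlap pixels. Uses the ratio of means as an assumed, simple model.
    """
    mean_ref = sum(overlap_ref) / len(overlap_ref)
    mean_new = sum(overlap_new) / len(overlap_new)
    if mean_new == 0:
        raise ValueError("new image is completely dark in the overlap")
    return mean_ref / mean_new


def apply_gain(pixels, gain, max_value=255):
    """Scale intensities by `gain`, clipping at `max_value`."""
    return [min(max_value, p * gain) for p in pixels]
```

A gain far from 1 suggests that exposure, and not only misalignment, contributes to the visible seam.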

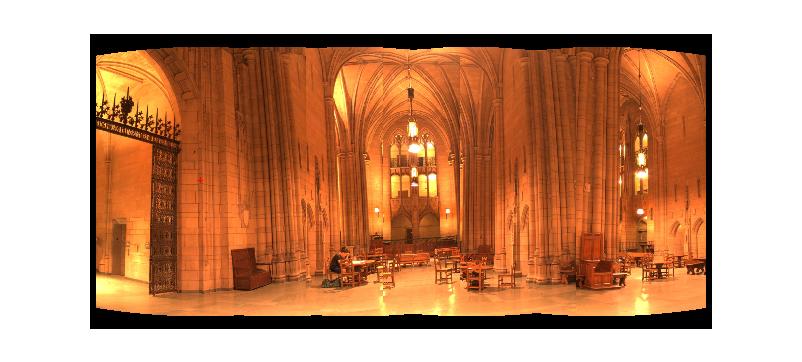

Another example (images taken from Richard Szeliski's Computer Vision class), which did not work as well as the previous panorama.

The program assumes that the focal length is the same for all the images, but in some of the images below you can see that this is not the case.

Conclusion

The project turned out to be harder than we initially thought. Several problems are worth mentioning.

First, it is very hard to get an accurate estimate of the focal length. An accurate estimate is important to minimize the error when recovering the rotation matrix from the homography.
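For a camera that only rotates about its optical center, the homography between two views factors into the focal length and the relative rotation. Assuming square pixels, zero skew, image coordinates measured from the principal point, and a single focal length f shared by both images (the same-focal-length assumption our program makes), the relation is, with ~ denoting equality up to scale:

```latex
K = \begin{bmatrix} f & 0 & 0 \\ 0 & f & 0 \\ 0 & 0 & 1 \end{bmatrix},
\qquad
\tilde{\mathbf{x}}_j \sim H_{ij}\,\tilde{\mathbf{x}}_i,
\qquad
H_{ij} \sim K R_{ij} K^{-1}
\quad\Longleftrightarrow\quad
R_{ij} \sim K^{-1} H_{ij} K
```

If f is wrong, K^{-1} H_ij K is no longer a scaled orthonormal matrix, so the recovered R_ij is not a true rotation, and the error accumulates as more images are chained together.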

Second, the program as a whole is complex, and it clearly consists of several independent modules: for example, one module stitches two images together and another generates feature descriptors. Each of these modules has many possible implementations, each with its own pros and cons. Having a clear understanding of the problem and of the available solutions up front would definitely have helped us get the design of the program right the first time.

Lastly, we made the mistake of going straight to spherical panoramas instead of starting with cylindrical panoramas. Cylindrical panoramas seem much easier to us: aligning two images in cylindrical image space is a translation, whereas in spherical image space, moving an image along the latitude also requires warping it.
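The standard cylindrical mapping shows why alignment becomes a translation. A minimal sketch, assuming image coordinates are measured from the principal point and f is the focal length in pixels: when the camera pans by an angle a about the cylinder's (vertical) axis, every warped point shifts horizontally by f·a while its vertical coordinate stays the same.

```python
import math


def to_cylindrical(x, y, f):
    """Map an image point (x, y), measured from the principal point, onto a
    cylinder of radius f (the focal length in pixels).

    Returns (f * theta, f * h): theta is the angle around the cylinder axis
    and h is the height of the viewing ray on a unit-radius cylinder.
    """
    theta = math.atan2(x, f)
    h = y / math.hypot(x, f)
    return f * theta, f * h
```

The translation property holds only for rotation about the cylinder's axis (panning); tilting the camera still requires a more general warp.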

What worked? What didn't?

For the most part, our program correctly identifies correspondences between the images in a directory, although it would be nice to compare our current implementation against other kinds of feature descriptors, such as SIFT.

Unfortunately, our program is not yet able to construct spherical panoramas. We have also yet to implement any feathering or blending when stitching two images.
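For reference, the simplest form of feathering blends two images with linearly varying weights across their overlap. A minimal sketch on a single scanline, assuming the two rows are already aligned and overlap by a known number of pixels (this is not part of our program):

```python
def feather_row(left, right, overlap):
    """Blend two aligned image rows that overlap by `overlap` pixels.

    `left` ends with the overlap region and `right` starts with it. Across the
    overlap, the weight of `right` grows linearly (strictly between 0 and 1)
    while the weight of `left` shrinks, which hides a hard seam.
    """
    if overlap <= 0:
        return list(left) + list(right)
    if overlap > min(len(left), len(right)):
        raise ValueError("overlap is longer than one of the rows")
    start = len(left) - overlap  # index in `left` where the overlap begins
    blended = []
    for k in range(overlap):
        w = (k + 1) / (overlap + 1)  # weight of the right row at this pixel
        blended.append((1 - w) * left[start + k] + w * right[k])
    return list(left[:start]) + blended + list(right[overlap:])
```

Applied to every row of a horizontal overlap, this turns an abrupt step in brightness into a gradual ramp, although it cannot fix misalignment.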

Future Work

Despite all the hardships, we enjoyed this project. It also raised some questions that we would like to look into further.

How can we use the graph representation to choose which image should be at the

center of the panorama?

Can the graph help us choose an optimal path that minimizes the total error?

Can we use the graph to construct a virtual world similar to QuickTime VR on the fly?

In general, what are the useful properties of the graph?