Playing in the Gradient Domain

Project 2

This project was coded using MatLab, a powerful matrix based, mathematical programming language that allows for fairly flexible image manipulation. In addition it is a powerhouse for crunching through systems of equations, which is really useful for this project.

Each part of the project was fairly separate from the others, all were related to the gradient domain; however, each was a different manipulation of the domain.

TOY PROBLEM

The purpose of this section was to get used to the gradient domain and how to reconstruct images after manipulating the gradients. So the big part of this section was the framework behind the gradient crunching.

If you were to write out what a gradient is you would come up with the following:

pi - pj = pij_gradient

where pj is an adjacent pixel to pi. If we collect all of the gradients from the source image, and we wanted to put them back in to the original image we would just reverse the process and compute the output. So:

vi - vj = pij_gradient

Where vi correlates to pi from before, and vj correlates to pj before. The computationally large thing that needs to happen is that this has to be done for every pixel, in both the y direction and the x direction. A nifty way to compute this is to use two rather large matrices to solve for a number of linear equations. If you think about the above:

vi-vj = pi - pj as (Ai)*vi - (Aj)*vj = pi-pj

you can write it in the following form:

A*v = b

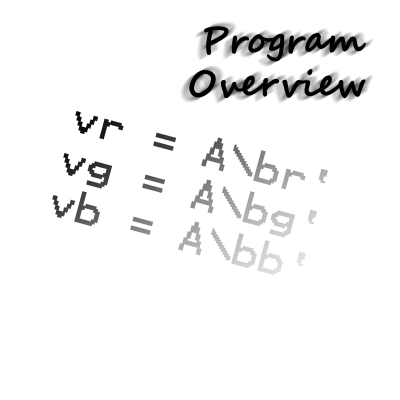

where A is a sparse matrix containing the coefficients of v, and b is a matrix contains the gradients from the source image. Given this notation you can use the nifty v = A\b function to solve for v. Another way to visualize what is going on (this would be an example of a 3x3 picture, you can imagine how large it would be for a full image!):

v1 v2 v3 v4 v5 v6 v7 v8 v9

[1 0 0 -1 0 0 0 0 0] [ -.125]

A : [0 0 0 1 0 0 -1 0 0] * v = b:[.546]

[0 1 0 0 -1 0 0 0 0] [.108]

[0 0 0 0 1 0 0 -1 0] [-.087]

... ...

This would go on for 2*(sizex*sizey) total entries. The b vector correlates to the gradients, the v vector correlates to the output pixel intensity, and the A matrix correlates to how they relate to each other. It should also be noted that you will need one reference point, this will be the one pixel that all of the other pixels will be calculated off of. So you would end up having the following equation added to the end of the above set:

v(1,1) = p(1,1) or A: [1 0 0 0 0 0 0 0 0] and b:[p(1,1)]

Taking the above idea, you can see that every pixel is defined by its relation to the pixels around it. This means that unlike the RGB space, where when you change the intensity of pixel, its neighbor does not change, in the gradient domain the changing of one data point can affect the entire image. Extending this thought also leads to the computational intensity of the operation. For a given image, lets say 200x200 pixels, you will end up solving 80,000 equations for 40,000 unknowns. This comes in to play later in part 3.

Poisson Blending

For part 2 I was implementing something called Poisson blending. This process is much easier described, than when written down as math. The basic concept is that when you have an source image that you want to encode in to a target image, you can make use of the gradient domain to get seamless blending.

The algorithm can be described in this way:

For the area outside of the area you are blending, this is really simple. The output pixel is just the pixel from the target image, nothing fancy, just duplicated on to the output.

For the area you are blending, the border pixels are determined by a nifty equation:

vi = (si-sj)+tj

Where vi is the output pixel, si is the corresponding pixel from the source (or thing you are adding to the target) and sj and tj are the neighboring pixel from the source and the target respectively. It is important to note, that these pixels are known in the overall system of equations.

Then for the rest of the pixels inside of the target area, their gradients are found. Based on these gradients and the pixels from above, an output image is created, similarly to the toy problem (but only over the mask of the source image). This image is added to the target image, and viola! You have a blended image.

Mixed Blending

This is more or less a modification to the above, Poisson blending. Inside of the source area, when finding the gradients to use, you pull from both the source and the target. You take whatever gradient is the largest and use that instead. This very basic change, can have drastic and profound changes to the output. This allows for things like background texture to show up through source images (look at the graffiti examples), this also allows for things with gaps to let the background through (example: the boat tattoo).

Non-Photorealistic Rendering

This problem came down to code optimization. The concept behind the problem is not that hard. I have an option to pick threshold valued based on color channels or total averages, and an input for a threshold value. After the gradients are calculated, a loop is run that compares all of the gradients to the threshold, if they are above the threshold, then they are ignored. However, if the pixel values fall below a certain point then they are instead set to zero. This has the effect of normalizing the image. It makes the colors more consistent, and removes all small amounts of noise (like skin texture, or lighting affects).

All of that is fairly straightforward after you get the toy problem done, and after the Poisson blending. The problem is computational complexity. To render any sort of image takes quite a long while... there is a lot of processing that goes on.

So when I started, rendering a color image that was 308 pixels by 231 pixels took 6 minutes and 9 seconds. Once I finished stream lining the algorithm it took 2 seconds. For a bigger image: 460:350, the old way did not finish in 25 mins however the streamlined version took 6 seconds.

I was able to achieve this through a fairly in depth analysis of how matlab uses the sparse matrix form, and looking at how to vectorize the problem.

So the first choke point was calculating the gradients. For calculating the x and y gradients, I had nested loops that were used to cover all of the pixels. In the center of these loops I was calculating the coefficients of A, and also the gradients for b. After talking this over with Ronit, and Matt (thanks again guys) I was able to use the a gradient kernel and use a convolution to find the gradients. This seemed like it was going to fix all of my problems! But alas, this didn't end up taking that much time.

The true problem was in the sparse matrix. If you were like me and did not previously know what a sparse matrix is: it is a matrix that is primarily zeros. That is it. Matlab uses a linked list data type to store this, so really all it is storing is the value and then how many zeros are between it and the next data point. This considerably cuts down the size of the gigantic images that I was using. So back to the problem: inside of these loops there were two assignments to the sparse matrix. Turns out index access to a sparse matrix takes forever! So now how to solve this... the sparse data form is very useful in the v = A\b operator, matlab has optimized this quite well. And for images larger than 50x50 a normal matrix ends up being far to large to fit in to memory. So a sparse is necessary.

My answer came in the form of how to form sparse matrices. Turns out you can create a sparse matrix with the coordinates and the values of the data points you are going to fill it with. So inside of the loops I was just creating a list of coordinates and values (1 or -1) and then at the end of my loop I was able to construct the sparse matrix from these values. Now you might ask, what about the other data you need to add to A? Well, matlab can cat two sparse matrices together very quickly, so this is not really a problem!

This solution, in code, looks a lot more convoluted, but effectively conserves memory and computation to give a fairly good optimization. The current "choke point" is in the v = A\b calculation, which for a color image has to happen 3 times.

Project Reflection

I certainly learned a ton working on this project. Pulling out the algorithm from the project description was difficult to put it lightly (and the paper was worse). Thanks to Ronit, and her particular care in re-defining what were were attempting, everything seemed to make more sense. Anther point of struggle was the optimization, it was just a lot of work. The previous way of figuring out the gradients was fairly forgiving in terms of size of matrices, as long as things were fairly similar it would work out. But with the optimization you need to be exact in your matrix sizes. This caused a little bit of a headache trying to debug, but was a rather enjoyable outcome.

Future work:

The next thing that I would work on would be making the NPR algorithm visually better. This could possibly be done by adding black lines around the edges (see the Gradient Shop site for examples). Other things would be to add more usability to the blending. At the moment any (effective) resizing or rotation had to be done outside of the program (I used photoshop to get the scales right). I don't think it would be to hard to code a matlab GUI to make this process more user friendly.

Things that I blended well with:

- Coming up with Fun Examples (thanks for letting me use your picture, Mom!)

- Dominating the Optimization for NPR

- After spending so much time working on the Poisson, finally seeing it work

Gradient mismatches:

- Rough time beating my head against the Poisson equation

- Waiting for large images to run (dang... 7 minutes... that is forever!)

- Not enough time to see amazing things (I guess that is a good thing)