QoS for databases

People

|

|

Motivation

There is a strong economic motivation for online retailers (e.g. Amazon) to

provide lower response times to their more important, ``big-spending'' clients,

so as not to lose these clients. It is likewise financially desirable to

offer certain clients Service Level Agreements (SLAs) to guarantee them certain

QoS goals, such as high throughput or low mean response time.

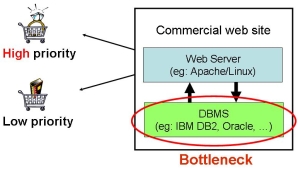

Because the dominant time associated with serving a dynamic web request is the time

spent at the backend database/storage system (see Figure 1),

it is important that the QoS be applied to the backend database/storage system to

control the time spent there.

Unfortunately, most commercial database

management systems (DBMS), which lie at the heart of e-commerce applications,

do not provide effective transaction prioritization for transactional,

web-driven workloads.

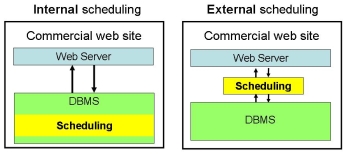

Figure 1: Internal versus external database scheduling.

In this project we investigate two different approaches for achieving service differentiation

for database transactions, internal scheduling (see Figure 1 (left)) and external scheduling (see Figure 1 (right)).

Results

Database internal scheduling for effective transaction prioritization

Effective transaction prioritization depends on two questions: what is the bottleneck resource in

the DBMS where priority scheduling should be applied; and what is the right scheduling policy for this resource?

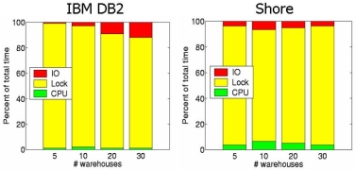

In our work in~\cite{McWherter04}, we perform a detailed bottleneck analysis of resource usage

for several common DBMS under transactional workloads and

a range of configurations. We also implement and evaluate the performance of several

standard prioritization policies.

We find that, while most commercial tools focus on CPU scheduling,

the most significant improvements for high-priority transactions

are often achieved by lock scheduling. The reason is, that for

for the common case of TPC-C like workloads running on DBMS with two-phase locking,

the critical bottleneck resource is lock waiting. Figure 2 below illustrates this finding with two sample results from our

bottleneck analysis for TPC-C running on IBM DB2 (left) and Shore (right) and varying database size.

Figure 2: Resource breakdown for one sample TPC-C setup on

IBM DB2 (left) and Shore (right).

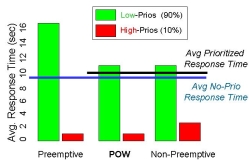

We also propose a novel solution to a key question in lock scheduling: whether to allow preemption or not.

While preemptive policies allow high-priority transactions to

reduce lock waiting time by killing other lock holders, rollbacks and re-executions may be too

costly. Non-preemptive policies avoid these overheads, but high-priority

transactions may wait for low-priority transactions to complete

before making progress. We provide a detailed statistical analysis of

locking in transactional workloads under several lock prioritization

policies. Based on the results, we propose and implement a new policy,

POW, that provides all the benefits of preemptive prioritization without

its penalties.

Figure 3: The performance of POW for low priority transactions (left)

and high priority transactions (right), compared with other commonly used policies.

POW provides significantly better priority differentiation than other policies.

Several other researchers have followed up on this work.

Some apply similar ideas to web requests [Zhou06],

or propose to improve upon our solution through the use of query progress indicators~\cite{Luo06}

or by making scheduling adaptive to workload changes [Zhang04,Zhang05]. Others

have provided lock scheduling policies with theoretical guarantees [Guerraoui05]

or I/O scheduling that can be used in combination with our priority mechanisms [Hall].

Providing QoS from outside the database system

The goal of this proposal is to design a new approach for service differentiation

in backend servers which is portable across different DBMS, storage devices, caches, and other

backend servers.

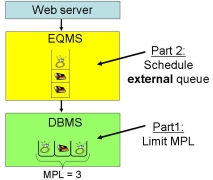

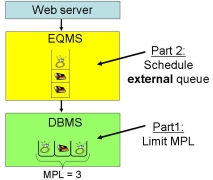

We develop an External QoS Management System (EQMS),

that sits between the web server (or the application server) and the backend server

(see Figure 4).

The key idea of the EQMS is to maintain

an upper limit on the number of transactions executing

simultaneously within the DBMS (called the Multi-Programming Limit, or

``MPL''), as shown in Figure 2. If a transaction

arrives and finds MPL number of transactions already in the DBMS, the arriving

transaction is held back in an external queue (no transactions are

dropped). QoS targets are then achieved by scheduling the external queue.

Response time for a transaction includes both waiting time

in the external queue (queuing time) and time spent within the DBMS

(execution time).

Figure 4: The EQMS sits between the web server and the database backend. The diamonds represent high priority ("important") clients with high value purchases.

The books represent low priority clients with low value purchases.

This approach of encapsulating QoS functionality in the EQMS outside the database has

many advantages: it doesn't require any changes to the DBMS and hence is portable across DBMS

and is marketable as an independent black-box device.

The approach is also effective across different workloads and changing workloads, since the

scheduling isn't tied to a particular bottleneck resource inside the DBMS.

Building the EQMS involves two key challenges.

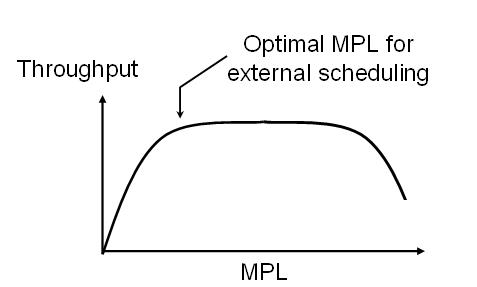

- Choosing the MPL: The performance of the EQMS depends critically on the particular multiprogramming

limit used (the MPL), i.e. the number of transactions allowed into

the database. If the MPL is too low, throughput will suffer, since

not all DBMS resources will be utilized. On the other hand, if the

MPL is too high, there is insufficient control on scheduling. The

question of how to adjust the MPL to achieve both goals simultaneously

is an open problem, not just for databases but in system design in

general.

Figure 5: The optimal MPL for external scheduling is high

enough to ensure good throughput and low enough to provide scheduling control.

In our work, we first investigate the question of whether there

exists an MPL that is low

enough to provide good service differentiation, while not harming throughput or

overall mean response time.

We find, using benchmark driven workloads, that such an MPL does in

fact exist.

Then we develop a feedback based controller, augmented by queueing theoretic

models for automatically adjusting the MPL.

We show, across a large variety of transactional workloads, that using this MPL we can lower

mean response times for high-priority

requests by an order of magnitude with only small penalty to low-priority requests.

These results are comparable to the best results achieved by scheduling internal DBMS

resources.

The results have been verified across 17 different workloads (including I/O-bound,

CPU bound and lock-bound workloads), database sizes ranging from 300MB to 2GB, and three different

database systems.

- Scheduling to achieve QoS targets:

A second key component of the EQMS is the scheduler which decides

on the order in which transactions are dispatched to the DBMS such that

the associated QoS targets are met.

The scheduler allows for an arbitrary number of different QoS classes,

where the classes can differ in their arrival rates, transaction types, etc.

Each class is associated with one or more QoS targets for (per-class) mean

response time, percentiles of response time, variability in response

time, best effort, or any combination thereof.

The core idea behind the scheduler is that,

by maintaining a low MPL, we obtain a better estimate of a

transaction's execution time within the DBMS, and hence we are able

to maintain accurate estimates of the per-class mean execution times.

This in turn gives us an upper bound on the queueing time for a transaction, which

can be used by the scheduler in order to ensure that QoS targets are

met. The actual algorithms that we use are more complex and rely on

queueing analysis in order to meet a more diverse set of QoS targets, and behave

in a self-adaptive manner.

In our experimental evaluation, we find that per-class mean response time targets

are typically met with an error of less than 5%. Furthermore, the percentile

targets are usually met with an error of less than 1%. We also find that the

EQMS is able to reduce the squared coefficient of variation (variability) for

each class from 2.3 to 0.11 for TPC-C type workloads, and from 15 to 0.19 for TPC-W

type workloads. Lastly, we find that the EQMS adapts quickly to changes in load

and works equally well across all workloads studied.

The results have been verified across 4 different workloads (including I/O-bound,

CPU bound and lock-bound workloads), database sizes ranging from 300MB to 2GB, and 2 different

database systems.

Impact

Several other researchers have followed up on our internal scheduling work.

Some apply similar ideas to web requests [Zhou06],

or propose to improve upon our solution through the use of query progress indicators [Luo06]

or by making scheduling adaptive to workload changes [Zhang04,Zhang05]. Others

have provided lock scheduling policies with theoretical guarantees [Guerraoui05]

or I/O scheduling that can be used in combination with our priority mechanisms [Hall].

The work on external scheduling forms the bases for a patent being filed by IBM.

Publications

The work on this project has resulted in four conference publications.

A journal version describing our work is in preparation.

- How to determine a good multi-programming level for external scheduling.

Bianca Schroeder, Mor Harchol-Balter, Arun Iyengar, Erich Nahum and Adam Wierman.

Appeared in the 22nd International Conference on Data Engineering (ICDE 2006).

Abstract / PDF

- Achieving class-based QoS for transactional workloads.

Bianca Schroeder, Mor Harchol-Balter, Arun Iyengar, Erich Nahum.

A short (3-page) version appeared as a poster paper in the 22nd International Conference on Data Engineering (ICDE 2006).

Abstract / PDF. A full version of the paper can be found here (PDF).

- Improving Preemptive Prioritization via Statistical Characterization of OLTP Locking.

David McWherter, Bianca Schroeder, Anastassia Ailamaki, and Mor Harchol-Balter.

Appeared in the 21nd International Conference on Data Engineering (ICDE 2005).

Abstract / PDF

- Priority Mechanisms for OLTP and Transactional Web Applications.

David McWherter, Bianca Schroeder, Anastassia Ailamaki, and Mor Harchol-Balter.

Appeared in the 20nd International Conference on Data Engineering (ICDE 2004).

Abstract / PDF

Related projects

We have also worked on a number of other projects that are closely related to this project:

Acknowledgements

We thank the TTC for funding this project, in cooperation with the IBM corporation. We are also

grateful for the continued support of other companies, including Network Appliance, Seagate Technology, Vercell Laboratories,

and Laurel Networks.

For questions and comments regarding this page, please contact Bianca Schroeder.

|