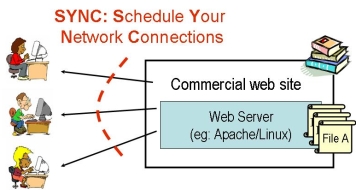

Scheduling in web servers: The SYNC project

MotivationA Web site's success depends on their ability to provide fast service to their users. Even short periods of slowdown or service interruption can not only send a customer to the `` just a click away'' competitor, but also reflect negatively on the corporate image as a whole. The goal of this project is to improve the user-perceived performance of static requests at a busy Web site. The approach we take does not require buying more hardware or any other costly system upgrades. The main idea is to schedule the existing resources better among requests so as to improve overall mean performance. Traditional Web servers simply time-share among all incoming requests, although scheduling theory suggests that scheduling jobs in the order of Shortest-Remaining-Processing-Time (SRPT) is optimal. The reason that SRPT is not used in practice is fear of starvation: when analyzed under the M/M/1 queue, SRPT significantly penalizes long jobs.

Results

How favoring short Web requests can improve performance of allAs part of this project we present an efficient kernel-level implementation of SRPT for static Web workloads. We show in an extensive experimental evaluation that SRPT-based scheduling can greatly reduce mean response times at a busy Web server.

Figure 1 above shows the mean response time of our new SRPT web server compared to a standard FAIR web server under a trace-based workload as a function of system load. We find that for high load, the SRPT server improves mean response times by nearly a factor of ten compared to a standard FAIR server. Most importantly, we find that these improvements in mean response time do not come at the expense of hurting requests for large files . Figure 2 shows the response time under the SRPT and the FAIR server as a function of the request size (percentile of the request size distribution). We find that even the requests for the largest file in the trace-based workload are not penalized. We argue that this is because Web file sizes exhibit heavy-tails, instead of following an exponential distribution as assumed in the traditional M/M/1 model.

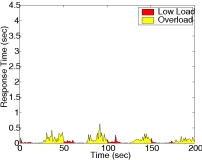

Improving system performance under transient overload conditionsWeb traffic is known to be bursty and hard to predict, and hence even well-provisioned servers can experience transient periods of overload. During overload the number of connections at a server grows rapidly, leading to long response times. We propose a solution for improving Web server performance under overload based on SRPT (Shortest-Remaining-Processing-Time) scheduling. The key idea is that a traditional server, by time-sharing among all requests, is slowing down every request in the system, causing a large connection buildup. On the other hand, SRPT-based scheduling minimizes the number of connections at a server by always working on the connection with the smallest amount of work left. We show in extensive experiments that SRPT-based scheduling significantly improves both server stability and client experience during transient overload conditions.

For example, Figure 3 above shows the response times under the standard FAIR server (left) and the SRPT server (right) for a sample workload exhibiting transient periods of overload (areas colored yellow in the graph). While the response times under the FAIR server grow dramatically during the transient overload periods, the response times under the SRPT server remain relatively low. Moreover, we show that due to the heavy-tailed property of Web workloads even requests for large files are not significantly penalized under SRPT.

Impact

The main paper from this work [TOCS03] and its tech-report have been cited more than 100 times

and have motivated a number of follow-up papers, including

generalizations and optimizations [Deb05, Gong04 ,Murta03,Rawat03,Yang04,Zhou06] and work on new policies [Friedman03, Rai03],

all favoring short jobs.

It has also inspired a new area of theoretical research on fairness of scheduling

policies [Bansal00, Raz04, Wierman03, Wierman05a, Wierman05b].

The work on scheduling under overload has won the best paper award at the International Teletraffic Congress in

2003 and resulted in a recent journal paper [TOIT06].

Publications

The work on this project has resulted in several conference and journal publications, which are listed below.

Related projects

We have also worked on a number of other projects that are closely related to this project:

AcknowledgementsWe thank the TTC for funding this project, in cooperation with the IBM corporation. We are also grateful for the continued support of other companies, including Network Appliance, Seagate Technology, Vercell Laboratories, and Laurel Networks. For questions and comments regarding this page, please contact Bianca Schroeder.

|