Description

Extracting a rich representation of an environment from visual sensor readings can benefit many tasks in robotics, e.g., path planning, mapping, and object manipulation. While important progress has been made, it remains a difficult problem to effectively parse entire scenes, i.e., to recognize semantic objects, man-made structures, and landforms. This process requires not only recognizing individual entities but also understanding the contextual relations among them.

The prevalent approach to encoding such relationships is to use a joint probabilistic or energy-based model, which lets one write down these interactions naturally. Unfortunately, exact inference over these expressive models is often intractable, so in practice the solutions can only be approximated. While there are many sophisticated approximate inference techniques to choose from, the combination of learning and approximate inference for these expressive models is still poorly understood in theory and limited in practice. Furthermore, running approximate inference on a learned model often leads to suboptimal predictions because of the inherent approximations.

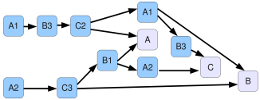

As we ultimately care about predicting the correct labeling of a scene, and not necessarily about learning a joint model of the data, this work instead views approximate inference as a modular procedure that is trained directly to produce a correct labeling of the scene. Inspired by early hierarchical models for scene parsing in the computer vision literature, the proposed inference procedure is structured to incorporate both feature descriptors and contextual cues computed at multiple resolutions within the scene. We demonstrate that this inference machine framework for parsing scenes via iterated predictions offers the best of both worlds: state-of-the-art classification accuracy and computational efficiency when processing images and/or unorganized 3-D point clouds.
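The idea of "iterated predictions" can be illustrated with a toy sketch. It is not the authors' implementation. It assumes a 1-D chain of nodes in place of the multi-resolution scene hierarchy, a small logistic regression in place of the actual predictor, and a single scalar feature per node. All function names (`fit_logistic`, `neighbor_context`, `train_stages`, `run_stages`) are hypothetical. Each stage re-predicts every node's label from its own feature plus the previous stage's predictions at its neighbors, so context is passed along by a sequence of trained predictors instead of by inference in a joint model.

```python
import math
import random

def sigmoid(z):
    # Numerically safe logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(X, y, epochs=200, lr=0.5):
    """Fit logistic regression by batch gradient descent; bias is the last weight."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]) - yi
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w

def predict(w, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + w[-1]) for xi in X]

def neighbor_context(probs):
    """Mean previous-stage probability over each node's chain neighbors."""
    n = len(probs)
    ctx = []
    for i in range(n):
        nbrs = [probs[j] for j in (i - 1, i + 1) if 0 <= j < n]
        ctx.append(sum(nbrs) / len(nbrs) if nbrs else probs[i])
    return ctx

def build_inputs(features, probs):
    if probs is None:
        return [[f] for f in features]  # first stage: local feature only
    return [[f, c] for f, c in zip(features, neighbor_context(probs))]

def train_stages(features, labels, num_stages=3):
    """Train one predictor per stage; each stage sees the previous stage's output."""
    stages, probs = [], None
    for _ in range(num_stages):
        X = build_inputs(features, probs)
        w = fit_logistic(X, labels)
        stages.append(w)
        # Simplification: predictions on the training data itself are reused here.
        # A stacking-style setup would use held-out predictions instead.
        probs = predict(w, X)
    return stages

def run_stages(stages, features):
    """Apply the trained stages in sequence to label a new chain."""
    probs = None
    for w in stages:
        probs = predict(w, build_inputs(features, probs))
    return probs

# Toy data: piecewise-constant labels observed through a noisy scalar feature.
random.seed(0)
labels = [0] * 20 + [1] * 20
features = [y + random.gauss(0.0, 0.8) for y in labels]
stages = train_stages(features, labels)
probs = run_stages(stages, features)
```

Inference costs one forward pass per stage, so it stays cheap compared with running an approximate inference algorithm to convergence.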

Updated Results (As of April 26, 2013)

The performance on the Stanford Background dataset is:

- Overall pixel accuracy: 81.6%

- Average per-class accuracy: 71.8%

- Using simple F-H segmentation to create a 4-level hierarchy

- Iterating up and down the hierarchy, as in the ICRA 2011 paper listed below

- Using feature descriptors provided by Ladicky 2011

- Using vector quantization described by Coates 2011

- Using multi-output regression trees (instead of 1 per class) during boosting

- Segmentations: 0.095

- Features: 0.462

- Inference: 0.037
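The two accuracy figures reported above measure different things, which is why they can differ by several points. The page does not spell out the definitions, so the sketch below assumes the common ones: overall pixel accuracy is the fraction of all pixels labeled correctly, and average per-class accuracy is the mean over classes of each class's own accuracy (recall). The confusion matrix is made-up illustrative data, not results from the dataset.

```python
def overall_accuracy(confusion):
    """Fraction of all pixels labeled correctly (rows = true class, cols = predicted)."""
    correct = sum(confusion[k][k] for k in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total

def mean_per_class_accuracy(confusion):
    """Average of per-class recall, skipping classes with no ground-truth pixels."""
    recalls = []
    for k, row in enumerate(confusion):
        n = sum(row)
        if n > 0:
            recalls.append(row[k] / n)
    return sum(recalls) / len(recalls)

# Hypothetical two-class example: a frequent class and a rare one.
confusion = [
    [90, 10],  # true class 0: 90 correct, 10 mislabeled as class 1
    [5, 5],    # true class 1: 5 mislabeled as class 0, 5 correct
]
overall = overall_accuracy(confusion)           # 95 / 110
per_class = mean_per_class_accuracy(confusion)  # (0.9 + 0.5) / 2
```

Because frequent classes dominate the pixel count, overall accuracy is typically the higher of the two numbers whenever rare classes are harder to label.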

Datasets

- Stanford Background Dataset

- MSRC Object Class Recognition

- Geometric Surface Context

- CMU/VMR Oakland 3-D Scenes

- CMU/VMR Urban Image+Laser Dataset (1.1 GB)

Code

The original, naive MATLAB implementation of the ECCV 2010 paper: [code]

Presentations

- ECCV 2010 talk: [pptx] [pdf]

- ICRA 2011 talk: [pptx] [pdf]

- CVPR 2011 poster: [pdf]

- ECCV 2012 poster: [pdf]

- ECCV 2014 talk and poster: [link]

References

- Stacked Hierarchical Labeling. ECCV 2010. [pdf] [project page] [bibtex] See the project page for updated results!

- Learning Message-Passing Inference Machines for Structured Prediction. CVPR 2011. [pdf] [project page] [bibtex]

- 3-D Scene Analysis via Sequenced Predictions over Points and Regions. ICRA 2011. [pdf] [project page] [bibtex]

- Co-inference for Multi-modal Scene Analysis. ECCV 2012. [pdf] [project page] [bibtex]

- Pose Machines: Articulated Pose Estimation via Inference Machines. J. A. Bagnell, Y. Sheikh. ECCV 2014. [pdf] [project page] [bibtex]

- Inference Machines: Parsing Scenes via Iterated Predictions. PhD Thesis, Carnegie Mellon University, 2013. [pdf] [bibtex]

Funding

- QinetiQ North America Robotics Fellowship

- ONR MURI grant N00014-09-1-1052, Reasoning in Reduced Information Spaces

- Collaborative Technology Alliance Program, Cooperative Agreement W911NF-10-2-0016