Gradient Networks

Explicit Shape Matching Without Extracting Edges

People

Martial Hebert

Description

Recognizing specific object instances in images of natural scenes is crucial for many applications ranging from robotic manipulation to visual image search and augmented reality. In particular, many objects in daily living environments lack texture and are primarily defined by their shape. Even though many shape matching approaches work well when objects are unoccluded, their performance decreases rapidly in natural scenes where occlusions are common. This sensitivity to occlusions arises because these methods either depend heavily on repeatable contour extraction or consider information only very locally. Other methods such as HOG consider only coarse gradient statistics and lose many of the fine-grained details needed for instance detection. The main contribution of this paper is to bypass the brittle contour extraction stage and increase the robustness of explicit shape matching under occlusions by capturing contour connectivity directly on low-level image gradients. For each image pixel, our algorithm estimates the probability that it matches a template shape. The problem is formulated as traversing paths in a gradient network and is inspired by the edge extraction method of GradientShop (Bhat et al. 2010).

|

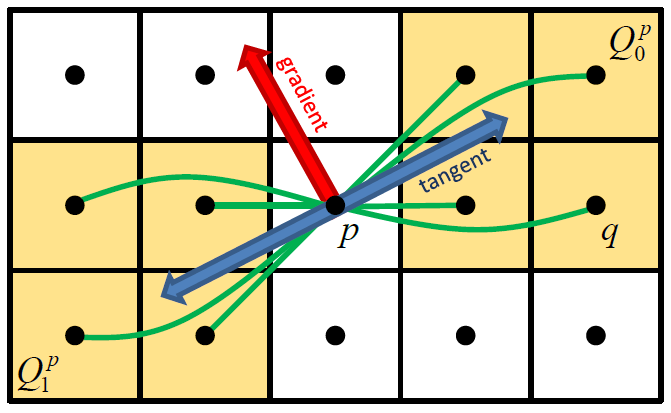

| Figure 1. Gradient network. Each node is a pixel in the image. We create a network (green) by connecting each pixel p with its 8 neighbors in the direction of the local tangent. |
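The network construction described for Figure 1 can be sketched roughly as follows. This is a simplified illustration rather than the authors' implementation: it assumes the local tangent is the image gradient rotated by 90 degrees, snaps that direction to the nearest of the 8 neighbor offsets, and links each pixel to the neighbor on either side along the tangent. The function names `tangent_neighbors` and `build_network` and the `min_magnitude` threshold are hypothetical.

```python
import numpy as np

# 8-neighbor offsets as (dy, dx), indexed by tangent angle in 45-degree steps.
OFFSETS = np.array([(0, 1), (1, 1), (1, 0), (1, -1),
                    (0, -1), (-1, -1), (-1, 0), (-1, 1)])


def tangent_neighbors(image):
    """Return, per pixel, the 8-neighbor offset nearest the local tangent,
    together with the gradient magnitude (hypothetical helper)."""
    gy, gx = np.gradient(image.astype(float))
    # Assumption: the tangent is the gradient rotated by 90 degrees.
    tx, ty = -gy, gx
    angle = np.arctan2(ty, tx)
    idx = np.round(angle / (np.pi / 4)).astype(int) % 8
    # Offsets at pixels with zero gradient are arbitrary; mask by magnitude.
    return OFFSETS[idx], np.hypot(gx, gy)


def build_network(image, min_magnitude=1e-6):
    """List directed links (p, q) between pixels along the local tangent."""
    offsets, mag = tangent_neighbors(image)
    h, w = mag.shape
    edges = []
    for y, x in zip(*np.nonzero(mag > min_magnitude)):
        dy, dx = offsets[y, x]
        for sign in (1, -1):  # follow the tangent in both directions
            qy, qx = y + sign * dy, x + sign * dx
            if 0 <= qy < h and 0 <= qx < w:
                edges.append(((int(y), int(x)), (int(qy), int(qx))))
    return edges


if __name__ == "__main__":
    # A vertical step edge: links should run up and down along the edge.
    img = np.zeros((6, 6))
    img[:, 3:] = 1.0
    for p, q in build_network(img)[:4]:
        print(p, "->", q)
```

On a vertical step edge, every link produced by this sketch stays within a single column, which is the contour-following connectivity the figure illustrates.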

|

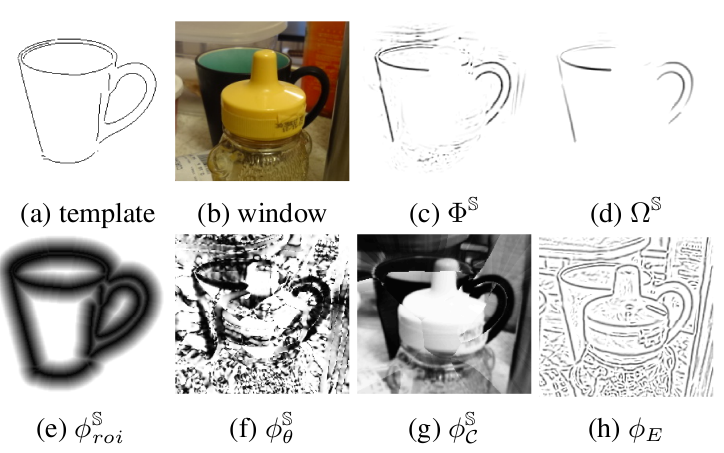

| Figure 2. Illustration of algorithm. Given (a) the template and (b) the image window, we compute (c) the local shape potential and apply the message passing algorithm to produce (d) the shape similarity. The local shape potential is composed of the (e) region of interest, (f) orientation, (g) color, and (h) edge potentials. |

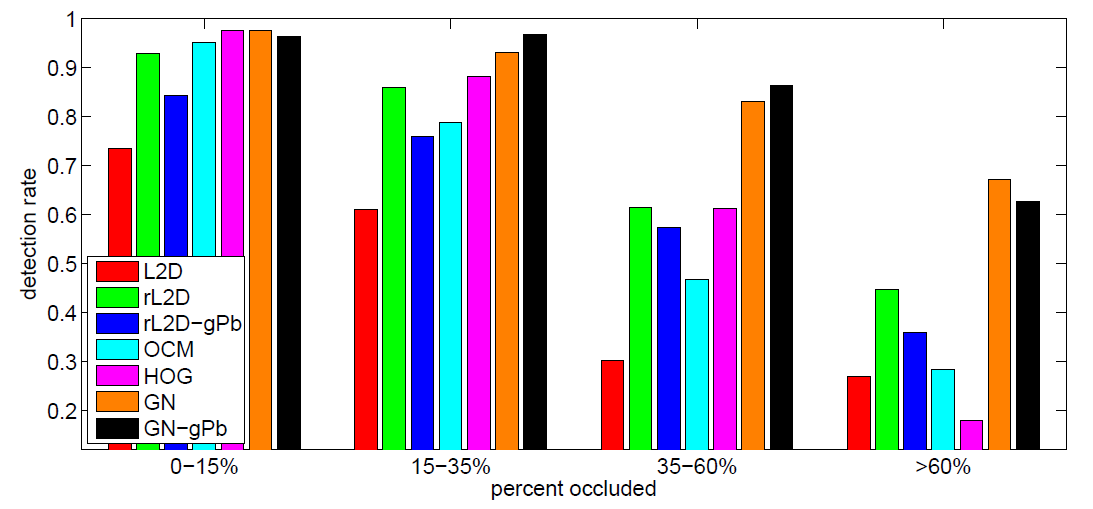

We validate our method's performance in shape-based object instance detection on the challenging CMU Kitchen Occlusion Dataset. The dataset contains 1600 images of 8 texture-less objects in real, cluttered environments and is split evenly into two parts: 800 images for a single view of an object and 800 for multiple views of an object. The single-view part contains ground truth labels of the occlusions and has roughly equal amounts of partial occlusion (1-35%) and heavy occlusion (35-80%). We demonstrate significant improvement over state-of-the-art methods in shape matching and object instance detection, especially when objects are under severe occlusions.

Figure 3. Example of shape matching using Gradient Networks. From left to right, we show: 1) template, 2) window, 3) local shape potential, and 4) probability that each pixel matches the template.

Figure 4. Detection rate under different occlusion levels. The GN and GN-gPb methods are more robust to occlusions than other state-of-the-art shape matching methods.
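As a rough illustration of how a detection rate per occlusion level could be tabulated, the sketch below groups object instances into the partial (1-35%) and heavy (35-80%) ranges used by the dataset and reports the fraction detected in each. The function name `detection_rate_by_occlusion`, the choice to place exactly 35% in the heavy bin, and the sample values are all assumptions made for illustration; they are not results or code from the paper.

```python
def detection_rate_by_occlusion(occlusions, detected):
    """Fraction of detected instances per occlusion bin (hypothetical helper).

    occlusions: occluded fraction of each instance, in [0, 1]
    detected:   whether each instance was detected
    """
    bins = {
        "partial (1-35%)": lambda o: 0.01 <= o < 0.35,
        # Assumption: an instance at exactly 35% counts as heavy.
        "heavy (35-80%)": lambda o: 0.35 <= o <= 0.80,
    }
    rates = {}
    for name, in_bin in bins.items():
        hits = [bool(d) for o, d in zip(occlusions, detected) if in_bin(o)]
        # None marks an empty bin rather than reporting a misleading 0.
        rates[name] = sum(hits) / len(hits) if hits else None
    return rates


if __name__ == "__main__":
    # Made-up values for illustration only.
    occ = [0.10, 0.20, 0.50, 0.70]
    det = [True, True, True, False]
    print(detection_rate_by_occlusion(occ, det))
```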

Dataset

The CMU Kitchen Occlusion Dataset (CMU_KO8) contains 1600 images of 8 objects under severe occlusions in cluttered household environments, with ground truth. [ZIP 213MB]

References

[1] Edward Hsiao and Martial Hebert. Gradient Networks: Explicit Shape Matching Without Extracting Edges. AAAI Conference on Artificial Intelligence (AAAI), July 2013.

Funding

This material is based upon work partially supported by the National Science Foundation under Grant No. EEC-0540865.