Temporal Segmentation of Human Behavior

People

Abstract

Temporal segmentation of human motion into actions is a crucial step for understanding and building computational models of human motion. Several factors make this task challenging, including the large variability in the temporal scale and periodicity of human actions and the exponential number of possible movement combinations. We formulate temporal segmentation as an extension of standard clustering algorithms. In particular, this paper proposes Aligned Cluster Analysis (ACA), a robust method to temporally segment streams of motion capture data into actions. ACA extends standard kernel k-means clustering in two ways: (1) the cluster means contain a variable number of features, and (2) a dynamic time warping (DTW) kernel is used to achieve temporal invariance. Experimental results on synthetic data, the Carnegie Mellon Motion Capture database, and several action databases demonstrate the effectiveness of ACA.
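The two ingredients named in the abstract, a DTW-style kernel between variable-length segments and kernel k-means over those segments, can be illustrated with a minimal sketch. This is not the authors' code and it makes simplifying assumptions: segment boundaries are taken as given (ACA itself also searches over boundaries), the DTW-style kernel is assumed to be the dynamic time alignment kernel (DTAK) with a Gaussian frame similarity, and the function names, the `sigma` bandwidth, and the farthest-point seeding are hypothetical choices made for illustration. Because the cluster means live only implicitly in the kernel's feature space, segments of different lengths can share a cluster without being resampled. DTAK is not guaranteed to be positive semidefinite, so the kernel k-means distances here should be read as a heuristic.

```python
import numpy as np


def frame_similarity(a, b, sigma=1.0):
    """Gaussian similarity between every frame of a (n x d) and b (m x d)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))


def dtak(a, b, sigma=1.0):
    """Dynamic time alignment kernel between two variable-length segments.

    Finds the monotone alignment that maximizes the weighted sum of frame
    similarities (diagonal steps count twice) and normalizes by n + m,
    so identical segments score exactly 1.
    """
    k = frame_similarity(a, b, sigma)
    n, m = k.shape
    u = np.full((n + 1, m + 1), -np.inf)
    u[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            kij = k[i - 1, j - 1]
            u[i, j] = max(u[i - 1, j] + kij,
                          u[i - 1, j - 1] + 2.0 * kij,
                          u[i, j - 1] + kij)
    return u[n, m] / (n + m)


def dtak_gram(segments, sigma=1.0):
    """Symmetric Gram matrix of DTAK values between all pairs of segments."""
    s = len(segments)
    K = np.empty((s, s))
    for i in range(s):
        for j in range(i, s):
            K[i, j] = K[j, i] = dtak(segments[i], segments[j], sigma)
    return K


def kernel_kmeans(K, k, labels=None, n_iter=50):
    """Kernel k-means on a precomputed Gram matrix K (s x s)."""
    s = K.shape[0]
    diag = np.diag(K)
    if labels is None:
        # Hypothetical deterministic seeding: farthest points in feature space.
        d = diag[:, None] + diag[None, :] - 2.0 * K
        seeds = [0]
        for _ in range(1, k):
            seeds.append(int(d[:, seeds].min(axis=1).argmax()))
        labels = d[:, seeds].argmin(axis=1)
    labels = np.asarray(labels).copy()
    for _ in range(n_iter):
        dist = np.full((s, k), np.inf)
        for c in range(k):
            idx = np.flatnonzero(labels == c)
            if idx.size == 0:
                continue  # empty cluster keeps distance inf, never chosen
            # ||phi(x_i) - mean_c||^2 expanded using kernel values only.
            dist[:, c] = (diag - 2.0 * K[:, idx].mean(axis=1)
                          + K[np.ix_(idx, idx)].mean())
        new_labels = dist.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break
        labels = new_labels
    return labels


if __name__ == "__main__":
    # Two synthetic "actions", each performed at two different speeds.
    def action_a(n):
        return (2 + np.sin(2 * np.pi * np.linspace(0, 1, n)))[:, None]

    def action_b(n):
        return (-2 + np.sin(4 * np.pi * np.linspace(0, 1, n)))[:, None]

    segments = [action_a(20), action_b(30), action_a(40), action_b(25)]
    print(kernel_kmeans(dtak_gram(segments), k=2))
```

In this toy setup the two speeds of each action should land in the same cluster, which is the temporal invariance the DTW kernel is meant to provide; a full segmentation method would additionally choose where each segment starts and ends.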

Citation


Feng Zhou, Fernando de la Torre and Jessica K. Hodgins,

"Hierarchical Aligned Cluster Analysis for Temporal Segmentation of Human Motion", submitted to IEEE Transactions on Pattern Analysis & Machine Intelligence (PAMI), 2010.


Feng Zhou, Fernando de la Torre and Jessica K. Hodgins,

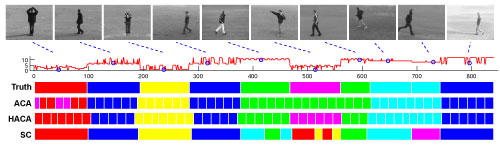

"Unsupervised Temporal Segmentation of Human Activities in Video", Workshop on Temporal Segmentation in conjunction with Advances in Neural Information Processing Systems (NIPSW), 2009. [PDF] [Bibtex]


Feng Zhou, Fernando de la Torre and Jessica K. Hodgins,

"Aligned Cluster Analysis for Temporal Segmentation of Human Motion", International Conference on Automatic Face and Gesture Recognition (FG), 2008. [PDF] [Poster] [Bibtex]

Code


The code is available here.

Results

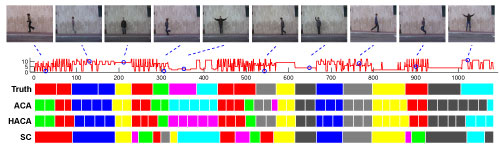

CMU Motion Capture Database

Videos: [Ground Truth] [ACA] [HACA] [GMM]. More results are available here.

Acknowledgements and Funding


This work was partially supported by the National Science Foundation under Grant No. EEEC-0540865.

The data used in this project was obtained from mocap.cs.cmu.edu.

The database was created with funding from NSF EIA-0196217.


Human Sensing Lab