Emphatic Visual Speech Synthesis

People

- Javier Melenchon

- Elisa Martinez

- Fernando de la Torre

- Jose A. Montero

Abstract

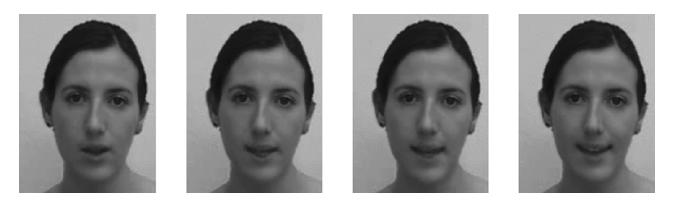

The synthesis of talking heads has been a flourishing research area over the last few years. Since human beings have an uncanny ability to read people's faces, most related applications (e.g., advertising, video-teleconferencing) require absolutely realistic photometric and behavioral synthesis of faces. This paper proposes a person-specific facial synthesis framework that allows high realism and includes a novel way to control visual emphasis (e.g., the level of exaggeration of the visible articulatory movements of the vocal tract). There are three main contributions: a geodesic interpolation with visual unit selection, a parameterization of visual emphasis, and the design of minimum-size corpora. Perceptual tests with human subjects reveal high realism, with perceptual scores similar to those of real samples. Furthermore, the visual emphasis level and two communication styles show a statistical interaction.

Citation

Javier Melenchon, Elisa Martinez, Fernando de la Torre, and Jose A. Montero

"Emphatic Visual Speech Synthesis," IEEE Transactions on Audio, Speech, and Language Processing, vol. 17, no. 3, pp. 459-468, March 2009.

Acknowledgements and Funding

This work was supported in part by the Spanish Ministry of Education and Science under Grant TEC2006-08043/TCM. The associate editor coordinating the review of this manuscript and approving it for publication was Dr. Helen Meng.

Copyright notice

Human Sensing Lab