Bundle Adjustment Without Correspondences

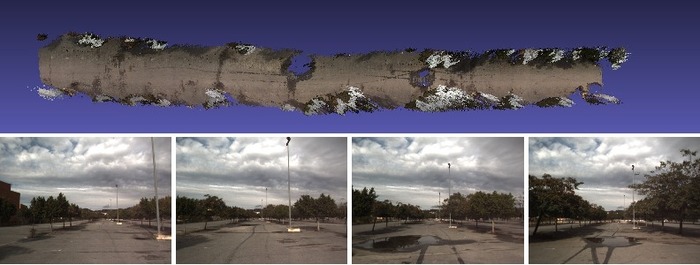

We propose a novel algorithm for the joint refinement of structure and motion parameters directly from image data, without relying on fixed and known correspondences. In contrast to traditional bundle adjustment (BA), where the optimal parameters are determined by minimizing the reprojection error of tracked features, the proposed algorithm maximizes photometric consistency and estimates the correspondences implicitly. Since the algorithm does not require correspondences, it is not limited to corner-like structure: any pixel with a nonvanishing gradient can be used in the estimation process. Furthermore, we demonstrate the feasibility of refining the motion and structure parameters simultaneously using the photometric error in unconstrained scenes, without restrictive assumptions such as planarity. The algorithm is evaluated on a range of challenging outdoor datasets and is shown to improve upon the accuracy of state-of-the-art VSLAM results obtained by minimizing the reprojection error with traditional BA and loop closure. Additional details are available on arXiv and ResearchGate.
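To make "estimates the correspondences implicitly" concrete, here is a minimal sketch of a single photometric residual. It assumes a pinhole camera with intrinsics `K = (fx, fy, cx, cy)`, a per-pixel depth, and a rotation `R` and translation `t` mapping the reference frame to the current one; all function names are hypothetical and this is not the authors' implementation. The "correspondence" is simply wherever the current pose and depth estimates project the reference pixel.

```python
import math


def bilinear(img, x, y):
    """Sample a grayscale image (list of rows) at a sub-pixel location."""
    h, w = len(img), len(img[0])
    if not (0 <= x <= w - 1 and 0 <= y <= h - 1):
        return None  # projection falls outside the image
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = (1 - ax) * img[y0][x0] + ax * img[y0][x1]
    bottom = (1 - ax) * img[y1][x0] + ax * img[y1][x1]
    return (1 - ay) * top + ay * bottom


def gradient_magnitude(img, u, v):
    """Central-difference gradient magnitude; used to select usable pixels."""
    gx = 0.5 * (img[v][u + 1] - img[v][u - 1])
    gy = 0.5 * (img[v + 1][u] - img[v - 1][u])
    return math.hypot(gx, gy)


def photometric_residual(ref, cur, u, v, depth, R, t, K):
    """Residual I_cur(w(u, v)) - I_ref(u, v), where w back-projects the
    reference pixel with its depth, applies (R, t), and re-projects."""
    fx, fy, cx, cy = K
    X = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        return None  # point ends up behind the current camera
    x = fx * Xc[0] / Xc[2] + cx  # the implicit correspondence
    y = fy * Xc[1] / Xc[2] + cy
    sample = bilinear(cur, x, y)
    return None if sample is None else sample - ref[v][u]
```

A BA-style solver built on this would sum the squared residuals over all selected pixels (for example, those whose `gradient_magnitude` exceeds a threshold) in all frames and minimize over poses and depths, for instance with Gauss-Newton or Levenberg-Marquardt; that outer loop is omitted here.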

Keywords: vision-based SLAM, precise refinement of pose and structure without correspondences.

People: Dr. Brett Browning (Robotics Institute), and Dr. Simon Lucey (Robotics Institute)

Vision in the Dark using Dense Alignment of Binary Descriptors

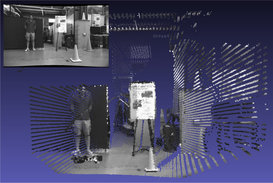

In this work, we address the question of robustness under degraded imaging conditions. For instance, we ask: can we perform robust pose estimation in near darkness?

We developed a direct camera tracking approach based on binary feature descriptors. Additional details, code, and data are available online.

The approach has been tested on 8-DOF planar homography tracking, affine template alignment, and visual odometry. It runs faster than real time and can handle challenging illumination conditions.
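Why binary descriptors help in near darkness can be shown in a few lines. The sketch below assumes a census-style descriptor (each of the 8 neighbors compared against the center pixel); the exact descriptor used in this work may differ, and the names are hypothetical. Because only the ordering of intensities matters, any gain-and-bias brightness change with positive gain leaves the descriptor unchanged, whereas raw intensities change. For dense alignment, each bit can be treated as a separate image channel and aligned with a Lucas-Kanade style objective.

```python
def census(img, x, y):
    """8-bit census-style descriptor: bit k is set when the k-th neighbor
    is strictly brighter than the center pixel."""
    center = img[y][x]
    offsets = [(-1, -1), (0, -1), (1, -1), (-1, 0),
               (1, 0), (-1, 1), (0, 1), (1, 1)]
    code = 0
    for k, (dx, dy) in enumerate(offsets):
        if img[y + dy][x + dx] > center:
            code |= 1 << k
    return code


def descriptor_channels(img):
    """Split the census codes of interior pixels into 8 binary images,
    one per bit, so that each can be aligned like an intensity channel."""
    h, w = len(img), len(img[0])
    channels = [[[0] * w for _ in range(h)] for _ in range(8)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            code = census(img, x, y)
            for k in range(8):
                channels[k][y][x] = (code >> k) & 1
    return channels
```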

Keywords: robust pose estimation, template tracking, challenging imagery, low light, real-time, direct VSLAM, binary descriptors, Lucas-Kanade.

People: Dr. Brett Browning (Robotics Institute), and Dr. Simon Lucey (Robotics Institute)

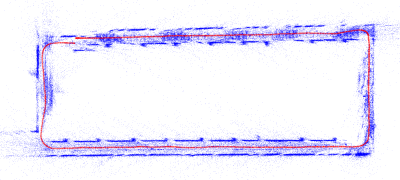

Direct Stereo Visual Odometry

Visual odometry (VO) is the problem of estimating the relative change in pose between two cameras sharing a common field of view. We developed a robust and efficient stereo VO algorithm that directly uses pixel intensities, and functions of pixel intensities, to enable robust real-time pose estimation. In addition to the pose, the environment is reconstructed in 3D with enough density for effective robotic perception and interaction with the world.

Research questions and challenges include:

- How to enhance the robustness of vision-only pose estimation when the pipeline of keypoint localization and matching is unreliable?

- How to achieve real-time performance using a single CPU core?
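The core idea of direct (intensity-based) motion estimation can be illustrated with a 1D toy: instead of matching keypoints, a shift is estimated by minimizing intensity differences with Gauss-Newton, in the style of Lucas-Kanade. This is a minimal sketch under simplifying assumptions (a single translation parameter, linear interpolation, synthetic signals); the actual stereo VO problem optimizes a 6-DOF pose over many pixels using stereo depth, and all names here are hypothetical.

```python
import math


def sample(signal, x):
    """Linearly interpolate a 1D intensity profile at real-valued x (clamped)."""
    x = min(max(x, 0.0), len(signal) - 1.0)
    i = min(int(x), len(signal) - 2)
    a = x - i
    return (1 - a) * signal[i] + a * signal[i + 1]


def align_1d(template, image, shift=0.0, iters=20, margin=4):
    """Gauss-Newton estimate of s minimizing sum_x (image(x + s) - template(x))^2."""
    for _ in range(iters):
        H = 0.0  # J^T J (Gauss-Newton Hessian approximation)
        b = 0.0  # J^T r
        for x in range(margin, len(template) - margin):
            r = sample(image, x + shift) - template[x]
            # dr/ds is the image gradient at the warped position.
            g = 0.5 * (sample(image, x + shift + 1) - sample(image, x + shift - 1))
            H += g * g
            b += g * r
        if H == 0.0:
            break
        step = -b / H
        shift += step
        if abs(step) < 1e-10:
            break
    return shift


# Toy data: the "current" profile is the template moved right by 2.4 pixels.
template = [math.sin(0.3 * x) for x in range(60)]
image = [math.sin(0.3 * (x - 2.4)) for x in range(60)]
estimated = align_1d(template, image)
```

Every pixel with a nonzero gradient contributes to `H` and `b`, which is what lets direct methods keep working when keypoint detection and matching are unreliable.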

Keywords: dense reconstruction, stereo, visual odometry, visual SLAM (VSLAM), real-time, pose estimation.

People: Dr. Brett Browning (Robotics Institute), and Dr. Simon Lucey (Robotics Institute)

Continuous-time 3D Mapping

Scanning sensors acquire measurements incrementally, one scan at a time. For instance, a rolling shutter camera acquires an image one line of pixels at a time. Hence, for accurate measurements, the sensor must remain stationary, or move slowly relative to its scanning rate.
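A small worked example of why motion matters for a scanning sensor: with a rolling shutter, every row has its own capture time, so the sensor moves while one image is being read out. The sensor numbers below (480 rows, 30 microseconds per row, 2 m/s) are hypothetical and chosen only for illustration.

```python
def row_timestamps(t_start, n_rows, line_delay):
    """Capture time of each row of a rolling-shutter image (row 0 first)."""
    return [t_start + r * line_delay for r in range(n_rows)]


def intra_frame_displacement(n_rows, line_delay, speed):
    """Distance travelled at constant speed between the first and last row."""
    readout = (n_rows - 1) * line_delay
    return readout * speed


# Hypothetical sensor: 480 rows, 30 microseconds per row, moving at 2 m/s.
stamps = row_timestamps(0.0, 480, 30e-6)
drift = intra_frame_displacement(480, 30e-6, 2.0)  # about 0.029 m
```

Almost 3 cm of motion within a single frame is far larger than typical reconstruction tolerances, which is why each row (or each LIDAR return) needs its own pose.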

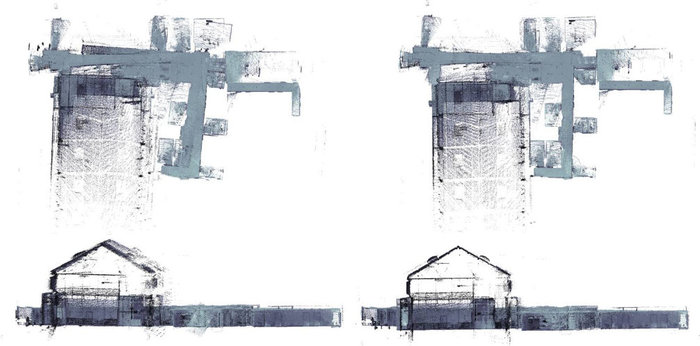

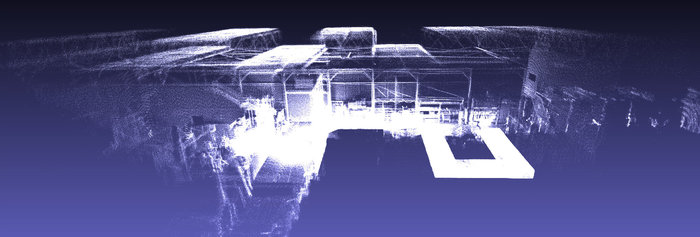

A particularly challenging scanning sensor is actuated LIDAR, as its scanning rate is almost always slower than the robot motion. We developed a 3D mapping system that models the motion of the sensor as a continuous-time trajectory. The system is bootstrapped from visual odometry computed with a rigidly mounted camera. Additional information can be found here.
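The keywords mention B-splines; a common way to represent a continuous-time trajectory is a uniform cubic B-spline whose value can be queried at the timestamp of every individual measurement. The sketch below assumes uniformly spaced control points and covers position only; orientation would need a rotation spline (for example, a cumulative formulation on SO(3)), which is not shown. Names are hypothetical and not taken from this system.

```python
import math


def cubic_bspline_weights(u):
    """Uniform cubic B-spline basis weights for local parameter u in [0, 1)."""
    u2, u3 = u * u, u * u * u
    return (
        (1 - u) ** 3 / 6.0,
        (3 * u3 - 6 * u2 + 4) / 6.0,
        (-3 * u3 + 3 * u2 + 3 * u + 1) / 6.0,
        u3 / 6.0,
    )


def spline_position(ctrl, t0, dt, t):
    """Evaluate a 3D position at time t from control points spaced dt apart.

    Segment i blends control points ctrl[i .. i+3]; valid for
    t0 <= t < t0 + (len(ctrl) - 3) * dt.
    """
    s = (t - t0) / dt
    i = int(math.floor(s))
    if i < 0 or i + 3 >= len(ctrl):
        raise ValueError("t is outside the spline's valid time range")
    w = cubic_bspline_weights(s - i)
    return tuple(sum(w[j] * ctrl[i + j][k] for j in range(4)) for k in range(3))
```

Each LIDAR return, stamped with its own acquisition time, can then be transformed with the pose queried at that time; estimating the control points from visual odometry and LIDAR data is the actual mapping problem.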

Keywords: Actuated LIDAR, continuous-time SLAM, continuous 3D trajectory estimation, B-Splines.

People: Dr. Brett Browning (Robotics Institute), L. Douglas Baker (NREC). Sensing assembly was provided by Carnegie Robotics.

Actuated LIDAR and Camera Assembly Perception

LIDAR is an accurate source of distance measurements, which makes it particularly useful for robot perception. By actuating a 2D scanning LIDAR, we sweep a 3D volume in space. This actuation technique is commonly used in robotics for two main reasons: first, such scanning sensors are cheaper to build; second, their scanning pattern and field of view are highly customizable. For an actuated LIDAR to be accurate, we must determine the offset between the center of rotation of the LIDAR's spinning mirror and the center of rotation of the actuation mechanism. We call this problem calibration of the internal parameters, or of the kinematic chain. To this end, we have developed a calibration algorithm that:

- Is fully automated.

- Does not require calibration targets.

- Makes minimal assumptions about the environment, and hence can be used in the field.
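To see what the internal parameters do, here is a simplified kinematic chain, assuming the actuator rotates the 2D scanner about the x-axis and that the unknown mirror-to-axis offset is a pure translation `t_off`. Real assemblies also have rotational offsets, and the chain used in this work may be parameterized differently; names are hypothetical. The usage example shows that ignoring a 2 cm offset displaces every point by 2 cm in a direction that changes with the actuator angle, which distorts the swept point cloud.

```python
import math


def rot_x(phi):
    """Rotation matrix about the x-axis by angle phi (radians)."""
    c, s = math.cos(phi), math.sin(phi)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def matvec(R, v):
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def lidar_point(r, theta, phi, t_off):
    """Map a 2D scan return (range r, bearing theta) taken at actuator
    angle phi into the actuator frame, given the mirror-to-axis offset."""
    p_scan = [r * math.cos(theta), r * math.sin(theta), 0.0]  # scan plane
    p_axis = [p_scan[i] + t_off[i] for i in range(3)]  # internal offset
    return matvec(rot_x(phi), p_axis)  # actuation about x


# Same return computed with and without a hypothetical 2 cm offset.
with_offset = lidar_point(5.0, 0.3, 0.7, [0.0, 0.02, 0.0])
ignored = lidar_point(5.0, 0.3, 0.7, [0.0, 0.0, 0.0])
```

A target-free calibration can estimate `t_off` (and any rotational terms) by requiring that surfaces observed at different actuator angles agree.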

LIDAR, however, does not capture color information about the environment. By attaching a camera to the sensing assembly, we are able to obtain colorized 3D point clouds, which are useful for perception tasks. To colorize the LIDAR points, we must determine the rigid-body transformation between the LIDAR and the camera, which, together with the camera intrinsics, maps 3D points to their projections onto the image. This is particularly challenging because the calibration procedure must make use of long-range LIDAR measurements to reduce bias. To address this problem, we developed a calibration target based on the image of a circle. The image of a circle with a known radius allows us to determine its center in 3-space, as well as the normal of its supporting plane, at long range. This information is then used to obtain the calibration parameters with a nonlinear optimization procedure.
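Once the LIDAR-to-camera transformation is known, colorization reduces to projecting each point and reading the pixel it lands on. The sketch below assumes a pinhole camera with intrinsics `K = (fx, fy, cx, cy)`, no lens distortion, and an image stored as a list of rows; it shows only how the calibration result is used, not the circle-based estimation itself. Names are hypothetical.

```python
def project_points(points, R, t, K, width, height):
    """Map LIDAR points into the image with a rigid transform (R, t) and
    pinhole intrinsics K = (fx, fy, cx, cy). Returns (index, u, v) for the
    points that land in front of the camera and inside the image."""
    fx, fy, cx, cy = K
    hits = []
    for idx, p in enumerate(points):
        Xc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
        if Xc[2] <= 0:
            continue  # behind the camera: cannot be colorized
        u = fx * Xc[0] / Xc[2] + cx
        v = fy * Xc[1] / Xc[2] + cy
        if 0 <= u < width and 0 <= v < height:
            hits.append((idx, u, v))
    return hits


def colorize(points, image, R, t, K):
    """Pair each visible point with the color of the pixel it falls in."""
    height, width = len(image), len(image[0])
    return [(points[idx], image[int(v)][int(u)])
            for idx, u, v in project_points(points, R, t, K, width, height)]
```

Errors in `R` and `t` shift projections by an amount that grows with range, which is one reason long-range measurements matter during calibration.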

Keywords: Actuated LIDAR, LIDAR camera calibration, internal parameters calibration, sensing assembly kinematics chain.

People: Dr. Brett Browning (Robotics Institute), L. Douglas Baker (NREC). Sensing assembly was provided by Carnegie Robotics.

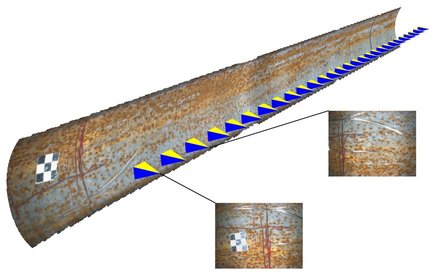

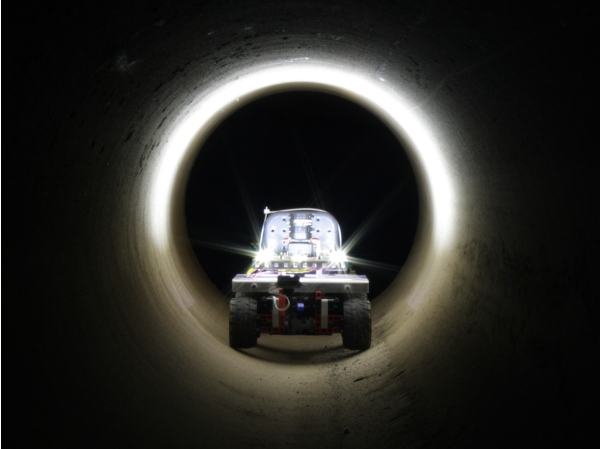

LPV: LNG Pipe Vision

Accurate assessment of pipe corrosion rates is critical to safety and productivity in Liquefied Natural Gas (LNG) plants. Due to the growing complexity of LNG plants, safety concerns, the time-consuming nature of manual pipe inspection, and cost, current inspection techniques cannot cover every pipe in the network. Often, inspectors resort to extrapolation, which predicts corrosion rates instead of measuring them directly. In this work, we investigate the use of computer and machine vision techniques for building 3D models of the pipe surface with sub-millimeter accuracy. Specifically, a pipe crawler robot can carry a camera, or a set of cameras, that captures images of the internal pipe surface. From those images, we can create 3D models that are reliable and repeatable for the tasks of corrosion inspection and change detection.

Some challenges and research questions we are trying to address include:

- How to choose and control lighting inside the pipe?

- Which camera systems are best suited for reliable 3D reconstruction inside a pipe (monocular, stereo, omnidirectional, a ring of cameras, etc.)?

- How to exploit the known geometry of a pipe to achieve sub-millimeter accuracy (for 3D reconstruction and visual odometry) that might not be possible in general environments?
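As a small illustration of exploiting known pipe geometry, the sketch below measures how far reconstructed surface points deviate from a nominal cylinder. It assumes the pipe axis is already known and parallel to z (in practice the axis would itself have to be estimated) and uses meters; names are hypothetical. For a known axis, the least-squares radius is simply the mean radial distance, since minimizing the sum of (r_i - R)^2 over R gives the mean.

```python
import math


def radial_deviation(points, radius, axis_xy=(0.0, 0.0)):
    """Signed distance of each surface point from a nominal cylinder whose
    axis is parallel to z through axis_xy. Positive means the surface is
    farther from the axis than nominal (e.g. wall loss on an inner surface)."""
    ax, ay = axis_xy
    return [math.hypot(x - ax, y - ay) - radius for x, y, _ in points]


def fit_radius(points, axis_xy=(0.0, 0.0)):
    """Least-squares radius for a known axis: the mean radial distance."""
    ax, ay = axis_xy
    r = [math.hypot(x - ax, y - ay) for x, y, _ in points]
    return sum(r) / len(r)
```

A strong geometric prior like this lets sub-millimeter deviations stand out against the reconstruction, which would be harder in a general scene with no known shape.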

Keywords: 3D reconstruction, stereo, visual odometry, registration, change detection.

People: Dr. Brett Browning (Robotics Institute), PI; Dr. Peter Rander (NREC), Co-PI; Dr. Peter Hansen (Carnegie Mellon Qatar)

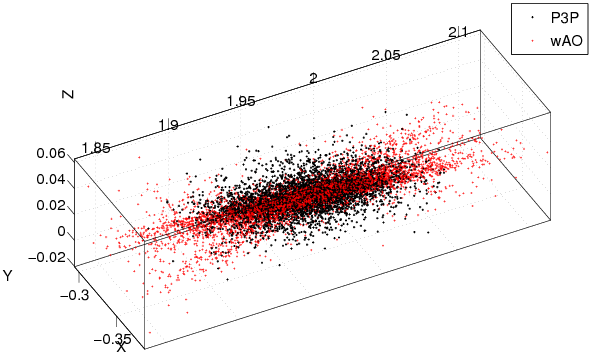

Visual Odometry and Pose Estimation

Visual odometry (VO), or motion estimation from imagery, has become the method of choice for robot motion estimation in many situations. The low cost, high information content, and passive nature of cameras make them ideal for a variety of applications. Two main approaches to visual odometry exist: (1) methods derived from state estimation in robotics (EKF, particle filters, etc.) and (2) Structure-from-Motion (SFM) based methods common in the computer vision literature. In this work, we are interested in SFM-based methods, as they are relatively more extensible and stable.

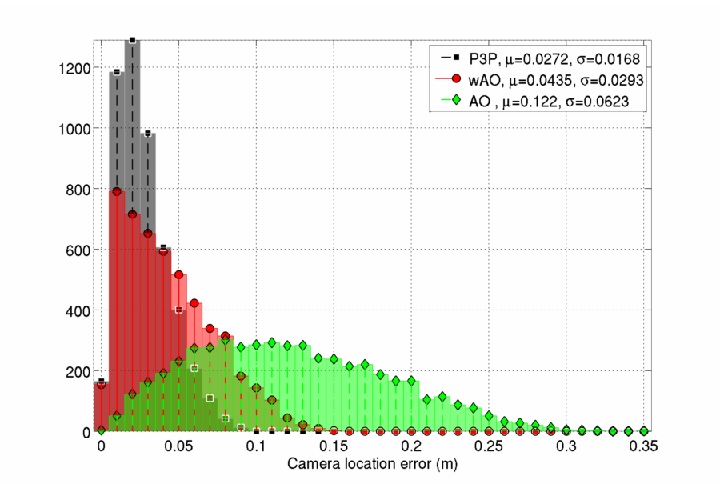

An SFM-based stereo VO pipeline consists of three main steps: (1) feature extraction, 3D triangulation, and tracking; (2) pose estimation from tracked features; and (3) nonlinear refinement of motion and structure parameters. Pose estimation is at the core of SFM-based VO, as pose estimation methods need to tolerate feature extraction and tracking errors. Furthermore, the estimated pose needs to be accurate enough for nonlinear refinement methods to converge. In this work, we conducted a large empirical study of several classes of pose estimation algorithms, evaluating them on simulated data as well as indoor and outdoor datasets. The aim is to give insight into the different algorithms and the main factors that affect the accuracy of pose estimation from stereo imagery.
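One of the classes listed in the keywords, Absolute Orientation (AO), aligns two sets of corresponding 3D points (for example, stereo triangulations from consecutive frames). The 3D solution is usually obtained in closed form via an SVD or quaternions; to stay dependency-free, the sketch below shows the planar analogue, where the least-squares rotation has a closed form with `atan2`. It is an illustration under that simplification, not one of the evaluated implementations, and the name is hypothetical.

```python
import math


def absolute_orientation_2d(src, dst):
    """Least-squares rotation angle and translation (R, t) minimizing
    sum |R p_i + t - q_i|^2 for paired 2D points p_i in src, q_i in dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy  # center both sets
        num += px * qy - py * qx  # sum of cross products
        den += px * qx + py * qy  # sum of dot products
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)
```

With real tracks, feature extraction and tracking errors produce outliers, so a closed-form solver like this is typically wrapped in a robust scheme such as RANSAC and followed by nonlinear refinement.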

Keywords: Stereo visual odometry, Pose estimation, Perspective-N-Points (PnP), Absolute Orientation (AO)

People: Dr. Brett Browning (Robotics Institute), Dr. Bernardine Dias (Robotics Institute)

Technology for Developing Communities

Despite the importance of literacy to most aspects of life, underserved communities continue to suffer from low literacy rates, especially in globally prevalent languages such as English. In the summer of 2009, I participated in iSTEP (innovative Student Technology ExPerience), TechBridgeWorld's summer research internship program. One of the iSTEP projects was to create and evaluate culturally relevant educational technology and games for child literacy. I was the iSTEP 2009 Technical Lead for the Literacy Tools project, working with primary school teachers and students at the Mlimani Primary School in Dar es Salaam, Tanzania.

I took the lead on technical development and testing for the project, which resulted in an interactive mobile phone game that quizzes students on English grammar and an online Content Authoring Tool that allows teachers to create their own questions and answers for the game. The project has since expanded to other user groups (middle school deaf and hard-of-hearing students, migrant workers, and university students) in different countries (the United States, Qatar, and Bangladesh, respectively) and has become a program at TechBridgeWorld, where it is formally known as TechCaFE (Technology for Customizable and Fun Education).

Keywords: ICTD, English Literacy, Mobile Phones

People: Rotimi Abimbola, Hatem Alismail, Sarah Belousov, Beatrice Dias, Freddie Dias, M. Bernardine Dias, Imran Fanaswala, Bradley Hall, Daniel Nuffer, Ermine A. Teves, Jessica Thurston, Anthony Velázquez