Scalable Regularized Tomography

X-ray computerized tomography (CT) and other related imaging

modalities, such as positron emission tomography (PET), are

notorious for their excessive computational demands. While the

earliest algorithms in tomography, such as filtered

backprojection (FBP), are now trivial in two-dimensions and

scalable in three-dimensions due to their ``embarassingly

parallel'' nature, the more noise-resistant probabilistic

tomography methods are still prohibitive, especially in

three-dimensions and at high resolutions. In this project we are

studying a new class of algorithms in which probabilistic

tomography becomes ``embarassingly parallel'' as well.

To understand how, recall that tomographs are produced by

converting observed projections into an image. For example, in

X-ray CT, X-ray beams directed at a patient are

attenuated to varying degrees by the different materials within the body.

On the opposite side of the patient, the attenuated beams are detected as

with an array of measurements called a projection. Such

projections are produced at many different angles around the patient.

In reality these measurements are noisy, and the relative noise level even

depends on the amount of attenuation. In particular, projections through

dense materials such as bone and especially metal have lower

signal-to-noise ratio than projections through only flesh or water. Coping

with the large and spatially-varying fluctuations in the number of detected

photons often requires a probabilistic smoothing technique (also known as

regularization) to improve the image. The basic idea of regularization is

to infer a smooth image whose simulated projections approximate the

observed (but noisy) projections. The difficulty is that the standard

regularization specifies the two basic constraints in different

domains: (1) smoothness is an image property specified in the

image domain, while (2) closeness to the data is determined in the

projection domain. Enforcing these constraints computationally

requires a large sparse matrix-vector multiplication (projection or

backprojection) to convert between the two domains during processing. This

multiplication places heavy demands on system memory and memory bandwidth,

and requires significant network usage when parallelized (a global

summation).

The novelty in our formulation of regularized tomography is in the

analytical conversion of the smoothness constraint itself from the

image to the projection domain, before any computations begin. Thus the

most difficult part of the computation takes place entirely in the

projection domain. The standard (and expensive) practice of

numerically iterating between the image and projection domains is avoided.

Another benefit of the conversion of the smoothness

constraint to the projection domain is the decoupling of a large system of

regularization equations into many small systems of simpler regularization

equations. The difficult computation of regularized tomography

becomes ``embarassingly parallel'', so that latency tolerant and ideally

scalable parallel computations are possible, as our results

show in 2-d.

Demonstration

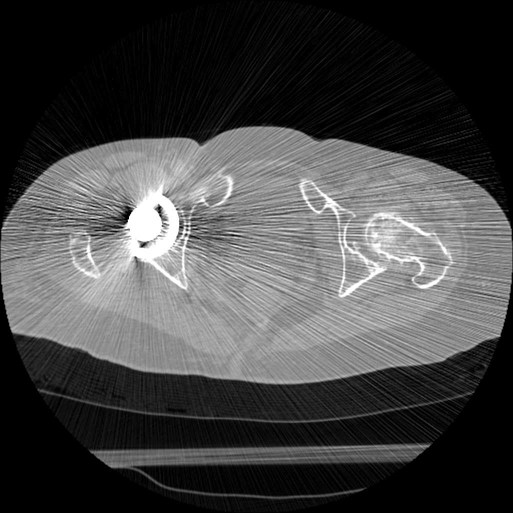

Before applying scalable decoupled regularization (click on

image to zoom):

This is a CT scan of a human hip with a metal hip implant shown

on the left. Observe the streak artifacts emanating from the

implant.

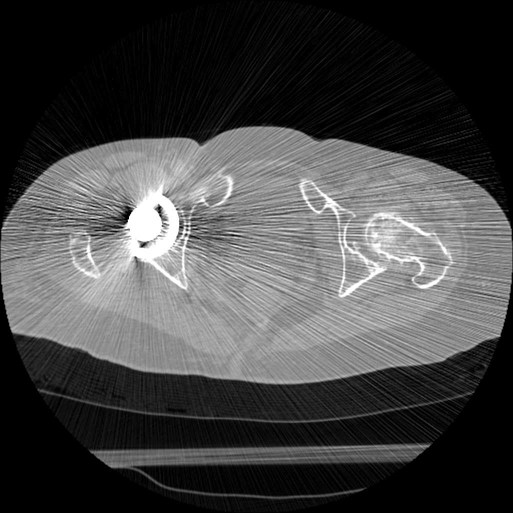

After applying scalable decoupled regularization (click on

image to zoom):

This is the result of our algorithm. Note that the streaking has been

reduced without a significant increase in blur.

Funding

This material is based upon work supported by the National

Science Foundation under Grant No. 0305719. Any opinions,

findings, and conclusions or recommendations expressed in this

material are those of the author(s) and do not necessarily

reflect the views of the National Science Foundation.