Automatically and accurately locating a person's body parts in an image is an important and challenging vision problem. A solution could be used in a wide range of applications, such as visual surveillance, human-computer interfaces, performance measurement of athletes, virtual reality, and teleconferencing. Although humans can easily estimate the locations of body parts from a single image, the problem is inherently difficult for a computer. The difficulty stems from the many degrees of freedom of the human body, self-occlusion, clothing, and ambiguities in recovering a person's 3D pose from a 2D image.

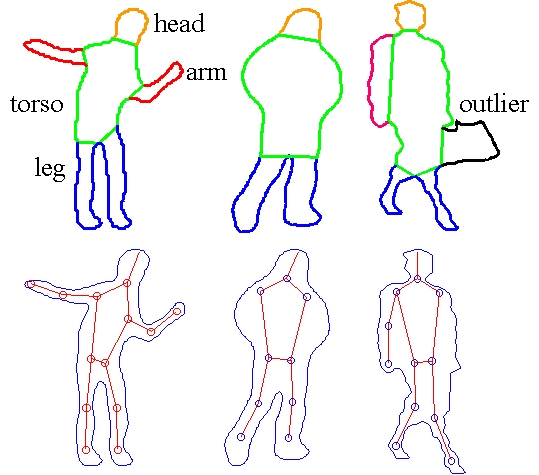

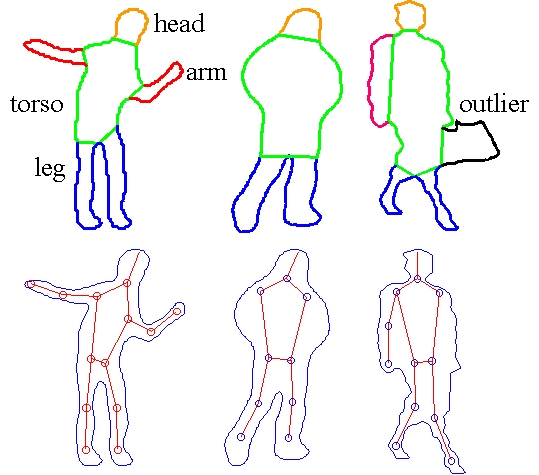

In this work, I developed a TRS-invariant probabilistic model (TRS stands for translation, rotation, and scaling) to encode the shapes of the body parts, and a TRS-invariant similarity measure to label the body parts. A recursive context reasoning (RCR) algorithm is introduced to integrate the human model with the identified body parts and predict the shapes and locations of the parts missed by the contour detector. Body part localization is thereby improved iteratively.
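
To make the idea of TRS invariance concrete, here is a minimal sketch of one generic way to score two contours so that the score is unaffected by translation, rotation, and scaling. It is not the similarity measure used in this work, whose details are not given here. It assumes a Procrustes-style comparison in which both contours are lists of corresponding points in the same order; the function names `normalize`, `trs_similarity`, and `transform` are hypothetical.

```python
import cmath
import math


def normalize(points):
    """Center a contour at its centroid and scale it to unit norm.

    Centering removes translation; unit norm removes scale.
    Points are (x, y) pairs, stored internally as complex numbers.
    """
    pts = [complex(x, y) for x, y in points]
    centroid = sum(pts) / len(pts)
    centered = [p - centroid for p in pts]
    norm = math.sqrt(sum(abs(p) ** 2 for p in centered))
    if norm == 0:
        raise ValueError("degenerate contour: all points coincide")
    return [p / norm for p in centered]


def trs_similarity(a, b):
    """Return a score in [0, 1]; 1 means b is a translated, rotated,
    and scaled copy of a (assumed: points correspond one to one)."""
    if len(a) != len(b):
        raise ValueError("contours must have the same number of points")
    na, nb = normalize(a), normalize(b)
    # A rotation multiplies every point by the same unit complex number,
    # so taking the magnitude of the inner product removes rotation.
    return abs(sum(p.conjugate() * q for p, q in zip(na, nb)))


def transform(points, tx, ty, angle, scale):
    """Apply scaling, rotation (radians), then translation to a contour."""
    rot = cmath.rect(scale, angle)
    out = []
    for x, y in points:
        z = complex(x, y) * rot + complex(tx, ty)
        out.append((z.real, z.imag))
    return out


if __name__ == "__main__":
    limb = [(0, 0), (4, 0), (4, 1), (0, 1)]      # elongated rectangle
    moved = transform(limb, 3.0, -2.0, 0.7, 2.5)  # same shape, new pose
    square = [(0, 0), (1, 0), (1, 1), (0, 1)]     # different shape
    print(trs_similarity(limb, moved))
    print(trs_similarity(limb, square))
```

The first score is 1 up to floating-point error, and the second is noticeably lower. This sketch depends on a known point correspondence and starting point along the contour, which a practical measure for detected contours would have to handle separately.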

Body part labeling and joint localization:

3D pose recovery:

L. Zhao, C. Thorpe, "Recursive Context Reasoning for Human Detection and Part Identification", IEEE Workshop on Human Modeling, Analysis, and Synthesis, Hilton Head Island, June 16, 2000.