Project 5: Spectral Processing

Download all project-related files here.

Due Nov 7 at 11:59 p.m.

1. Short Questions

As always, show your work where applicable. Please include your answers for this section in ANDREWID-p5-answers.txt.

- Granular Synthesis

What is the typical range of durations used in granular synthesis, in milliseconds? Explain in one or two sentences why this range is best.

- Linear predictive coding

- Briefly explain why LPC requires much less data than the original signal.

- When making the voiced/unvoiced decision, if the amplitude of the residual is high in comparison to the amplitude of the original input signal, would the original signal probably be voiced or unvoiced? Briefly explain why in 2-3 sentences.

- Short-time Fourier Transform

- If the sample rate is 48 kHz and the FFT window size is 2048 samples, what is the frequency resolution (bin size) of the spectrum?

- What is the purpose of the window function in the FFT?

- Psychoacoustics

- What is the relationship between the amplitude of a signal and its perceived loudness, for any given frequency?

- Linear

- Logarithmic

- Inverse

- No relationship

- What is the relationship between pitch and fundamental frequency?

- Linear

- Logarithmic

- Inverse

- No relationship

- According to Figure 23.2 (Roads p. 1057), if we sweep a sine tone with constant amplitude from A5 to A3, how will the loudness (perceived amplitude) in phons change?

- Convolution

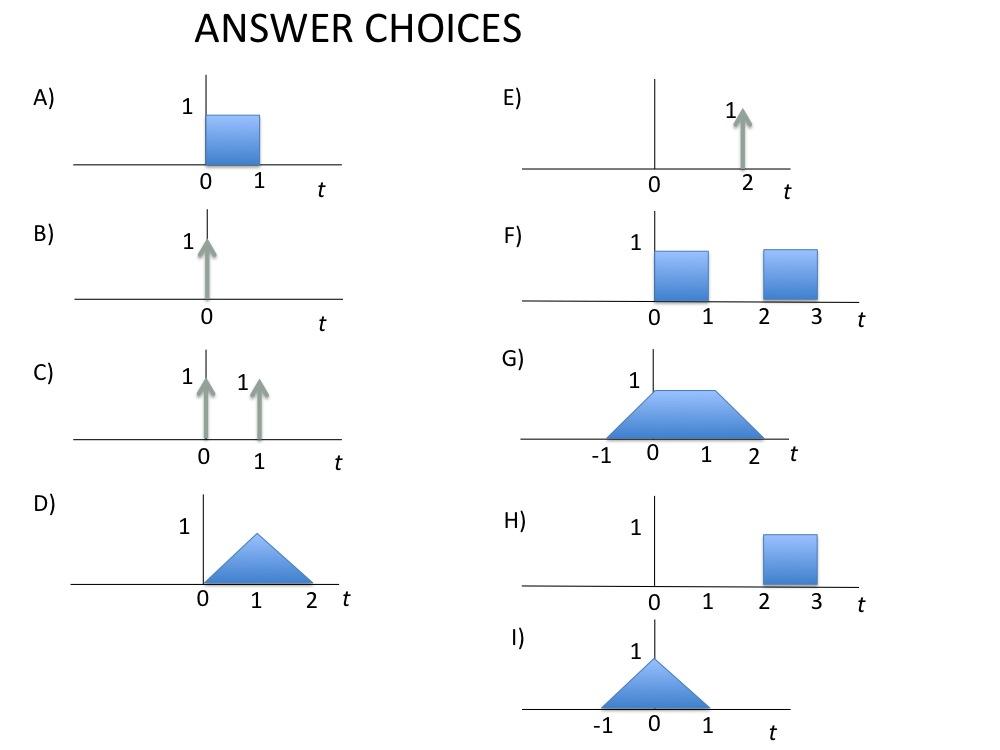

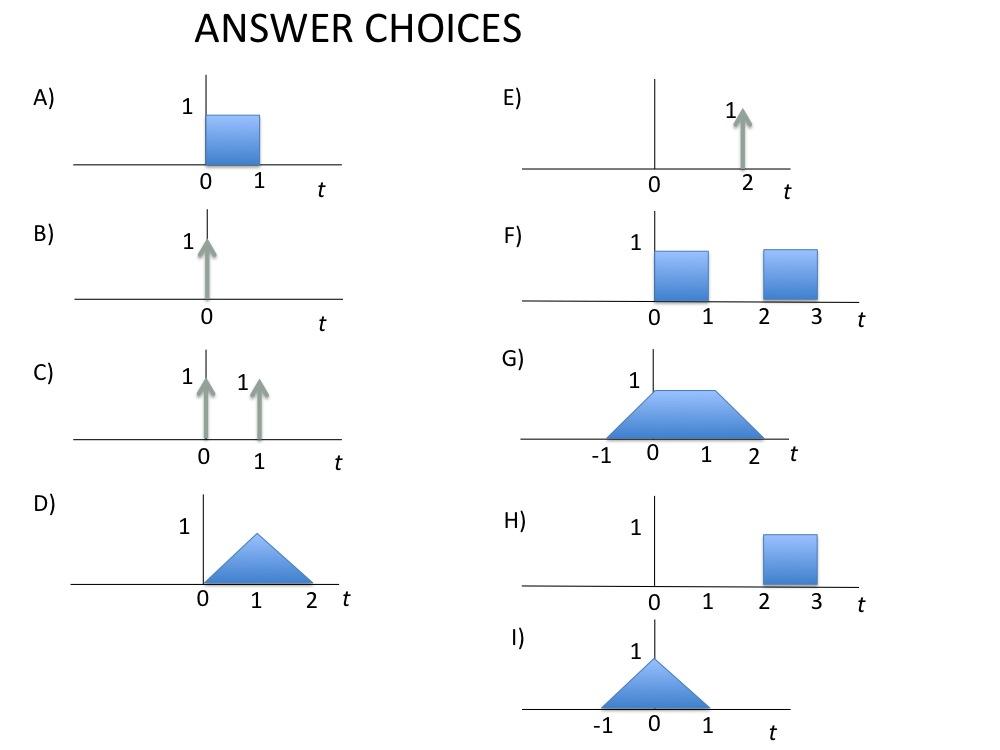

For each pair X, Y below, compute the convolution X*Y. The convolution will be one of the graphs labeled A through I below; tell us which graph represents the convolution for each X and Y pair. Note that the up-arrow represents an instantaneous impulse, also called a delta function.[^1] Convolution with an impulse shifts a function by the time of the impulse.
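The shifting property can be checked numerically. The following is a minimal Python sketch, not part of the assignment materials: the helper `convolve` and the sample values are made up for illustration, and it works on discrete sequences, where a unit impulse is a single sample equal to 1.

```python
def convolve(x, y):
    """Discrete convolution of two finite sequences (lists of numbers)."""
    out = [0.0] * (len(x) + len(y) - 1)
    for i, xi in enumerate(x):
        for j, yj in enumerate(y):
            out[i + j] += xi * yj
    return out

# An arbitrary short "function" (illustrative values only).
x = [1.0, 2.0, 3.0]

# A unit impulse located at time index 2.
impulse = [0.0, 0.0, 1.0]

shifted = convolve(x, impulse)
print(shifted)  # x delayed by 2 samples: [0.0, 0.0, 1.0, 2.0, 3.0]
```

The output is the original sequence delayed by the position of the impulse, which is the discrete counterpart of the statement above.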

- Vocal Sounds

The following images are spectra for "ah" (as in father), "ee" (as in bee), and "oh" (as in no). Identify the correct vowel label for each spectrum and explain your reasoning. (The horizontal axis is kHz.) You should find a chart of vowel sounds and their formant frequencies in the textbook or on the web.

- Filters

What type of filter should you use for each of the following applications? Choose from low-pass, high-pass, band-pass, notch, and "not possible".

- Remove a pitched noise around 1423Hz in a recording

- Filter a voice signal to 200Hz to 1900Hz in order to transfer through the telephone network

- Remove the vocal part from a recording

- Remove wind rumble from a recording of birdsong

- Antialiasing

2. Sampling

Find a sound to loop from freesound.org. You should look for a sustained musical tone (anything with pitch and amplitude changes will be harder to loop). Save it as ANDREWID-p5-sample-src.wav. Your goal is to find loop start and end points so that you can play a sustained tone using Nyquist's sampler() function. Make a sound file with two 4-second-long tones (assuming that your loop is much less than 4 seconds long). The tones should have different pitches.

Hints: Recall that sampler() takes three parameters: pitch, modulation, and sample. pitch works as it does in osc(), and modulation works as it does in fmosc(). Please use const(0, 4), which means "zero frequency modulation for 4 seconds." (Or you can use const(0, 1) and stretch everything by 4.) The sample is a list:

(_sound pitch-step loop-start_)

where sound is your sample. If you read sound from a file with s-read(), you can use the dur: keyword to control when the sound ends, which is the end time of the loop. pitch-step is the nominal pitch of the sample, e.g., if you simply play the sample as a file and it has the pitch F4, then use F4. loop-start is the time from the beginning of the file (in seconds) where the loop begins. You will have to listen carefully with headphones and possibly study the waveform visually with Audacity to find good loop points. Your sound should NOT have an obvious buzz or clicks due to the loop, nor should it be obviously a loop - it should sound like a sustained musical note.

Multiply your output by a suitable envelope and encapsulate your sampler and envelope in a function. Remember that the envelope should not fade in because that would impose a new onset to your sample, which should already have an onset built-in. To get an envelope that starts immediately, use a breakpoint at time 0, e.g., pwl(0, 1, …, 4).

Your final output, named ANDREWID-p5-sample.wav, should be generated in Nyquist, e.g.,

play seq(my-sampler(pitch: c4), my-sampler(pitch: f4)) \~ 4

Turn in your source code as ANDREWID-p5-sample.sal.

3. Composition

In this assignment you will be experimenting with spectral processing. Spectral processing means manipulating data in the spectral domain, where sound is represented as a sequence of overlapping grains, and each grain is represented as a weighted sum of sines and cosines of different frequency components.

Warmup Exercise

We have provided you with the spectral-process.sal file to help you get started. See that file for documentation on examples of spectral processing.

Your task is to invert the lower portion of each spectral frame so that low frequencies become high, and high frequencies become low. We're going to ask you to implement a very specific algorithm so that you can tell by ear if your code is working.

You can copy and modify example 3 in spectral-process.sal. Example 3 randomizes the phase in the spectral frames, so you will have to modify the code to do spectral inversion. The spectral inversion algorithm swaps the lower frequencies in the spectrum and works as follows:

Swap frame[1] with frame[35] and frame[2] with frame[36]. frame[1] and frame[2] represent the real and imaginary parts of the lowest non-DC frequency. frame[35] and frame[36] represent the real and imaginary parts of some other frequency. Then swap frame[3] with frame[33] and frame[4] with frame[34]. Again, we're swapping the complex numbers starting at indexes 3 and 33. Next, we swap 5 and 31, etc. The reason for not swapping the entire spectrum is that there's not much musical information above 10kHz. We do not want to move all the activity from 0 to 4kHz up to 18-22kHz where it would be mostly inaudible. The choice to swap exactly 36 coefficients is arbitrary. The formula for the result is:

new frame[1 + i * 2] = old frame[35 - i * 2] and

new frame[2 + i * 2] = old frame[36 - i * 2], for i = 0, 1, …, 17

otherwise, new frame[j] = old frame[j]

In your implementation, you can modify frame in place, but be careful about swapping so as not to overwrite a value that you will need later.
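The index pattern and the in-place caution can be illustrated outside of SAL. This is a Python sketch under stated assumptions, not the required SAL implementation: the function name `invert_low_bins` is hypothetical, and the frame is modeled as a plain list laid out as described above (index 0 untouched, then real and imaginary parts in adjacent pairs). Swapping each pair once, working from both ends toward the middle, means only 9 swaps of complex values are needed and no value is overwritten before it is used.

```python
def invert_low_bins(frame):
    """Reverse the order of the 18 complex bins stored in frame[1..36], in place."""
    for i in range(9):  # 9 swaps cover all 18 complex bins
        lo = 1 + 2 * i   # index of the real part of the lower bin
        hi = 35 - 2 * i  # index of the real part of the upper bin
        # Tuple assignment swaps both values at once, so nothing is lost.
        frame[lo], frame[hi] = frame[hi], frame[lo]                  # real parts
        frame[lo + 1], frame[hi + 1] = frame[hi + 1], frame[lo + 1]  # imaginary parts
    return frame

# Use the index itself as the stored value so the mapping is easy to read.
old = list(range(40))
new = invert_low_bins(list(old))

print(new[1], new[2])    # values that used to be at indexes 35 and 36
print(new[35], new[36])  # values that used to be at indexes 1 and 2
```

Note that looping i over all of 0 to 17 with in-place swaps would swap every pair twice and restore the original frame, which is one way the "be careful" warning can bite.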

Other parameters are important: The fft-dur should equal 1024 / 44100.0 and skip-period should equal 256 / 44100.0, so spectral frames will overlap by 75%. The analysis should use a Hann window. The file to process is rpd-cello.wav, which is included in the Project 5 package.
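As a quick check of the 75% figure, the arithmetic can be written out. The sketch below is in Python rather than SAL, the constant names are made up for illustration, and it assumes the periodic form of the Hann window, which may differ in detail from what the provided code uses. It also shows why this overlap pairs well with a Hann window: the four windows covering any sample add up to a constant.

```python
import math

SAMPLE_RATE = 44100.0
FFT_SIZE = 1024  # samples per spectral frame
HOP = 256        # samples between the starts of successive frames

fft_dur = FFT_SIZE / SAMPLE_RATE
skip_period = HOP / SAMPLE_RATE
overlap = 1.0 - skip_period / fft_dur  # fraction of a frame shared with the next

def hann(n, size):
    """Periodic Hann window value at sample n (assumed form)."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * n / size)

# For each position within one hop, add the 4 overlapping window values.
window_sums = [
    sum(hann(n + k * HOP, FFT_SIZE) for k in range(FFT_SIZE // HOP))
    for n in range(HOP)
]

print(overlap)                              # 0.75
print(min(window_sums), max(window_sums))   # both very close to 2.0
```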

If you implement all this correctly, the first half of your result will sound like invert.wav, which is included in the Project 5 package. We only included the first half of the expected result.

Turn in your code as ANDREWID-p5-invert.sal and your output sound as ANDREWID-p5-invert.wav.

Cross-synthesis

Example 4 in spectral-process.sal gives an example of spectral cross-synthesis. The idea here is to multiply the amplitude spectrum of one sound by the complex spectrum of the other. When one sound is a voice, vocal qualities are imparted to the other sound. Your task is to find a voice sample and a non-voice sample and combine them with cross-synthesis.
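The core operation can be sketched for a single pair of frames. This Python sketch is only an illustration of the idea and is not the example 4 code: the function name `cross_synthesize` and the bin values are hypothetical, and the spectra are modeled as lists of Python complex numbers rather than the real-valued frame arrays used in spectral-process.sal.

```python
def cross_synthesize(amplitude_source, phase_source):
    """Scale each complex bin of phase_source by the magnitude
    of the matching bin of amplitude_source."""
    return [abs(a) * p for a, p in zip(amplitude_source, phase_source)]

# Three made-up bins per frame, for illustration only.
voice_bins = [3 + 4j, 0j, 1 + 0j]       # reduced to magnitudes 5, 0, 1
other_bins = [1j, 2 + 2j, -2 + 0j]      # keeps its phase information

result = cross_synthesize(voice_bins, other_bins)
print(result)  # [5j, 0j, (-2+0j)]
```

Swapping the two arguments changes which sound contributes phase, so the two orderings generally give different results.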

You may use the example 4 code as a starting point, but you should experiment with parameters to get the best effect, and you should modify at least one parameter to achieve an interesting effect. In particular, the len parameter controls the FFT size (it should be a power of 2). Larger values give more frequency resolution and sonic detail, but smaller values, by failing to resolve individual partials, sometimes work better to represent the overall spectral shape of vowel sounds. Furthermore, it matters which signal is used for phase and which is reduced to only amplitude information. Speech is more intelligible with phase information, but you might prefer less intelligibility.

You will find several sound examples on which to impose speech in example 4, but you should find your own sound to modulate. Generally, noisy signals, rich chords or low, bright tones are best - a simple tone doesn't have enough frequencies to modify with a complex vocal spectrum. Also, signals that do not change rapidly will be less confusing, e.g., a sustained orchestra chord is better than a piano passage with lots of notes.

Turn in your code as ANDREWID-p5-cross.sal and your two input sounds as ANDREWID-p5-cross1.wav and ANDREWID-p5-cross2.wav.

Composition

Create between 30 and 60 seconds of music using spectral processing. You can use any techniques you wish, and you may use an audio editor for finishing touches, but your piece should clearly feature spectral processing.

You may use cross-synthesis from the previous section, but you may also use other techniques, including spectral inversion or any of the examples in spectral-process.sal. While you may use example code, you should strive to find unique sounds and unique parameter settings to avoid sounding like you merely added sounds to existing code and turned the crank. For example, you might combine time-stretching in example 2 with other examples, and you can experiment with FFT sizes and other parameters rather than simply reusing the parameters in the examples.

Hint: While experimenting, process small bits of sound, e.g., 5 seconds, until you find some good techniques and parameters. Doing signal processing in SAL outside of unit generators (e.g., spectral processing) is very slow. With longer sounds, remember that after the sound is computed, the Replay button can be used to play the saved sound from a file, so even if the computation is not real-time, you can still go back and replay it without stops and starts.

Turn in your code in comp/ANDREWID-p5-comp.sal and your final output composition as comp/ANDREWID-p5-comp.wav. In comp/ANDREWID-p5-comp.txt, describe the spectral processing in your piece and your motivation or inspiration behind your composition. Finally, include your source sounds in comp/origin/.

4. Audacity plugin

Create a Nyquist plugin for Audacity using SAL syntax. Your plugin can do anything you want, but it should have at least 2 parameters that can be set by the user in the Audacity-generated dialog box. You can read about creating a plugin in the online documentation. Notice in the documentation that there is an optional header line that looks like this:

;info "text"

Use this to make your plugin self-documenting. When we run your plugin, the dialog box should explain (1) what the plugin computes, (2) what the parameters do, (3) suggested parameter settings (the default settings should be reasonable too), (4) anything else you would like your users to know.

Turn in your plugin code in ANDREWID-p5-plugin.ny.

Hand-in

Please hand in a zip file containing the following files (in the top level, not inside an extra directory):

- Answers for questions in part 1:

ANDREWID-p5-answers.txt

- Source code, input sound, and output sound for part 2:

ANDREWID-p5-sample.sal, ANDREWID-p5-sample-src.wav, and ANDREWID-p5-sample.wav

- Source code and output for spectrum inversion:

ANDREWID-p5-invert.sal and ANDREWID-p5-invert.wav

- Source code and output for cross-synthesis:

ANDREWID-p5-cross.sal and ANDREWID-p5-cross.wav

- Composition files: all of these should be in the comp/ folder:

- Short description:

ANDREWID-p5-comp.txt

- Composition code:

ANDREWID-p5-comp.sal

- Any external source files you used in your composition:

in the origin/ folder

- Composition sound file:

ANDREWID-p5-comp.wav

- Nyquist Plugin:

ANDREWID-p5-plugin.ny

[^1]: We’ve described and used these informally, but if they are new to you, the delta function is zero everywhere except at one point, and at that one point the value is infinite such that the integral is 1. Formally, it is the limit of a rectangle that is ε wide and 1/ε high.