RAPTOR: Robots, Agents & People Team: Operation Rescue

Carnegie Mellon University and University of Pittsburgh

Pittsburgh, Pennsylvania

Our goal is to develop a team of heterogeneous robots to aid humans in exploring a disaster site. We are currently targeting the RoboCup Rescue domain. In 2003, we used the PERs to place third at the U.S. Open. Since then, we have outfitted the PERs with faster motors so they can cover more ground within the time limits, and we have expanded our robot team to include a Pioneer P3AT and a Tarantula robot, which we use for stair climbing. We have also developed a high-fidelity simulator for the search and rescue domain with a kinematically correct model of the PER.

Related Publications:

Human-Robot Teaming for Search and Rescue, IEEE Pervasive Computing, 2005

RoboCup2004 - US Open

Visual Odometry

Intel Research Pittsburgh and CMU Robotics Institute

Pittsburgh, Pennsylvania

Related Publications:

A Robust Visual Odometry and Precipice Detection System Using Consumer-grade Monocular Vision, ICRA 2005

Techniques for Evaluating Optical Flow for Visual Odometry in Extreme Terrain, IROS 2004

Visual Odometry Using Commodity Optical Flow, Intelligent Systems Demonstrations at AAAI 2004

Robot Photographer

Intel Research Pittsburgh and CMU Robotics Institute

Pittsburgh, Pennsylvania

Related Publications:

Leveraging Limited Autonomous Mobility to Frame Attractive Group Photos, ICRA 2005

HAMEX project

NASA / Goddard Space Flight Center

Greenbelt, Maryland

The HAMEX project is a Director Discretionary Funded (DDF) effort to explore the use of Personal Digital Assistants (PDAs) to enhance children's education about space science and exploration. The HAMEX activity began five years ago and has culminated in the HAMEX Visitor's Center exhibit. Students come to the GSFC Visitor's Center and play a 30-minute game that uses the Carnegie Mellon PER robot to simulate an actual Martian rover mission.

Adaptive Sensor Fleet - Terrestrial Robotics

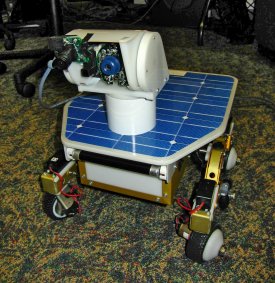

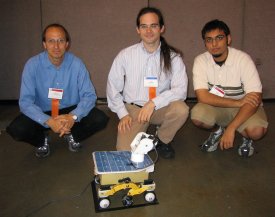

MERS: Multi-Purpose Exoterrain for Robotic Studies

NASA / Goddard Space Flight Center

Greenbelt, Maryland

The Multipurpose Exoterrain for Robotic Studies (MERS) is a testbed environment created by NASA Goddard Space Flight Center (GSFC) researchers to test concepts for surface exploration of the Moon, Mars, and beyond. The MERS testbed includes a semi-realistic environment for physically testing robots, sensors, and advanced exploration concepts; three Personal Exploration Rovers; and a robot locator system that can track up to ten robots.

Autumnal Quiltmaker

CMU; College of Fine Arts; School of Art

Pittsburgh, Pennsylvania

Ian Ingram is both a technologist and an artist, and his work takes a variety of forms: mechatronic installations, conceptual proposals, interactive animations, and artworks meant to be released into the wild. In Autumnal Quiltmaker, Ian is using the PER vehicle developed by Illah Nourbakhsh's lab as the star of a robotic backyard quiltmaking party. In the fall of 2005, the PER will spend whole days meandering around neighbourhood lawns cluttered with bright, colourful leaves. In the morning, passersby on their way to work will ponder the inexplicable activities of the busy bot, but on their way home they will see the result of the PER's day of work: all the leaves sorted across the yard by colour, from red on one side to yellow on the other.

Curriculum Development - Robotics and Java

Ohlone College

Fremont, California

Ohlone College will use the PER to teach multithreading and 'fuzzy' objectives to Java programming students. Combining a speech package with the PER software will bring asynchronous processing home when students make the PER walk and talk at the same time. Detecting an object by color and navigating to it, regardless of its distance, will require students to write programs that reach their objective through a "try and refine" approach, a very different paradigm from traditional business software.
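As a rough illustration of the "walk and talk at the same time" exercise, the sketch below runs two tasks concurrently on a Java `ExecutorService` and collects their events in a thread-safe log. It is only a sketch under stated assumptions: `walk` and `talk` are hypothetical stand-ins that sleep and log instead of calling the real PER software or any particular speech package, and the step and word timings are made up.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class WalkAndTalk {
    // Thread-safe list, so both tasks can append events at the same time.
    private final List<String> log = new CopyOnWriteArrayList<>();

    // Hypothetical stand-in for a motion command; a real program would call the robot's own API here.
    private void walk(int steps) throws InterruptedException {
        for (int i = 0; i < steps; i++) {
            log.add("step " + i);
            Thread.sleep(50); // pretend each step takes 50 ms
        }
    }

    // Hypothetical stand-in for a call into a speech package.
    private void talk(String phrase) throws InterruptedException {
        for (String word : phrase.split(" ")) {
            log.add("say " + word);
            Thread.sleep(30); // pretend each word takes 30 ms to speak
        }
    }

    /** Runs walking and talking concurrently and returns the interleaved event log. */
    public List<String> run() {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Future<Object> walking = pool.submit(() -> { walk(3); return null; });
            Future<Object> talking = pool.submit(() -> { talk("hello from mars"); return null; });
            walking.get(); // wait for both tasks; get() rethrows any task failure
            talking.get();
            return log;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status for callers
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("task failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        new WalkAndTalk().run().forEach(System.out::println);
    }
}
```

Running it several times shows the "step" and "say" lines interleaving differently from run to run, which is exactly the nondeterminism students have to reason about once two activities share one robot.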

The Anthropocentric Robotics Project

Carnegie Mellon University Robotics Institute and University of Pittsburgh Learning Research and Development Center

Pittsburgh, Pennsylvania

As more robots are designed to interact with people on a regular basis, research into human-robot interaction has become more widespread. However, little work has addressed long-term human-robot interaction, in which a person uses a robot over a period of weeks or months. As people spend more time with a robot, how they make sense of it, their “cognitive model” of the robot, is expected to change. To examine this cognitive model, a study was conducted involving the Personal Exploration Rover (PER) museum exhibit and the museum employees responsible for it. The results suggest several components of a cognitive model that are relevant to human-robot interaction.

Related Publications:

Long-term Human-Robot Interaction: The Personal Exploration Rover and Museum Docents, The 12th International Conference on Artificial Intelligence in Education, 2005

UPCLOSE (University of Pittsburgh Center for Learning in Out of School Environments)

Investigating Children's Assumptions About Intelligent Technologies

Learning Research and Development Center, University of Pittsburgh

Pittsburgh, Pennsylvania

This research project investigates children’s ideas about the capabilities of intelligent technology. Specifically, we are interested in whether children think of robots as ‘intelligent’ (i.e., able to think, calculate, plan, remember and learn), and whether this affects their interactions with robots. We began by asking children a series of questions about the capabilities of the PER, the Sony QRIO, a computer, a calculator, and some living things. Children were then given the opportunity to engage with the PER in a goal-directed task. We are currently analyzing the data to see how children’s ideas about the PER’s intelligent capabilities influenced their patterns of interaction.

Related Publications:

Investigating Children’s Beliefs About Artificially Intelligent Artifacts, Conference poster, 2005

Kinematics Theory Versus Practice in Wheeled Mobile Robots

University of Oklahoma

Norman, Oklahoma

The Personal Exploration Rover (PER) is being used to research robot kinematics. One goal of the project is to implement Ackermann steering on the PER. Ackermann steering is the steering geometry used by cars: the inner front wheel turns more sharply than the outer one so that all wheels roll about a common turning center. Once Ackermann steering is in place, a web interface will be developed so that the PER can be controlled from any computer with web access. Through this interface, users will be able to create and test programs on the PER, and the PER's actual motion will then be compared with the theoretical kinematic model of the programmed motion.
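For a conventional car-like layout with wheelbase L and track width W, turning about a center at distance R from the midpoint of the rear axle, the textbook Ackermann angles are inner = atan(L / (R - W/2)) and outer = atan(L / (R + W/2)), which together satisfy cot(outer) - cot(inner) = W / L. The sketch below computes these angles. It assumes front-wheel steering on a four-wheel layout, which may not match the PER's actual drive and steering arrangement, and the dimensions in `main` are hypothetical rather than measured PER values.

```java
public final class AckermannSteering {
    private AckermannSteering() {}

    // Steer angle (radians) of the inner front wheel for a turn of the given radius,
    // measured from the turn center to the midpoint of the rear axle.
    public static double innerAngle(double wheelbase, double track, double radius) {
        requireValidRadius(track, radius);
        return Math.atan(wheelbase / (radius - track / 2.0));
    }

    // Steer angle (radians) of the outer front wheel for the same turn.
    public static double outerAngle(double wheelbase, double track, double radius) {
        requireValidRadius(track, radius);
        return Math.atan(wheelbase / (radius + track / 2.0));
    }

    // The turn center must lie outside the vehicle; otherwise the inner angle is undefined.
    private static void requireValidRadius(double track, double radius) {
        if (radius <= track / 2.0) {
            throw new IllegalArgumentException("radius must exceed half the track width");
        }
    }

    public static void main(String[] args) {
        double wheelbase = 0.30, track = 0.25, radius = 1.0; // hypothetical dimensions in metres
        double inner = innerAngle(wheelbase, track, radius);
        double outer = outerAngle(wheelbase, track, radius);
        System.out.printf("inner %.2f deg, outer %.2f deg%n",
                Math.toDegrees(inner), Math.toDegrees(outer));
        // Ackermann condition: cot(outer) - cot(inner) should equal track / wheelbase.
        System.out.printf("cot difference %.4f, track/wheelbase %.4f%n",
                1 / Math.tan(outer) - 1 / Math.tan(inner), track / wheelbase);
    }
}
```

Predicted angles like these are the "theoretical" side of the comparison; the measured wheel angles and resulting path of the robot would be the "practice" side.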

Master of Software Engineering Studio Project - Polaris team

Carnegie Mellon University

Pittsburgh, Pennsylvania

The Polaris team created an architectural modeling tool and code generation system based on the architecture of the NASA Mission Data System (MDS) robotic control system. The modeling tool and code generation system were intended to maintain a concrete link between system design and implementation in the domain of spacecraft control systems. The PER served as a demonstration platform, showing that the system could generate code for a real robot.

Sub Vocal Speech Research Team - Discovery and Systems Health Group in the Intelligent Systems Division (code TI)

NASA Ames Research Center

Moffett Field, California

Sub Vocal Speech is a new human communication method that interprets tiny neural signals in the vocal tract rather than sounds. These electromyographic (EMG) signals arise from commands sent by the brain's speech center to the tongue and larynx muscles that produce audible words. Sub vocal speech intercepts these EMG signals before any sound is produced and infers the words that would have been said. As a result, audible sound is no longer required, and neither surrounding noise nor poor speech intelligibility hinders communication. What matters is that the EMG patterns produced are consistent. Such a system permits silent, inaudible speech to be used for vehicle control or auditory control in conditions of extremely high ambient noise.
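Why consistency matters can be seen with the simplest possible recognizer: store one feature vector (template) per word and label a new signal with the nearest template. The sketch below is a toy nearest-template classifier, not the NASA team's recognition software; the class name, the three-number feature vectors, and the command words are all hypothetical, and real EMG processing would involve signal filtering and feature extraction that are not shown here.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public final class EmgTemplateMatcher {
    // Hypothetical: each word is represented by a single stored feature vector (template).
    private final Map<String, double[]> templates = new LinkedHashMap<>();

    public void addTemplate(String word, double[] features) {
        templates.put(word, features.clone());
    }

    // Squared Euclidean distance between two feature vectors of equal length.
    public static double squaredDistance(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("feature length mismatch");
        }
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return sum;
    }

    // Returns the word whose template is closest to the input, or null if no templates exist.
    public String classify(double[] features) {
        String best = null;
        double bestDist = Double.POSITIVE_INFINITY;
        for (Map.Entry<String, double[]> e : templates.entrySet()) {
            double d = squaredDistance(features, e.getValue());
            if (d < bestDist) {
                bestDist = d;
                best = e.getKey();
            }
        }
        return best;
    }

    public static void main(String[] args) {
        EmgTemplateMatcher m = new EmgTemplateMatcher();
        m.addTemplate("stop", new double[] {0.9, 0.1, 0.2});  // made-up feature values
        m.addTemplate("left", new double[] {0.1, 0.8, 0.3});
        m.addTemplate("right", new double[] {0.2, 0.3, 0.9});
        System.out.println(m.classify(new double[] {0.85, 0.15, 0.25})); // prints "stop"
    }
}
```

If a speaker's signals for the same word drift far from the stored template, the nearest template can become the wrong word, which is why consistent EMG patterns matter more than whether any sound is produced.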

We are evaluating the PER as a test platform for sub vocal control software aimed at robotic first-responder location and fire-rescue robot concepts. Its primary role is to incorporate realistic mechanical actuator delays and dialog-generation feasibility tests into real-time sub vocal recognition software. Its small size and low cost make it a convenient first approximation for controlling larger-scale devices.
