Roger B. Dannenberg


Developing a Web Audio Worklet in C++ Using WASM

I was unable to find a single working open source code example that compiles and runs C++ in Web Audio. Maybe that will change by the time you read this. The main problems I set out to solve are: (1) Installing Emscripten (Emscripten's instructions are incomplete at best), (2) Compiling C++ code (Emscripten has changed, so most examples are broken), (3) Writing code to load and communicate with a Web Audio Worklet (this has always been confusing and poorly documented). This is all conducted on a MacBook Air M2 running Ventura (macOS) 13.5.2.

Ultimately, we want the following capabilities:

Emscripten installation is fragile. What I got to work was the following. Note that instead of the git clone line, you can just download and unzip emsdk-main.zip from the Code button on the emsdk GitHub page.

# run this in a NEW TERMINAL!
/Users/rbd % git clone https://github.com/emscripten-core/emsdk.git
/Users/rbd % cd emsdk
/Users/rbd/emsdk % ./emsdk install latest
/Users/rbd/emsdk % ./emsdk activate latest
/Users/rbd/emsdk % source ./emsdk_env.sh

Why do I say fragile? A few gotchas are:

You need both ./emsdk install latest and ./emsdk activate latest.

source ./emsdk_env.sh sets some environment variables that will influence future behavior. E.g. if you delete ~/emsdk, start over with the zip file and create ~/emsdk-main, then cd ~/emsdk-main; source ./emsdk_env.sh will fail! (Starting a new shell or carefully removing the environment variables EMSDK, EMSDK_PYTHON and EMSDK_NODE will probably fix this.)

You can now run emcc, but only in the current shell! For example: cd; emcc -v.

Emscripten comes with Web Audio test code in emsdk/upstream/emscripten/test/webaudio. I'm not sure yet how that works, so I'll copy the whole webaudio directory, renaming it to my ~/soundcool/online/example.

Compiling with -sMINIMAL_RUNTIME=1 does not work. That’s actually really promising, because removing the switch apparently does work.

I copied the compile recipe from this Issue to a Makefile I'll use to record how to build the full example. (See the sidebar for a link to source code.)

Even if you do a “hard” reload, browsers do not seem to reload modules. To eliminate this potential problem, I always restart the server with a new port for every test, e.g. the example below shows port 8138.

The simplest thing you can try is:

% # start in the example1 directory
% make
% cd web
% python3 -m http.server 8138

Run Chrome and visit the URL localhost:8138/test.html.

Unfortunately, the code will not work, and if you open the browser console (e.g. CMD-OPT-I), you'll see:

Uncaught ReferenceError: SharedArrayBuffer is not defined

To begin with, you can work around the security restriction by quitting Chrome and running it from the command line in another terminal using:

% /Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome --enable-features=SharedArrayBuffer

Note that the particular problem is that the Web Audio code uses SharedArrayBuffer, but this symbol is unbound if security requirements are not met. Enabling SharedArrayBuffer apparently leaves the security in place but makes an exception for SharedArrayBuffer.

Now, you can again visit localhost:8138/test.html (assuming your local server is still running) and you should hear noise when you click on the “Toggle playback” button.

Note: When I run this, the web page has two big black boxes that look like something failed. I’m not sure what this Emscripten-generated page is meant to show, but at least for this simple test, you can ignore this problem.

In example1, I have provided a small Python program that adds some headers that will enable Cross-Origin Isolation. The headers are:

Cross-Origin-Embedder-Policy: require-corp
Cross-Origin-Opener-Policy: same-origin

You can run the server from the example1/web directory using:

% ../webserver 8158

Quit the Chrome you started from the command line (to make sure you quit that Chrome, you can check for a new prompt in the Terminal window where you started it). Then start Chrome in the normal manner and visit localhost:8158/test.html. The “Toggle playback” button should work.

Now we have an ugly page that makes noise, and we need to isolate the means to write and execute C++ code in an audio worklet. The purpose for starting in this manner was to start with something that is simple to reproduce and shows some signs of working, even if it is not directly useful. Let’s move on from there.

The first cleanup is to remove most of the HTML from test.html, replacing it with a simple title. It would also be very helpful to test for Cross-Origin Isolation, so I have added the following to test.html:

<script>
  console.log("Executing script in test.html");
  if (!crossOriginIsolated) {
    console.error("crossOriginIsolated is " + crossOriginIsolated,
        "which will result in no definition for SharedArrayBuffer.\n",
        "Try serving pages with the following headers:\n",
        "  Cross-Origin-Embedder-Policy: require-corp\n",
        "  Cross-Origin-Opener-Policy: same-origin");
  }
</script>

You can test this by running python3 -m http.server 8200 and visiting localhost:8200/test.html. You will see on the browser console the crossOriginIsolated error followed by the Uncaught ReferenceError: .... At least now there is a clear explanation when you fail to run this with the necessary headers.

Since the original web/test.html is created by running emscripten, we rename the modified test.html to test2.html in the same directory as the Makefile. We modify Makefile to copy our test2.html to web/test2.html.

We also rename audioworklet.c to audioworklet.cpp, and change the name in Makefile. This eliminates an error message since, previously, we were invoking the em++ C++ compiler on a C file.

The final form of Example 2 is in the example2 directory (see the sidebar for example source code). You can run it from the directory example2/web using ../webserver 8159 and visiting localhost:8159/test2.html in the browser. Be sure to use test2.html and not test.html.

Next, we need to be able to communicate with the audio worklet. The main change is to add the flag -lembind to em++ and create some declarations in the source code to create and export functions to JavaScript. Here is what I added to audioworklet.cpp:

float example3(int a, float b) { return a + b; }
void set_gain(float g) { gain = g; }

EMSCRIPTEN_BINDINGS(my_module) {
    emscripten::function("example3", &example3);
    emscripten::function("set_gain", &set_gain);
}

The bindings code requires a header, the set_gain function sets a global variable gain that must be declared, and I need to apply gain in the audio generation, so the code also has these additions and changes:

// near the top
...
#include <emscripten/bind.h>

// after the includes
...
float gain = 1.0;

// in ProcessAudio() function
...
outputs[i].data[j] = (rand() / (float)RAND_MAX * 2.0f - 1.0f) * 0.3f * gain;
...
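The global gain is written by set_gain() on the main thread and read on the audio thread inside ProcessAudio(). That usually works for a simple gain, but in standard C++ an unsynchronized plain float shared between two threads is formally a data race. A minimal variation, assuming you are free to change the declaration, is to make gain a std::atomic<float> with relaxed loads and stores. The fill_noise helper below is hypothetical and stands in for the loop inside ProcessAudio(); this is a sketch, not the code in the example directory.

```cpp
#include <atomic>
#include <cassert>
#include <cstdlib>

// Shared between threads: written by the main thread, read by the audio thread.
// std::atomic<float> supports load/store without locks on common targets.
std::atomic<float> gain{1.0f};

// Same name and signature as the text's set_gain, but with an atomic store.
void set_gain(float g) {
    gain.store(g, std::memory_order_relaxed);
}

// Hypothetical stand-in for the inner loop of ProcessAudio():
// fills n samples with white noise in [-0.3, 0.3], scaled by the current gain.
void fill_noise(float *out, int n) {
    // Read the gain once so the whole block uses a single value.
    float g = gain.load(std::memory_order_relaxed);
    for (int j = 0; j < n; j++) {
        out[j] = (rand() / (float)RAND_MAX * 2.0f - 1.0f) * 0.3f * g;
    }
}
```

Reading gain once per block means a change takes effect at the next block boundary, which is usually what you want for a control like this.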

In this example, I renamed things from test and test2 to example3. Since I do not want the default example3.html generated by emscripten, I changed the compiler output flag to -o web/example3.js, which generates only example3.js and example3.ww.js.

I modified example3.html (formerly test2.html), adding buttons to call the new functions as follows:

...
<script>
  function call_example3() {
    var sum = document.getElementById("sum");
    sum.innerHTML = "" + Module.example3(5, 10.3);
  }
  function call_soft() {
    Module.set_gain(0.1);
  }
  function call_loud() {
    Module.set_gain(1.0);
  }
</script>
</head>
<body>
<h1>Example 3</h1>
<button type="button" onclick="call_example3();">Add</button>
<span id="sum"></span><br><br>
<button type="button" onclick="call_soft();">Soft</button>
<button type="button" onclick="call_loud();">Loud</button><br><br>
</body>

This test is not very pretty, but it is simple and has the essentials to create an audio module and to get data in and out (note that the example3() function returns a float to JavaScript). You can run the server from the example3/web directory using:

% ../webserver 8160

Then visit localhost:8160/example3.html. The “Soft” and “Loud” buttons change the gain variable. The “Add” button just returns and displays a sum to prove that we can compute and return values from C++.

A couple of remaining problems are (1) this code creates the Audio Context automatically, but in general we want to create the Audio Context in JavaScript and then create one or more instances of audio nodes based on WASM; (2) when we call WASM module functions, they run asynchronously with respect to audio processing. That’s fine (usually) for gain control, but what if we need to run some code at a clean point between two audio block computations? We do not want to use locks in a real-time audio thread, so we need to use message passing. Let’s implement all of that in Example 4.

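In Example 4, the audio context is created in C++, and JavaScript gets access to it through the global variable window.emgl_audio_context. The code fragments are shown below: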

// make the audio context in C++ function worklet_create:
EMSCRIPTEN_WEBAUDIO_T context = emscripten_create_audio_context(
        0 /* use default constructor options */);

// when the Audio Worklet Processor is ready, the C++
// AudioWorkletProcessorCreated callback runs and performs:
call_worklet_callback(audioContext, success);

// call_worklet_callback is embedded JavaScript as follows:
EM_JS(void, call_worklet_callback,
      (EMSCRIPTEN_WEBAUDIO_T audioContext, EM_BOOL success), {
    audioContext = emscriptenGetAudioObject(audioContext);
    window.emgl_audio_context = audioContext;
    window.emgl_audio_worklet_callback(success ? 0 : 1);
});

When a node is created, its UserData parameter is used to pass in a pointer to the node instance's state structure. Each node instance will run the same audio process function, but each with a different state structure. Supporting code is in audioutil.js; some details for using the code appear at the top of that file.

To run example 4, click on “Play a Tone” and you should hear a sine tone. The “Soft” and “Loud” buttons will change the amplitude. Click “Play a Tone” again and you should hear a second sine tone. Each click adds a new tone to the mix by creating a new audio node. Note that “Soft” and “Loud” affect only the first tone.

A new checkbox, handled by the toggle_audio function in example4.html, suspends or resumes the audio context.

The next example will try to clean up and generalize this example.

Here, I present a more complete example and flesh out the growing “framework.” I use quotes here because the goal is only to provide some useful and reusable functions supporting this particular style of creating web audio nodes with WASM. This example is even longer than Example 4, so if you want to learn the basics of creating and running audio nodes in C++ and WASM, you might want to study the more basic and more concrete code in earlier examples.

Ideally, each type of audio node would be compiled as its own WASM module, but the generated code always loads the module as Module, so we would need a way to give each separate compilation a different global name. Even if that were solved, we have no way to hand an audio context to the WASM module, so each module would create a separate audio context. As far as I can tell, interconnected nodes have to be created in the same context.

The alternative is to pretend that there is just one type of node, which we will call an awnode, but when we create the audio node, we get to provide a void *UserData, so we'll pass in a pointer to an instance of class Awnode. Then, we can subclass Awnode to make as many different “node types” as we want. It’s a hack, but it works within the limitations we are facing in our understanding of emscripten.

To create a node, the application calls audio_node_create(name) from JavaScript, which invokes audio_node_create(name) in C++. This function looks up name in a table of constructor functions and runs the constructor to create an instance of the named subclass of Awnode. Then, emscripten_create_wasm_audio_worklet_node is called to make an actual audio node with the signal processing callback awprocess and UserData set to the instance. When the callback is called, it invokes the process method on the instance.
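The lookup-and-dispatch scheme just described can be sketched in portable C++ without Emscripten. This is a simplified sketch, not the code in awutil.cpp: the names Awnode, register_audio_worklet_class, audio_node_create, audio_nodes and awprocess follow the text, but their signatures here are assumptions. In particular, the real awprocess receives AudioSampleFrame arrays, and the real audio_node_create also calls emscripten_create_wasm_audio_worklet_node, which is omitted. ConstNode and const_constructor are hypothetical stand-ins for a class like Sinetone.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Base class: every "node type" is a subclass of Awnode.
class Awnode {
public:
    virtual ~Awnode() {}
    // Returns false when the node is finished.
    virtual bool process(float *out, int n) = 0;
};

// Hypothetical subclass standing in for Sinetone; writes a constant.
class ConstNode : public Awnode {
public:
    bool process(float *out, int n) override {
        for (int i = 0; i < n; i++) out[i] = 0.5f;
        return true;
    }
};

typedef Awnode *(*Constructor)();
static std::map<std::string, Constructor> constructors;  // name -> factory
static std::vector<Awnode *> audio_nodes;                 // node_id -> instance

void register_audio_worklet_class(const std::string &name, Constructor c) {
    constructors[name] = c;
}

// Simplified callback: one function serves every node, and userData is
// cast back to the instance so the virtual call picks the right behavior.
bool awprocess(float *out, int n, void *userData) {
    Awnode *awn = static_cast<Awnode *>(userData);
    assert(awn != nullptr);
    return awn->process(out, n);
}

// Looks up the constructor, creates the instance, stores it, and returns
// its index as node_id, or -1 if the name was never registered.
int audio_node_create(const std::string &name) {
    auto it = constructors.find(name);
    if (it == constructors.end()) return -1;
    Awnode *awn = it->second();
    audio_nodes.push_back(awn);
    return (int)audio_nodes.size() - 1;
}

Awnode *const_constructor() { return new ConstNode(); }
```

Because every node shares one callback, the userData pointer is the only thing that distinguishes nodes; the cast back to Awnode * plus a virtual process() call gives each "node type" its own behavior.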

To access the instance for parameter updates or other operations, the instance address is stored in the table audio_nodes and the table index is returned to the JavaScript caller as a node identifier or “node_id”. A JavaScript global variable, window.emgl_audio_node, is used to return the audio node as a second return value. The caller can use the audio node directly to make audio connections. The node_id is used in calls to update the audio node state, described in the following paragraphs.

Since audio processing is on its own thread (associated with the audio context), we must be careful updating parameters of unit generators. The scheme I adopted is best illustrated by describing a particular update: setting the frequency of a sine tone generator. Recall that a node_id was returned to the caller when the sine tone generator was created. To update the frequency to 440 Hz, the main thread in JavaScript calls sinetone_set_freq(node_id, 440.0). This function is implemented in C++ in the WASM module.

The sinetone_set_freq function finds an instance of Awnode at audio_nodes[node_id]. Every Awnode has a message queue implemented in C++. Messages are simply structs where the first field is typically an “opcode” (enum value) that identifies the message type. The rest of the message consists of parameters. For the Sinetone class, the message struct is simply the following:

enum Opcode : int32_t { SET_GAIN = 0, SET_FREQ = 1 };
struct Sinetone_msg {
    Opcode opcode;
    float val;
};

and sinetone_set_freq looks like this:

void sinetone_set_freq(int id, float hz) {
    Sinetone_msg msg;
    msg.opcode = SET_FREQ;
    msg.val = hz;
    send_message_to_node(id, 'sntn', (char *) &msg);
}

send_message_to_node enqueues the message. Leaving out some consistency checks, it looks like:

void send_message_to_node(int id, int class_id, char *msg) {
    Awnode *awn = audio_nodes[id];
    assert(awn->class_id == class_id);
    Pm_Enqueue(awn->queue, msg);
}

To make sure that sinetone messages only go to instances of class Sinetone, we store a unique class identifier in every instance and pass the expected class_id to send_message_to_node (a four-letter multi-character constant, which in this case packs the ASCII letters 'sntn' into a 32-bit integer). The assert checks for an instance of the expected type.
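The value of a multi-character constant such as 'sntn' is implementation-defined in C and C++ (and GCC warns about it), so a portable alternative is a small constexpr helper that packs the four characters explicitly. The sketch below assumes the first character goes in the most significant byte, which is what GCC and Clang (the compiler behind Emscripten) produce for 'sntn'; the names fourcc and SINETONE_CLASS_ID are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Packs four ASCII characters into a 32-bit integer, first character in the
// most significant byte. Unlike a multi-character literal, the result does
// not depend on the compiler.
constexpr int32_t fourcc(const char (&s)[5]) {
    return (int32_t)(((uint32_t)(unsigned char)s[0] << 24) |
                     ((uint32_t)(unsigned char)s[1] << 16) |
                     ((uint32_t)(unsigned char)s[2] << 8) |
                     (uint32_t)(unsigned char)s[3]);
}

constexpr int32_t SINETONE_CLASS_ID = fourcc("sntn");
static_assert(SINETONE_CLASS_ID == 0x736E746E, "'s' 'n' 't' 'n' = 73 6E 74 6E");
```

With such a helper, the sender and the class would both use fourcc("sntn"), so the assert in send_message_to_node compares the same integer regardless of compiler.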

In the audio processing callback awprocess, the first thing we do is deliver any pending messages from the instance’s queue. The code looks like:

EM_BOOL awprocess(int numInputs, const AudioSampleFrame *inputs,
                  int numOutputs, AudioSampleFrame *outputs,
                  int numParams, const AudioParamFrame *params,
                  void *userData) {
    char msg_buffer[64];
    Awnode *awn = (Awnode *) userData;
    // process all incoming messages:
    while (Pm_Dequeue(awn->queue, msg_buffer)) {
        awn->message_handler(msg_buffer);
    }
    // run the sample processing method:
    if (!awn->process(numInputs, inputs, numOutputs, outputs,
                      numParams, params)) {
        ... clean up when node is finished ...
    }
    return EM_TRUE;
}

This gets the data into Sinetone’s message handler, which looks like:

void Sinetone::message_handler(char *msg) {
    Sinetone_msg *stm = (Sinetone_msg *) msg;
    if (stm->opcode == SET_GAIN) {
        gain = stm->val;
    } else if (stm->opcode == SET_FREQ) {
        // radians per sample, assuming a 48 kHz sample rate
        phase_inc = stm->val * M_PI * 2 / 48000;
    }
}

So finally, the phase_inc member variable of our Sinetone, which determines its frequency, is set. Note that message handling always takes place synchronously on the audio thread in between calls to the (audio) process method.
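The message handler and the phase increment can be tried outside the browser. The sketch below is a self-contained Sinetone that handles the same SET_GAIN and SET_FREQ messages (redefined so the block compiles on its own) and renders a block of samples. It differs from the code above in a few assumed ways: the sample rate is passed to the constructor instead of being hard-coded as 48000, the message bytes are copied with memcpy rather than cast to Sinetone_msg * (which avoids alignment and aliasing questions), the phase is wrapped to stay below 2π, and process() takes a plain float buffer instead of Emscripten's AudioSampleFrame.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <cstring>

enum Opcode : int32_t { SET_GAIN = 0, SET_FREQ = 1 };
struct Sinetone_msg {
    Opcode opcode;
    float val;
};

constexpr double TWO_PI = 6.283185307179586;

class Sinetone {
public:
    explicit Sinetone(float sample_rate) : sr(sample_rate) {}

    // Called on the audio thread, between blocks, for each dequeued message.
    void message_handler(const char *msg) {
        Sinetone_msg stm;
        std::memcpy(&stm, msg, sizeof stm);
        if (stm.opcode == SET_GAIN) {
            gain = stm.val;
        } else if (stm.opcode == SET_FREQ) {
            // radians advanced per sample = 2 * pi * f / sample_rate
            phase_inc = stm.val * TWO_PI / sr;
        }
    }

    // Simplified mono process method: writes n samples, never finishes.
    bool process(float *out, int n) {
        for (int i = 0; i < n; i++) {
            out[i] = (float)(gain * std::sin(phase));
            phase += phase_inc;
            if (phase >= TWO_PI) phase -= TWO_PI;  // keep phase small for precision
        }
        return true;
    }

private:
    double sr;
    double phase = 0.0;
    double phase_inc = 0.0;
    float gain = 1.0f;
};
```

For example, at 48000 Hz a 12000 Hz tone advances π/2 radians per sample, so with a gain of 0.5 the first four samples are approximately 0, 0.5, 0 and -0.5.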

Be careful if you make your own FIFO for messages because the sender and receiver are on different threads, and you cannot use locks for a critical section where you add data and update pointers.

The implementation here is almost a direct copy from PortMidi, which has been using the same code for more than a decade. The algorithm cleverly relies only on atomic reads/writes to 32-bit words with no special instructions like compare-and-swap (CAS).

One caveat: PortMidi's FIFOs include special checks to make sure overflows do not go unnoticed, but my example code assumes overflow never happens.
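To make the lock-free idea concrete, here is a sketch that is not PortMidi's code: it is a standard single-producer, single-consumer ring buffer written with C++11 std::atomic. Like the FIFO described above, it uses only atomic loads and stores of 32-bit indices, with no compare-and-swap and no locks. The producer (the main thread, where Pm_Enqueue is called) owns head, the consumer (the audio thread, where Pm_Dequeue is called in awprocess) owns tail, and acquire/release ordering makes sure a message's bytes are visible before the index that publishes it. Messages have a fixed size, like the 64-byte msg_buffer in awprocess. The class name SpscQueue and its methods are hypothetical, and unlike PortMidi this version simply reports a full queue by returning false.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <cstring>

// Single-producer, single-consumer FIFO of fixed-size messages.
// Indices grow freely (wrapping at 2^32) and are masked to find the slot.
template <int CAPACITY, int MSG_BYTES>
class SpscQueue {
    static_assert(CAPACITY > 0 && (CAPACITY & (CAPACITY - 1)) == 0,
                  "CAPACITY must be a power of 2");
    char slots[CAPACITY][MSG_BYTES];
    std::atomic<uint32_t> head{0};  // next slot to write; only the producer stores it
    std::atomic<uint32_t> tail{0};  // next slot to read; only the consumer stores it

public:
    // Producer side. Returns false if the queue is full.
    bool enqueue(const void *msg) {
        uint32_t h = head.load(std::memory_order_relaxed);
        uint32_t t = tail.load(std::memory_order_acquire);
        if (h - t == (uint32_t)CAPACITY) return false;  // full
        std::memcpy(slots[h & (CAPACITY - 1)], msg, MSG_BYTES);
        // Release: the message bytes become visible before the new head.
        head.store(h + 1, std::memory_order_release);
        return true;
    }

    // Consumer side. Returns false if the queue is empty.
    bool dequeue(void *msg) {
        uint32_t t = tail.load(std::memory_order_relaxed);
        uint32_t h = head.load(std::memory_order_acquire);
        if (h == t) return false;  // empty
        std::memcpy(msg, slots[t & (CAPACITY - 1)], MSG_BYTES);
        // Release: the producer may reuse the slot only after we copied it out.
        tail.store(t + 1, std::memory_order_release);
        return true;
    }
};
```

Because each index has exactly one writer, no read-modify-write instruction is needed; this is the same property that lets the PortMidi design avoid CAS.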

As mentioned earlier, I used Embind to create function call interfaces to access C++ functions from JavaScript.

In this example, I moved all the EMSCRIPTEN_BINDINGS into relevant source files, e.g. the binding for sinetone_set_freq is now created in sinetone.cpp, and there is no EMSCRIPTEN_BINDINGS in the main example5.cpp file. In retrospect, this is obvious, but it is not documented that each use of EMSCRIPTEN_BINDINGS(name) requires a unique symbol for name. Otherwise, one or the other set of bindings will be silently dropped.

Putting it together, our main program, example5.cpp, is now quite simple. We just have to register the classes we want to use:

int main() {
    srand(time(NULL));
    assert(!emscripten_current_thread_is_audio_worklet());
    register_audio_worklet_class("sinetone", &sinetone_constructor);
    register_audio_worklet_class("noise", &noise_constructor);
    main_has_run = true;
}

The assignment to main_has_run is used for consistency checking when worklet_create is called. The constructor functions are very simple and are included in sinetone.cpp and noise.cpp. For example, here is the one in sinetone.cpp:

Awnode *sinetone_constructor() { return new Sinetone(); }

To run this example, go to the example5/web directory and run ../webserver 8052 (I continue to restart the server on a new port each time I recompile the WASM module to make sure we do not get a cached version). Then, visit localhost:8052/example5.html.

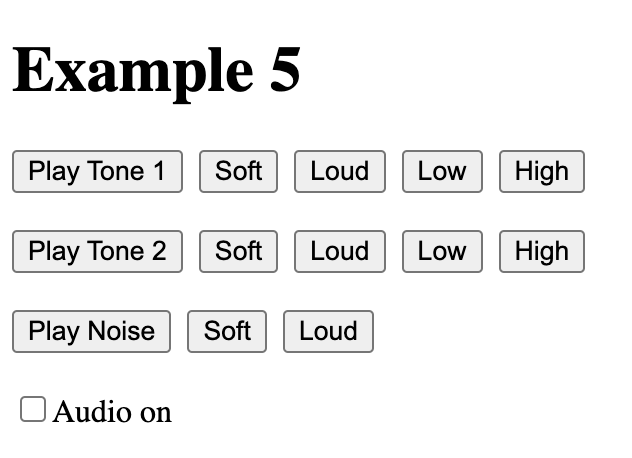

The screen looks like this:

emsdk_env.sh has a chicken-and-egg problem: it uses shell variables that it also sets, so if you want to switch to another emsdk directory or simply rename one that you are using, emsdk_env.sh will not run in the new location. emsdk_env.sh is part of the installer, so it could easily check to see if you have installed emscripten before trying to configure it.

The -sAUDIO_WORKLET=1 switch in emscripten creates code that loads a module accessed as Module. It is not clear (is it even possible?) how to use a different name to allow multiple modules, but it is very limiting to say you can only have one audio node implemented with WASM.

When using EMSCRIPTEN_BINDINGS(id), each instance across all compilation units must use a unique symbol for id. The function of, or requirements for, id are not mentioned in the documentation, and failing to use different ids (e.g. just using my_module as suggested by the documentation example, but in multiple places) will silently fail to create (some) function interfaces.

Currently, the numbers of inputs, outputs, and channels are fixed in audio_node_create() in awutil.cpp. It should be simple to pass in parameters to allow for other configurations. That might allow a caller to instantiate a "sinetone" with 4 audio inputs (!), but it seems that a good design would somehow allow additional parameters to audio_node_create(), pass these into the constructor function to configure the particular instance of Awnode, and finally query the instance to get the number of inputs, number of outputs, channel counts, etc., to pass on to emscripten_create_wasm_audio_worklet_node().
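One way to realize that design is to give Awnode virtual methods that describe its shape and to build the node options from a freshly constructed instance. Everything here is hypothetical: Params, NodeOptions, options_for and the num_inputs, num_outputs and output_channels methods are illustrative names, and NodeOptions only mirrors the kind of fields (number of inputs, number of outputs, per-output channel counts) that a Web Audio worklet node needs. Check emscripten/webaudio.h for the actual options struct accepted by emscripten_create_wasm_audio_worklet_node().

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-in for the options passed when creating the worklet node.
struct NodeOptions {
    int numberOfInputs;
    int numberOfOutputs;
    int *outputChannelCounts;  // one entry per output
};

typedef std::map<std::string, float> Params;  // hypothetical creation parameters

class Awnode {
public:
    virtual ~Awnode() {}
    virtual int num_inputs() const { return 1; }
    virtual int num_outputs() const { return 1; }
    virtual int output_channels(int /*output*/) const { return 1; }
};

class Sinetone : public Awnode {
public:
    // Reads an optional "channels" parameter; defaults to mono.
    explicit Sinetone(const Params &p) {
        auto it = p.find("channels");
        channels = (it == p.end()) ? 1 : (int)it->second;
    }
    int num_inputs() const override { return 0; }  // a generator has no inputs
    int output_channels(int) const override { return channels; }

private:
    int channels;
};

// Queries the instance and fills in the options. The caller owns `counts`,
// which backs opts.outputChannelCounts and must outlive node creation.
NodeOptions options_for(const Awnode &awn, std::vector<int> &counts) {
    counts.clear();
    for (int i = 0; i < awn.num_outputs(); i++) counts.push_back(awn.output_channels(i));
    NodeOptions opts;
    opts.numberOfInputs = awn.num_inputs();
    opts.numberOfOutputs = awn.num_outputs();
    opts.outputChannelCounts = counts.data();
    return opts;
}
```

With this shape, audio_node_create(name, params) would construct the instance first and only then create the worklet node, so the instance, not audio_node_create(), decides the configuration.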

There is also no message delivery from audio nodes back to JavaScript. I think this can be done relatively simply by creating a single message queue using Pm_QueueCreate. Any node can enqueue a message including the node’s id (which will have to be stored in a new member variable and initialized when the instance is created). The main JavaScript thread can poll the queue through a simple procedural interface, or maybe there is a way to activate a JavaScript callback function when the queue is non-empty.

I still need to think about how FIFO overflow should be handled. For testing, it seems like overflow should result in a hard crash that cannot go unnoticed, but in production, maybe just dropping a message is better.
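Both policies can live behind one small function. This is a minimal sketch, assuming an enqueue operation that returns false when the FIFO is full: a CRASH policy aborts immediately for testing, and a DROP policy discards the message and counts the loss for production. OverflowPolicy, send_or_handle_overflow and TinyQueue are hypothetical names; TinyQueue is a single-threaded stand-in used only so the sketch runs on its own, and real code would pass the lock-free FIFO instead.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <cstdlib>

enum class OverflowPolicy { CRASH, DROP };

// Works with any queue type that has `bool enqueue(const void *)`.
template <typename Queue>
bool send_or_handle_overflow(Queue &q, const void *msg, OverflowPolicy policy,
                             uint32_t &dropped) {
    if (q.enqueue(msg)) return true;
    if (policy == OverflowPolicy::CRASH) {
        std::fprintf(stderr, "message queue overflow\n");
        std::abort();  // impossible to miss while testing
    }
    dropped++;  // count the loss; make this atomic if another thread reads it
    return false;
}

// Single-threaded stand-in with room for two messages.
struct TinyQueue {
    int count = 0;
    int capacity = 2;
    bool enqueue(const void *) {
        if (count == capacity) return false;
        count++;
        return true;
    }
};
```

A drop counter that the main thread reports periodically keeps the production behavior quiet on the audio thread while still making overflow visible.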

I would still be stuck without helpful hints from Hongchan Choi at Google, sbc100 via GitHub (Sam Clegg) and ad8e via GitHub (Kevin Yin).