Research Groups

Research Groups Principal Investigators

Principal Investigators Technical Area: Video Surveillance and Monitoring (VSAM)

Technical Area: Video Surveillance and Monitoring (VSAM)  Technical Agent

Technical Agent  Project Details

Project Details

Technical Objectives

Technical Objectives The expected contributions of our work under this program are based in part on the following innovative claims and insights:

Military Relevance

Military Relevance

Technical Approach

Technical Approach In describing our approach we divide the technologies to be developed into those concerned with the human-form and action executed by a single (or small number of) individual(s), and those focused on the general problem of detecting or recognizing actions in video imagery.

Presupposing the existence of 3-dimensional information, Aaron Bobick has focused on methods of interpreting of action. For whole-body action the most relevant work is the use of phase-space constraints to detect actions. The basic idea is to learn during training that certain relationships between body parts are in effect only during a particular action. Then, recognizing action reduces to detection of which constraints hold over a given time interval. We have used this technology to successfully recognize 9 fundamental ballet movements from 3D data. The important point is that the set of phase-constraints is the representation of the action; this is as opposed to a particular space-time trajectory.

We note that recently we have integrated these areas in constructing a system that automatically tracks and recognizes the gestures of Tai Chi.

We intend to extend the tracking and modeling to handle outdoor situations with significantly less controlled environments. Our goal is to use multiple cameras and dynamic constraints (e.g. temporal smoothness and kinematic plausibility) to enhance the robustness of the algorithms. We also plan to incorporate statistical decision making into the phase-space constraint approach, including the HMMs to be discussed below.

This is the case even though there are no discernible features in each individual

frame. This simple demonstration indicates that geometric modeling is

not necessary to recognize action. And given the difficulties present

in computing the 3D structure, it might not even be desirable.

Recently, we have begun to develop appearance-based methods of recognizing action. We leave the discussion of statistically- and HMM-based techniques for the next section. Here we want to emphasize some recent work that focuses on motion patterns varying over time as an index for action recognition. The basic idea is to separate where motion is happening in the image (i.e. the shape of the motion field) from how the motion is moving (i.e. the movement of the motion field). By using simple motion differencing operations we create a mask image; statistical moments describing that shapes are used as an index into stored models of action. From the model we retrieve how the motion varies over time, and then test for agreement. The procedure is fast and entirely appearance-based. For more information go here.

The natural progression from this work was to explore the use of Hidden Markov Models for describing gesture. One innovative technique we introduced allowed for different features to be measured for each state: the basic idea is that no single representation may be valid for an entire action and the ``right'' features to measure may be different at different phases of a gesture.

Our most recent work has once again moved away from HMMs and back to explicit (or visible) states. The main idea is that for gesture it is often the case that the temporal characteristics of the desired action are known and that one would like to devise a parsing mechanism capable of segmenting such gestures from incoming video. We have demonstrated an approach which allows us to parse natural gestures generated by someone telling a story. The system is able to identify important or meaningful gesture based upon the temporal structure. Under this project we will develop more fully the idea of temporal structure modeling. Our principal observation is that often the temporal nature of an action is better specified than the spatial configuration. One potential application is a coding system where the most meaningful gestures are granted a greater share of the video bandwidth.

As a final note we mention our work on recognizing American Sign Language. Although this may not be considered natural gesture, it is a grammar controlled action, much like the assembly of a device or the unloading of particular type of object: Part A must be raised before Part B can be extracted. We intend to explore the use of HMMs in the understanding of such temporally structured gestures.

Our recent work on approximate world modeling is designed to address this problem. The basic idea is to use some potentially inaccurate but universally applicable general purpose vision routines to try to establish an approximate model of the world. This model, in turn is then used to establish the context and select the best vision routine to perform a given task. A fundamental innovation of this work is that approximate models can be augmented by extra-visual, contextual information. For example, a linguistic description might be available indicating an approximate position for an object. Because we assume a potentially inaccurate world model, that information can be incorporated directly.

Under this project we intend to extend our initial work on action recognition within this framework. We are already using a simple inference system that draws implications about visual features that might be present during a given action in a given context. Our goal is to extend this work by deriving a ``Past-Now-Future'' calculus (derived from Allen's temporal interval algebra) that would allow the system to reason about sequences of events that constitute an activity.

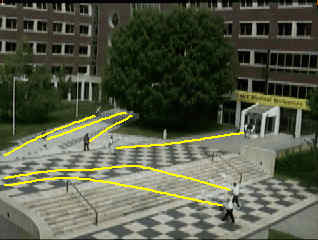

A fundamental problem to be addressed in any surveillance or monitoring scenario is that of information filtering: deciding whether a scene contains an activity or behavior worth analyzing. Our approach to the detection of such situations is to generate a statistical descrption of typical behavior such that typical scenarios are seen as being highly probable as being drawn from that distribution. With tuning, these same descriptions will cause much less typical situations to evaluate with a relatively lower probability.

We have begun to generate the tracking and trajectory mechanisms necessary to consrtuct the statistical descrption of activity. Also, since we are interested in interactions, we will employ the coupled HMMs we developed earlier to represent the statistical coupling between two people who are aware of each's presence. The goal is that the coupled HMMs will be sensitive enough to the pair's behavior that we can use it to detect "atypical interactions."

|

|

|

| Single frame from pedestrian scene | Segmented people | Tracked trajectories for behavior system |

Even apparently simple driving actions can be broken down into a long chain of simpler sub-actions. A lane change, for instance, may consist of the following steps (1) a preparatory centering the car in the current lane, (2) looking around to make sure the adjacent lane is clear, (3) steering to initiate the lane change, (4) the change itself, (5) steering to terminate the lane change, and (6) a final recentering of the car in the new lane. Under this project we will statistically characterize the sequence of steps within each action, and use the first few preparatory steps to identify which action is being initiated. Initial pilot studies indicate that driver's patterns are quite predictable and it is possible to both know almost instantly when a drive is going to turn in a particular direction, and to know if the control is following normal statistical patterns.

Some initial results:

|

|

| Single frame from vehicle scene | Tracked trajectories for behavior system |

Object detection in video is a natural extension of the problem of finding objects in single images. The need for robust, configurable systems that can locate objects in images is rapidly increasing due to the explosion in the amount of visual data available; searching and indexing this data is currently expensive.

Example-based learning techniques have proven to be successful in a wide variety of areas, from data mining to face detection in cluttered scenes. Through the use of examples, these algorithms avoid the need to explicitly model the object being searched for; rather, the model of the object is implicit in the patterns automatically learned by the algorithm. Thus, we feel this class of algorithms is well suited to the task we wish to investigate, in that their use will make the object detection system applicable to a wider variety of domains.

We have already developed a pedestrian detection system for static imagery. The basic approach is to construct a statstical basis set of the appearance of pedestrians in a scene and then to estimate the probability that a given region of an image a view of a person.

|

|

| Statistical training architecture | People found in static imagery. |

Clearly, one of the most important steps in detecting objects in video is to localize where motion is occuring in a frame. The simplest technique is to use change detection, or the differencing of consecutive frames of video, to see where motion has occured. Another, more sophisticated, approach to analyzing motion in video is represented by the class of optical flow algorithms. These algorithms automatically compute pixel-wise correspondences between grey level images. The information provided by the optical flow algorithms is more detailed than simple change detection and will be useful in subsequent steps of detecting objects. By integrating detection information across multiple frames, the system should exhibit a lower signal to noise ratio than by using isolated frames.

To actually determine what is and what is not a person, a classifier will be trained to recognize various measurements of people, including dynamic information not available in static images:

All these measurements will be combined into a feature vector that will be used to train a classifier to differentiate the Person from the Non-Person classes. To classify objects in this system, we will be using the support vector machine (SVM) classification technique developed by Vapnik, 1995. The support vector algorithm uses structural minimization to find the hyperplane that optimally separates two classes of objects; this is equivalent to minimizing a bound on generalization error.

A significant challenge to this approach is that the core pattern detection technique that we develop will have to be able to be applied to finding objects from a wide variety of dissimilar classes. Also, people, unlike faces, are non-rigid objects and therefore the space of patterns may be huge; the hope is that the support vector machinery will be able to recover this decision surface. The lack of a sufficient number of examples for this difficult, high dimension classification problem needs to be addressed as well. Furthermore, the fact that we are dealing with images in video that tend to be more noisy than single images will undoubtedly cause difficulties to arise in processing the data. We hope that certain preprocessing steps will lessen the negative impact of this noise.

A fundamental problem in understanding activities in video is simply keeping track if the individual entities. Much work in tracking only applies to situations with static backgrounds and non-occluding objects; unfortunately, only occasionally do such situations arise. Typically tracking requires consideration of complicated environments with difficult visual scenarios.

To address this problem we have developed a closed-world tracking technique that exploits local context to track objects. The fundamental idea is that one is not tracking an object against an unwanted, unknown distractor. Rather, there are no distractors. All objects and background must be tracked. The advantage is that in this situation it is possible to design custom trackers specifically suited to resolve a current ambiguity. We have initially tested this work in the the football domain with reasonable success. But to apply this technique to other domains we need to transform it from a fragile experimental system to a robust portable one. Under this project we would continue our development of the closed world tracking technology including developing a stand-alone system that could be easily included in other systems.

Publications

Publications Nonlinear Parametric Hidden Markov Models, Andrew Wilson and Aaron Bobick, to appear ICCV, Bombay, India, 1998

Human Action Detection using PNF Propagation of Temporal Constraints, Claudio Pinhanez and Aaron Bobick, MIT Media Lab PerCom TR 423, 1997

Dynamic Modeling of Human Motion, Chris Wren and A. Pentland, MIT Media Laboratory PerCom TR 415, 1997

"Linear Object Classes and Image Synthesis from a Single Example Image," (T. Vetter and T. Poggio). IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol 19, No. 7, July 1997, 733-742.

"Comparing Support Vector Machines with Gaussian Kernels to Radial Basis Function Classifiers," (B. Schoelkopf, K. Sung, C. Burges, F. Girosi, P. Niyogi, T. Poggio and V. Vapnik). Special Issue of IEEE Trans Signal Processing, in press.

"Multidimensional Morphable Models: A Framework for Representing and Matching Object Classes," (M. Jones and T. Poggio). International Journal of Computer Vision, submitted 1997.

Coupled HMMs for Complex Action Recognition, Brand, M., Oliver, N., and Pentland, A., CVPR, San Juan, Puerto Rico, 1997.

Movement, Activity, and Action: The Role of Knowledge in the Perception of Motion, Aaron Bobick, Philo. Proc. of Royal Society, 1997.

The Representation and Recognition of Action Using Temporal Templates James W. Davis and Aaron F. Bobick, IEEE CVPR (and MIT Media Lab TR #402), San Juan, Puerto Rico, June 1997.

Recovering the Temporal Structure of Natural Gesture, Andrew Wilson, Aaron Bobick and Justine Cassell, CVPR, San Juan Puerto Rico, 1997

Real-time Recognition of Activity Using Temporal Templates Aaron F. Bobick and James W. Davis, Workshop on Applications of Computer Vision (and MIT Media Lab TR #386) 1996

An Appearance-based Representation of Action Aaron F. Bobick and James W. Davis, International Conference on Pattern Recognition (and MIT Media Lab Percom TR #369) 1996

"Role of Learning in Three-dimensional Form Perception," (P. Sinha and T. Poggio). Nature, Vol. 384, No. 6608, 460-463, 1996.

"Image Representation for Visual Learning," (D. Beymer and T. Poggio). Science, 272, 1905-1909, 1996.

"Example-based Learning for View-based Human Face Detection," (K.-K. Sung and T. Poggio). In: Proceedings from Image Understanding Workshop, November 13-16, 1994 (Morgan Kaufmann, San Mateo, CA), 843-850

"Learning and Vision," (T. Poggio and D. Beymer). In: Early Visual Learning, S. Nayar and T. Poggio (eds.), Oxford University Press, 43-66, 1996.

Related links

Related links

Last modified: November 6, 1997

This is the site of a DARPA-sponsored contractor. The views and conclusions contained within this website are those of the web authors and should not be interpreted as the official policies, either expressed or implied, of the Defense Advanced Projects Agency or the United States Government.