Single Camera Surveillance Technologies

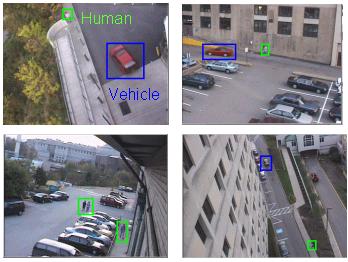

Keeping track of people, vehicles

and their interactions in a complex environment is a difficult task. The

first step of VSAM video understanding technology is to automatically "parse"

people and vehicles from raw video. We have developed robust routines for

detecting moving objects and tracking them through a video sequence using

a combination of temporal differencing and template tracking. Detected

objects are classified into semantic categories such as human, human group,

car, and truck using shape and color analysis, and these labels are used

to improve tracking using temporal consistency constraints. Further classification

of human activity, such as walking and running, has also been achieved.

Moving Object Detection

Detection of moving objects in video

streams is the first stage in automated video surveillance. Aside from

the intrinsic usefulness of being able to segment video streams into moving

and background components, detecting moving blobs provides a focus of attention

for recognition, classification, and activity analysis, making these later

processes more efficient since only "foreground" pixels need be considered.

CMU has developed three methods for moving object detection within the

VSAM testbed. A fourth approach to moving object detection from a

moving airborne platform has been developed by the Sarnoff Corporation.

This approach, based on image stabilization using special video processing

hardware, is described in the section on Airborne

Surveillance.

A Hybrid Algorithm for Moving Object

Detection

We have developed a hybrid algorithm

for detecting moving objects, by combining adaptive background subtraction

with three-frame differencing. We combine the two methods by using three-frame

differencing to determine regions of legitimate motion, followed by adaptive

background subtraction in those regions to extract the entire moving object.

This hybrid algorithm is very fast, and surprisingly effective -- it is

the primary algorithm used by the majority of the SPUs in the VSAM testbed

system.

Temporal Layers for Adaptive Background

Subtraction

A robust detection system should continue

to "see" objects that have ceased moving, and to disambiguate between overlapping

objects. This is usually not possible with traditional pixel-based motion

detection algorithms. We have developed a mechanism for maintaining

temporal object layers to allow greater disambiguation of moving objects

that stop for a while, are occluded by other objects, and then resume motion.

Layered detection is based on two

processes: pixel analysis and region analysis. The purpose of pixel analysis

is to determine whether a pixel is stationary or transient by observing

its intensity value over time. The technique is derived from the observation

that legitimately moving objects in a scene cause much faster intensity

transitions than changes due to lighting, meteorological, and diurnal effects.

Region analysis collects groups of labeled pixels into moving regions and

stopped regions,

and assigns them to spatio-temporal

layers. A layer management process keeps track of the various background

layers.

Background Subtraction from a Continuously

Panning Camera

Pan-tilt camera platforms maximize the

virtual field of view of a single camera without the loss of resolution

that accompanies a wide-angle lens. They also allow for active tracking

of an object of interest through the scene. However, moving object

detection using background subtraction is not directly applicable to a

camera that is panning and tilting, since all image pixels are moving .

It is well known that camera pan/tilt is approximately described

as a pure camera rotation, where apparent motion of pixels depends only

on the camera motion, and not on the 3D scene structure. In this respect,

the problems associated with a panning and tilting camera are much easier

than if the camera were mounted on a moving vehicle traveling through the

scene.

An initial background model is constructed

by methodically collecting a set of images with known pan-tilt settings.

The main technical challenge is how to register incoming video frames to

the appropriate background reference image in real-time. We have developed

a novel approach to registration that relies on selective integration

of information from a small subset of pixels that contain the most information

about the state variables to be estimated (2D projective transformation

parameters). The dramatic decrease in the number of pixels to process results

in a substantial speedup of the registration algorithm, to the point that

it runs in real-time on a modest PC platform. More details can be

found in Dellaert

and Collins, 1999.

Object Tracking

To begin building a temporal model of

activity, individual object blobs generated by motion detection are tracked

over time by matching them between frames of the video sequence. Given

a moving object region in a current frame, we determine the best match

in the next frame by performing image correlation matching, computed

by convolving the object's intensity template over candidate regions in

the new image. Due to real-time processing constraints in the VSAM

testbed system, this basic correlation matching algorithm is only

computed for "moving'' pixels, regions are culled that are inconsistent

with current estimates of object position and velocity , and imagery is

dynamically sub-sampled to ensure a constant computation time per match.

The tracker maintains multiple match hypotheses, and can split and merge

hypotheses when appropriate in order to disambiguate objects that pass

each other, causing temporary pixel occlusion in the image. More

details can be found in Lipton,

1999a and Collins

et.al., 2000.

Object Type Classification

The ultimate goal of the VSAM effort

is to identify individual entities. As a first step, two object

classification algorithms have been developed. The first uses view

dependent visual properties to train a neural network classifier to recognize

four classes: single human; human group; vehicles; and clutter. Each

neural network is a standard three-layer network, trained using the backpropagation

algorithm. Input features to the network are a mixture of image-based and

scene-based object parameters: image blob dispersedness, image blob area;

apparent aspect ratio, and camera zoom. This neural network classification approach

is fairly effective for single images; however, one of the advantages of

video is its temporal component. To exploit this, classification

is performed on each blob at every frame, and the results of classification

are kept in a histogram. At each time step, the most likely class label

for the blob is chosen, as described in Lipton,

Fujiyoshi and Patil, 1998.

The second method of object classification

uses linear discriminant analysis to provide a finer distinction between

vehicle types (e.g. van, truck, sedan) and colors. This method has

also been successfully trained to recognize specific types of vehicles,

such as UPS trucks and campus police cars. The method has two sub-modules:

one for classifying object shape, and the other for determining color (this

is needed because the color of an object is difficult to determine under

varying outdoor lighting). Each sub-module computes an independent discriminant

classification space using linear discriminant analysis (LDA), and calculates

the most likely class in that space using a weighted k-class nearest-neighbor

(k-NN) method. In LDA, feature vectors computed on training examples

of different object classes are considered to be labeled points in a high-dimensional

feature space. LDA then computes a set of discriminant functions,

formed as linear combinations of feature values, that best separate the

clusters of points corresponding to different object labels. See

Collins

et.al., 2000 for more details.

Another approach to distinguish between living objects (e.g. humans,

animals) and non-living objects (e.g. vehicles) is to measure the

rigidity of the moving object. Two different approaches can be found

in Lipton, 1999b

and in Selinger

and Wixson, 1998.

Activity Analysis

After detecting objects and classifying

them as people or vehicles, we would like to determine what these objects

are doing. In our opinion, the area of activity analysis is one of

the most important open areas in video understanding research. We have

developed two prototype activity analysis procedures. The first uses the

changing geometry of detected motion blobs to perform gait analysis of

walking and running human beings. The second uses Markov model

learning to classify simple interactions between multiple objects, such

as two people meeting, or a vehicle driving into the scene and dropping

someone off.

Gait Analysis

We have developed a "star'' skeletonization

procedure for analyzing human gaits. The star skeleton consists of the

centroid of a motion blob, and all of the local extremal points that

are recovered when traversing the boundary of the blob. For a human being,

the uppermost star skeleton segment is assumed to represent the torso,

and the lower left segment is assumed to represent a leg, which can

be analyzed for cyclic motion. The posture of a running person can

easily be distinguished from that of a walking person, using the angle

of the torso segment as a guide. Also, the frequency of cyclic motion of

the leg segments provides cues to the type of gait. See Fujiyoshi

and Lipton, 1998 for details.

Activity Recognition using Markov

Models

We have developed a prototype activity

recognition method that estimates activities of multiple objects from attributes

computed by low-level detection and tracking subsystems. The activity

label chosen by the system is the one that maximizes the probability of

observing the given attribute sequence. To obtain this, a Markov

model is introduced that describes the probabilistic relations between

attributes and activities. We tested the functionality of our method with

synthetic scenes which have human-vehicle interaction. In our test system,

continuous feature vector output from the low-level detection and tracking

algorithms is quantized into a discrete set of attributes and values

for each tracked blob

-

object class: Human, Vehicle,

HumanGroup

-

object action: Appearing, Moving,

Stopped, Disappearing

-

interaction: Near, MovingAwayFrom,

MovingTowards, NoInteraction

These features were quantized into symbols

and used as the input of the system. } The activities to be labeled are

1) A Human entered a Vehicle, 2) A Human got out of a Vehicle, 3) A Human

exited a Building, 4) A Human entered a Building, 5) A Vehicle parked,

and 6) Human Rendezvous. To train the activity classifier, conditional

and joint probabilities of attributes and actions are obtained by generating

many synthetic activity occurrences in simulation, and measuring low-level

feature vectors such as distance and velocity between objects, similarity

of the object to each class category, and a noise-corrupted sequence

of object action classifications.