Abstract

We present a 2D model-based approach to localizing the human body in images viewed from arbitrary and unknown angles. The central component is a statistical shape representation of the nonrigid and articulated body contours, in which a nonlinear deformation is decomposed based on the concept of parts. Several image cues are combined to relate the body configuration to the observed image, with self-occlusion treated explicitly. To accommodate large viewpoint changes, a mixture of view-dependent models is employed. Inference is done by direct sampling of the posterior mixture, using Sequential Monte Carlo (SMC) simulation enhanced with annealing and kernel moves. The fitting method is independent of the number of mixture components and does not require the preselection of a "correct" viewpoint. The models were trained on a large number of interactively labeled gait images. Preliminary tests demonstrated the feasibility of the proposed approach.
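To make the inference idea concrete, the following is a minimal toy sketch of annealed SMC sampling over a mixture posterior. It is not the paper's implementation. The scalar pose `x`, the Gaussian likelihood, the uniform prior over views, and all names (`annealed_smc`, `view_offsets`, `log_likelihood`, `log_prior`) are assumptions made for illustration. Each particle carries its own view index `k`, so no single view has to be chosen in advance, and a Metropolis-Hastings kernel move after each resampling step restores particle diversity.

```python
import numpy as np


def log_likelihood(k, x, y, view_offsets, noise_std):
    # Hypothetical image likelihood: view k with pose x predicts view_offsets[k] + x.
    pred = view_offsets[k] + x
    return -0.5 * ((y - pred) / noise_std) ** 2


def log_prior(x, prior_std):
    # Zero-mean Gaussian prior on the pose; the prior over views is uniform.
    return -0.5 * (x / prior_std) ** 2


def annealed_smc(y, view_offsets, n_particles=2000, n_steps=20,
                 noise_std=0.3, prior_std=1.0, step=0.3, seed=0):
    rng = np.random.default_rng(seed)
    view_offsets = np.asarray(view_offsets, dtype=float)
    n_views = len(view_offsets)

    # Initialise particles from the prior: a random view and a random pose.
    k = rng.integers(n_views, size=n_particles)
    x = rng.normal(0.0, prior_std, size=n_particles)

    # Annealing schedule: the likelihood exponent rises from 0 to 1.
    betas = np.linspace(0.0, 1.0, n_steps + 1)
    for b_prev, b in zip(betas[:-1], betas[1:]):
        # Reweight by the incremental likelihood power, then resample.
        logw = (b - b_prev) * log_likelihood(k, x, y, view_offsets, noise_std)
        w = np.exp(logw - logw.max())
        w /= w.sum()
        idx = rng.choice(n_particles, size=n_particles, p=w)
        k, x = k[idx], x[idx]

        # Kernel move: symmetric Metropolis-Hastings proposal on (view, pose)
        # targeting prior * likelihood**b.
        k_new = rng.integers(n_views, size=n_particles)
        x_new = x + rng.normal(0.0, step, size=n_particles)
        log_ratio = (
            b * log_likelihood(k_new, x_new, y, view_offsets, noise_std)
            + log_prior(x_new, prior_std)
            - b * log_likelihood(k, x, y, view_offsets, noise_std)
            - log_prior(x, prior_std)
        )
        accept = np.log(rng.random(n_particles)) < log_ratio
        k = np.where(accept, k_new, k)
        x = np.where(accept, x_new, x)
    return k, x


# Usage: three hypothetical views; the observation is best explained by the last.
views, poses = annealed_smc(3.0, [-3.0, 0.0, 3.0])
print(np.bincount(views, minlength=3) / views.size)
```

In this sketch the cost of each step depends on the number of particles rather than on the number of views, which mirrors the abstract's claim that fitting is independent of the number of mixture components.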

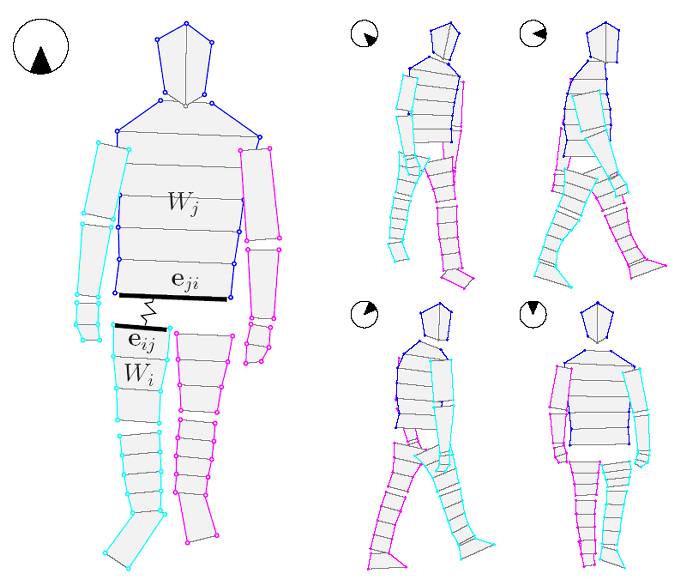

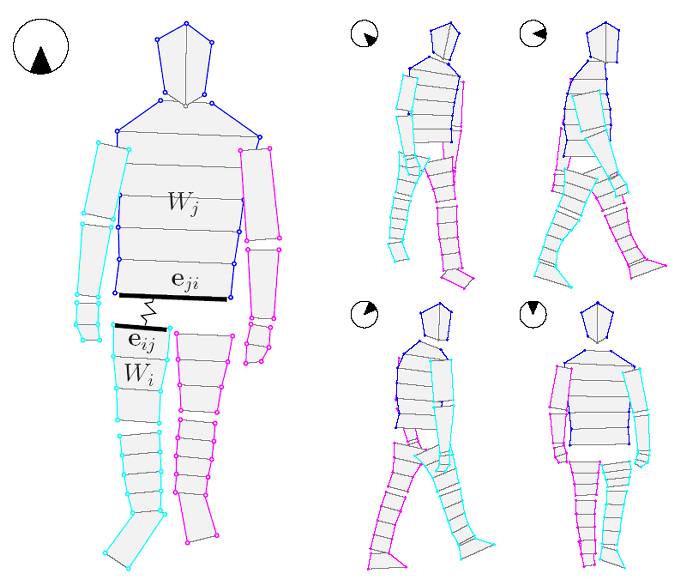

Figure 1. Topology of five basic component models. Landmarks are grouped into a collection of parts with depth order.

Publication

The paper at ICCV'05:

Results

Last update: Nov 18, 2005