15-494/694 Cognitive Robotics: Lab 3

I. Software Update and Initial Setup

- At the beginning of every lab you should update your copy of the cozmo-tools package. Do this:

$ cd ~/cozmo-tools

$ git pull

- For this lab you will need a robot, a charger, a Kindle, and some landmark sheets. You will not need light cubes for this lab, and in fact you do not want Cozmo to see any light cubes while you're performing this lab.

- Log in to the workstation.

- Make a lab3 directory.

- Download the file Lab3.py and put it in your lab3 directory. Read the file.

- Put Cozmo on his charger and connect to him; put him in SDK mode.

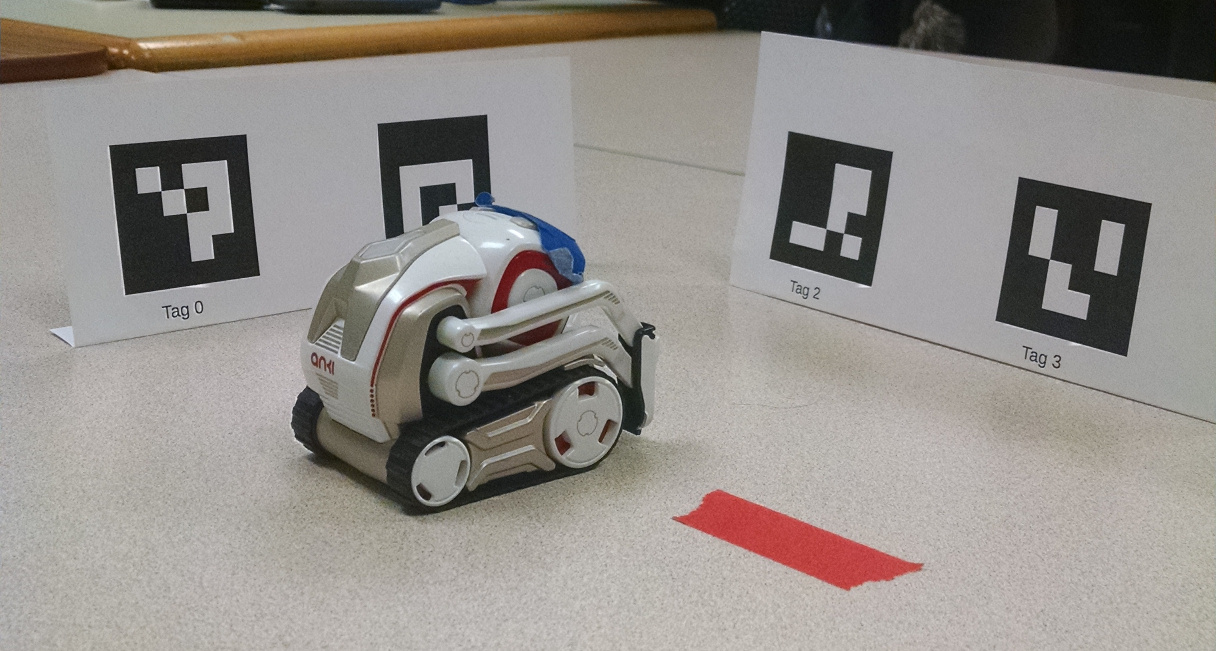

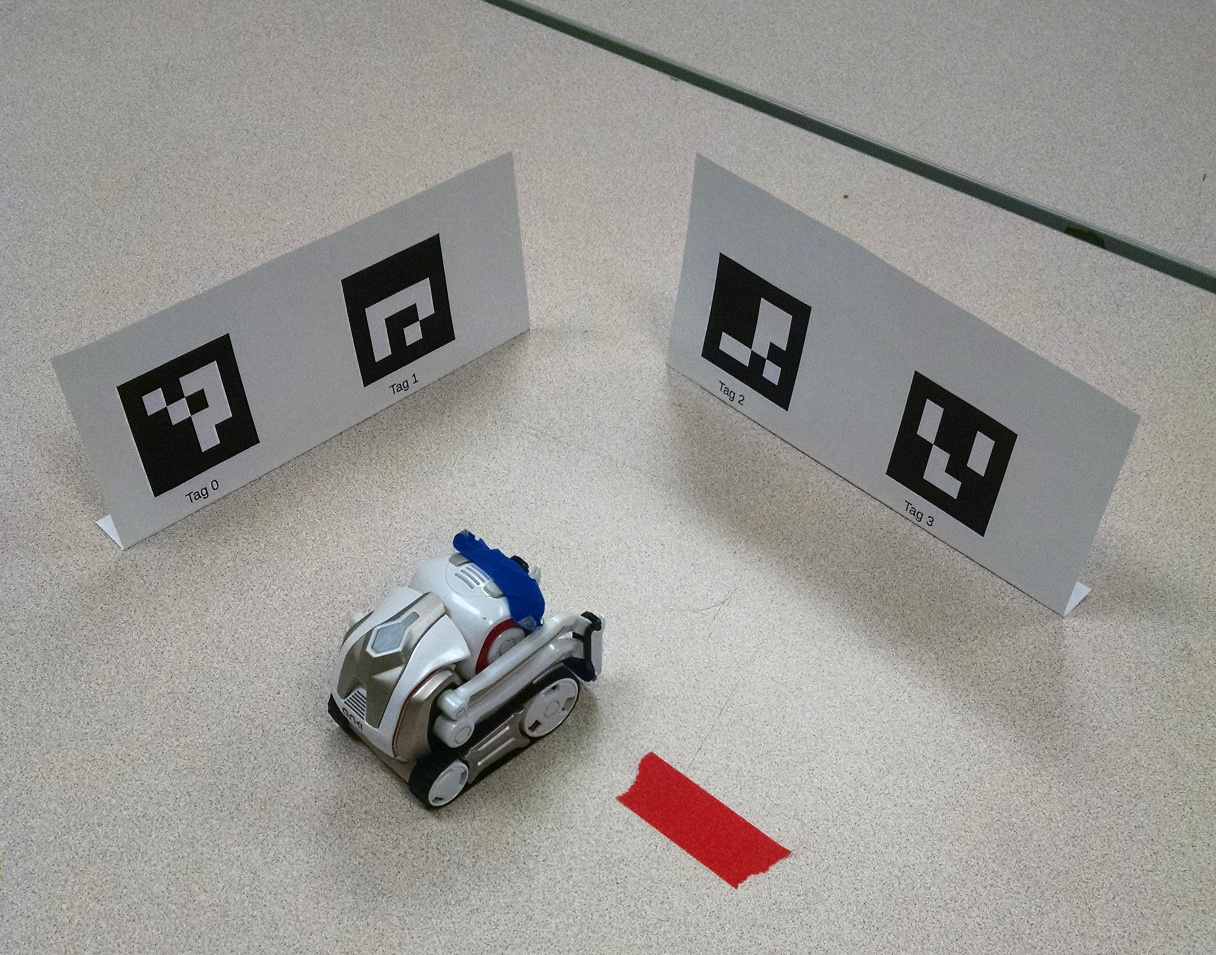

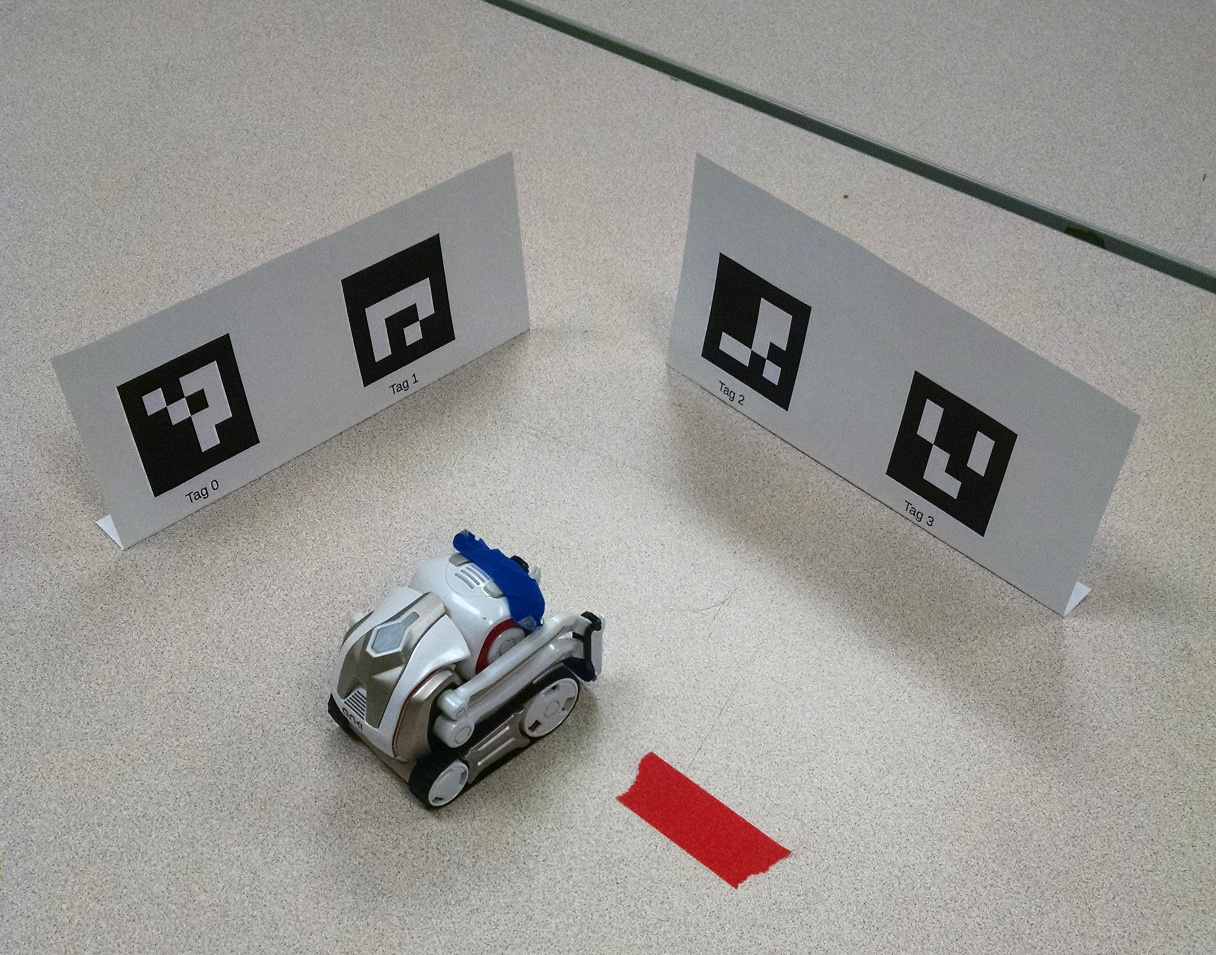

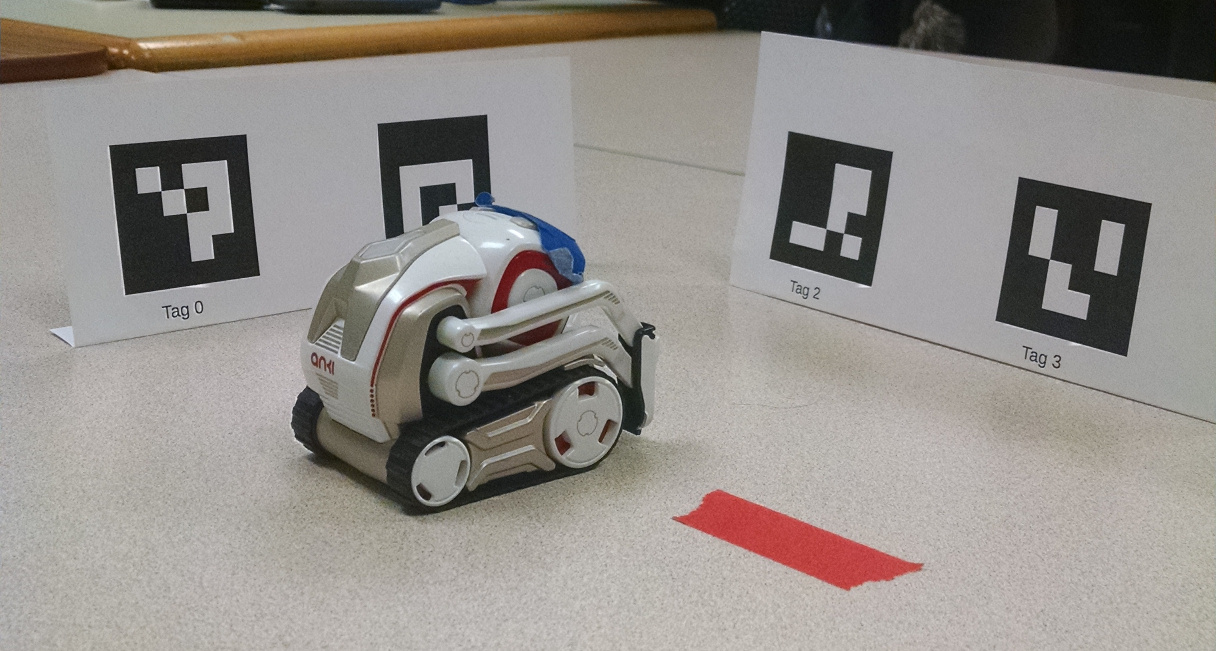

- Set up the first landmark sheet so that landmarks 2 and 3 are directly ahead of the robot, at a distance of 160 mm (about 6.3 inches) measured from his front axle. Fold the sheet at the bottom and use masking tape to secure it to the table.

- Set up the second landmark sheet perpendicular to the first one so that landmarks 0 and 1 run along the robot's left side, about 160 mm to the left of his midline.

- Open a shell on the workstation, cd to your lab3 directory, and type "simple_cli".

- Do runfsm('Lab3') to begin the lab.

- Type "show particle_viewer" to bring up the particle viewer.

- Notice that the particles are initially distributed randomly.

II. Localization Using Just Distance Features

- The particle filter implementation we're using is based on these lecture slides. You may find it helpful to review them if you want to understand the code in more detail.

- Lab3 sets up a particle filter that evaluates the particles based on their predictions about the distances to landmarks. For a single landmark, if we know only its sensor-reported distance z, then p(x|z) forms an arc of radius z centered on the landmark.

- Place an object (not a light cube!) in front of marker 2 so that Cozmo can only see marker 3.

- The particle filter viewer accepts keyboard commands. Press "z" to randomize the particles, then press "e" to ask the sensor model to evaluate the particles based on the current sensor information. Then press "r" to resample based on the particle weights. Do this several times. What do you see?

- With two landmarks visible we can narrow down our location a bit, but it helps if the landmarks are widely separated. Landmarks 2 and 3 are not that well separated, but they're good enough. Unblock landmark 2 so the robot can see both, and press "e" and "r" some more to observe the effect.

- The yellow triangle shows Cozmo's location and heading, and the blue triangle shows the variance in his heading estimate. Cozmo has no way to determine his heading from distance data alone. So even though his location estimate converged quickly, he still has no clue as to his heading. The particles in the display are all at roughly the correct distance, but they have random headings.

- What does Cozmo need to do to narrow down his heading estimate?
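To make the "e" and "r" steps above concrete, here is a minimal sketch of a distance-only sensor model and a resampler. It is not the ArucoDistanceSensorModel code in cozmo_fsm/particle.py; the (x, y, theta, weight) tuple layout, the Gaussian likelihood, the sigma value, and the function names are all assumptions for illustration. Notice that the weight never looks at theta, which is exactly why distance data alone cannot pin down Cozmo's heading.

```python
import math
import random

# Hypothetical particle layout: (x, y, theta, weight), x/y in mm, theta in radians.


def distance_weight(particle, landmark, z, sigma=20.0):
    """Gaussian likelihood of a measured distance z given the particle's predicted distance."""
    x, y, _theta, _w = particle  # heading is ignored: distance says nothing about it
    predicted = math.hypot(landmark[0] - x, landmark[1] - y)
    err = z - predicted
    return math.exp(-(err * err) / (2.0 * sigma * sigma))


def evaluate(particles, observations, landmarks, sigma=20.0):
    """The 'e' step: weight each particle by every visible landmark, then normalize."""
    weighted = []
    for p in particles:
        w = 1.0
        for lid, z in observations.items():
            w *= distance_weight(p, landmarks[lid], z, sigma)
        weighted.append((p[0], p[1], p[2], w))
    total = sum(p[3] for p in weighted)
    if total == 0.0:
        # Every particle is wildly inconsistent; fall back to uniform weights.
        return [(x, y, th, 1.0 / len(weighted)) for x, y, th, _ in weighted]
    return [(x, y, th, w / total) for x, y, th, w in weighted]


def resample(particles, rng=random):
    """The 'r' step: low-variance (systematic) resampling of normalized particles."""
    n = len(particles)
    step = 1.0 / n
    u = rng.uniform(0.0, step)
    cumulative = particles[0][3]
    i = 0
    out = []
    for m in range(n):
        target = u + m * step
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += particles[i][3]
        x, y, th, _ = particles[i]
        out.append((x, y, th, step))
    return out
```

For example, with a hypothetical landmark at (160, 0) and a reported distance of 160 mm, a particle at the origin keeps nearly all the weight, a particle at (100, 0) is almost certainly discarded by resampling, and two particles at the same spot but with different headings receive identical weights.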

III. Localization Using Distance Plus Motion

- Remove the charger, put Cozmo back at his starting position, and cover landmark 2 again. Randomize the particles, and press the "r" key a bunch of times. The particle headings are random.

- The particle viewer uses the w/s keys to move forward and backward. Drive Cozmo forward and backward and observe what happens to the particles. Although the particles still cover a broad arc, they are now all pointing toward the landmark. This is because particles whose headings were inconsistent with Cozmo's motion earned low weights and were eventually replaced by the resampling algorithm. Now Cozmo's estimated heading, being the weighted average of the particles, is closer to his true heading.

- Uncover landmark 2 so Cozmo can see both landmarks 2 and 3. What effect does the availability of a second landmark have on Cozmo's localization?

- The particle viewer uses the a/d keys to turn left and right. Turn to view the 0/1 landmarks, move toward or away from them, then turn back to the 2/3 landmarks, and so on. This provides more information to the sensor model and allows the particle filter to better discriminate among particles. What do you observe the particles doing when the robot moves this way?
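The heading correction described in this section comes from the motion model. Below is a minimal sketch, assuming the same hypothetical (x, y, theta, weight) particles and a simple drive-then-turn odometry step with Gaussian noise. It is not the cozmo_fsm motion model, which also corrects for the turn-center offset discussed in Section VI. Each particle moves along its own heading, so particles with wrong headings end up at positions whose predicted landmark distances no longer match the sensor, and the next evaluation penalizes them.

```python
import math
import random


def move_particles(particles, distance, dtheta, sd_dist=2.0, sd_theta=0.02, rng=random):
    """Drive each particle `distance` mm along its own heading, then turn it by dtheta radians.

    The Gaussian noise (hypothetical sd values) spreads the cloud the way real
    odometry error would.
    """
    moved = []
    for x, y, theta, w in particles:
        d = rng.gauss(distance, sd_dist)
        dt = rng.gauss(dtheta, sd_theta)
        nx = x + d * math.cos(theta)
        ny = y + d * math.sin(theta)
        # Wrap the new heading into [-pi, pi).
        nth = (theta + dt + math.pi) % (2.0 * math.pi) - math.pi
        moved.append((nx, ny, nth, w))
    return moved
```

For instance, two particles at the origin, one facing a hypothetical landmark at (160, 0) and one facing away, both predict a distance of 160 mm. After a noise-free 50 mm forward step they predict 110 mm and 210 mm respectively, so only one of them agrees with what Cozmo actually sees.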

IV. A Bearing-Based Sensor Model

- Lab3.py uses a class called ArucoDistanceSensorModel to weight particles. It's defined in cozmo_fsm/particle.py. Instead of distances, we could choose to use bearings to landmarks.

- Create a variant program Lab3a.py that uses ArucoBearingSensorModel instead. When only a single landmark is visible, the distance model distributes particles in an arc around the landmark, but the bearing model provides no position constraint. It simply forces all the particles to point toward the landmark. How big a difference does it make to have multiple landmarks in view? Let Cozmo see both landmarks 2 and 3, and hold down the "r" key for a while.
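To see why a single bearing constrains heading but not position, here is a minimal sketch of a bearing likelihood. It is not ArucoBearingSensorModel; the particle layout, the convention that bearings are in radians relative to the robot's heading (counterclockwise positive), and the 5-degree sigma are assumptions. The angular error is wrapped so that, say, +179 degrees and -179 degrees count as 2 degrees apart.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def bearing_weight(particle, landmark, measured_bearing, sigma=math.radians(5.0)):
    """Gaussian likelihood of a measured bearing given the bearing the particle predicts.

    Bearings are assumed to be in radians, relative to the robot's heading,
    counterclockwise positive.
    """
    x, y, theta, _w = particle
    predicted = math.atan2(landmark[1] - y, landmark[0] - x) - theta
    err = wrap_angle(measured_bearing - predicted)
    return math.exp(-(err * err) / (2.0 * sigma * sigma))
```

With a landmark dead ahead (measured bearing 0), a particle at the origin facing it and a particle 500 mm away that also faces it both get weight 1, while a particle at the origin facing sideways gets essentially 0: any position works, as long as the particle points at the landmark.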

V. A Combination Distance and Bearing Sensor Model

- There's no reason we can't combine distance and bearing information to have the best features of both. Write another variant program Lab3b.py that uses ArucoCombinedSensorModel.

- How does the particle filter behave now?

- Drive the robot around using the wasd keys, but keep it facing away from the landmarks so it cannot correct for odometry error. What do you observe about the particle cloud?
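One simple way to combine the two cues is to multiply a distance likelihood and a bearing likelihood for each landmark. The sketch below does that, assuming the distance and bearing errors are independent Gaussians with hypothetical sigmas; it is an illustration of the idea, not the ArucoCombinedSensorModel source. A particle now needs both the right distance and the right heading to score well.

```python
import math


def combined_weight(particle, landmark, z, measured_bearing,
                    sigma_dist=20.0, sigma_bearing=math.radians(5.0)):
    """Product of a distance likelihood and a bearing likelihood for one landmark.

    Treating the two errors as independent Gaussians is an assumption of this sketch.
    Particles are (x, y, theta, weight); bearings are radians relative to heading.
    """
    x, y, theta, _w = particle
    dx, dy = landmark[0] - x, landmark[1] - y
    dist_err = z - math.hypot(dx, dy)
    raw = measured_bearing - (math.atan2(dy, dx) - theta)
    bearing_err = math.atan2(math.sin(raw), math.cos(raw))  # wrap to [-pi, pi]
    return (math.exp(-dist_err ** 2 / (2.0 * sigma_dist ** 2))
            * math.exp(-bearing_err ** 2 / (2.0 * sigma_bearing ** 2)))
```

Against a hypothetical landmark at (160, 0) seen at 160 mm straight ahead, a particle at the right distance but facing sideways, and a particle facing the landmark from 500 mm away, both score near zero; only a particle that matches on both counts survives.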

VI. An Odometry Issue

- Note: this section doesn't involve use of the particle filter; you can put the landmarks away. The particle filter's motion model relies on Cozmo's built-in odometry to measure the robot's travel. But there is a problem with it.

- Cozmo's origin is located on the floor at the center of his front axle. However, when he turns in place, he rotates about a point between the front and back axles. Thus, although the position of Cozmo's center of rotation isn't changing (ignoring any wheel slippage), the position of his origin does change. To the motion model, it looks like Cozmo is traveling forward or backward.

- To see this, pick Cozmo up and put him back down, so that robot.pose.position is close to (0,0). Then do Turn(90).now() and re-check robot.pose. Try a series of 90 degree turns and check the pose after each one.

- Write code to estimate the distance R between Cozmo's origin and his center of rotation by making a bunch of 180 degree turns under program control and looking at robot.pose after each turn. Your program should collect this data and come up with a value for R.

- It may interest you to know that the motion model used by cozmo_fsm takes into account the fact that the robot's center of rotation is offset from the origin, and makes appropriate corrections.
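The geometry behind the R measurement is a chord of a circle: if the origin sits R from the center of rotation, an in-place turn through angle a moves the origin a straight-line distance of 2 R sin(a/2). For a 180 degree turn that is simply 2R, and for a 90 degree turn it is R times the square root of 2. The sketch below turns a list of recorded origin positions into an estimate of R plus a spread. How you record the positions is up to you; one option, following the steps above, is to read robot.pose.position.x and robot.pose.position.y after each turn. The function name and the assumption that every turn achieved exactly `turn_deg` degrees are hypothetical.

```python
import math
from statistics import mean, pstdev


def estimate_r(positions, turn_deg=180.0):
    """Estimate the distance R from Cozmo's origin to his center of rotation.

    positions: (x, y) origin positions in mm, one recorded before the first turn
    and one after each in-place turn. Assumes every turn was exactly turn_deg
    degrees; if the achieved angle differs, the estimate will be biased.
    """
    if len(positions) < 2:
        raise ValueError("need positions from at least one turn")
    half_angle = math.radians(turn_deg) / 2.0
    # Each consecutive pair of positions is a chord of length 2 * R * sin(a / 2).
    estimates = [math.dist(p, q) / (2.0 * abs(math.sin(half_angle)))
                 for p, q in zip(positions, positions[1:])]
    return mean(estimates), pstdev(estimates)
```

A large spread relative to the mean suggests wheel slippage or inconsistent turns, in which case more trials (or a smoother surface) should help.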

VII. Programming Problems

These are somewhat open-ended problems that will allow you to experiment a bit. We're more interested in seeing what you come up with than in getting a single "right" answer. You may do these problems either on your own or with one partner. Teams should not be larger than 2 persons.

- Write a FindClosestCube node that computes the distance from the robot to each cube and finds the cube that is closest. It should broadcast that cube using a DataEvent. Write another node that can receive this DataEvent and make Cozmo turn to face the closest cube. Then put everything together in a state machine.

- The cozmo-tools particle filter treats landmarks as points, so if the robot sees only a single ArUco tag it cannot fully localize itself. If it travels a bit and then spots another tag, the particle cloud should then collapse to a single tight cluster. Construct an illustration of this by configuring some physical landmarks and writing code to look at one landmark, turn 90 degrees and travel a bit, then turn 90 degrees again and look at the second landmark. Can Cozmo figure out where he is?

- Can Cozmo toss a ball? Write a Toss node to have him raise his lift as quickly as possible. (It should inherit from the MoveLift node in cozmo_fsm/nodes.py.) Make a little frame or basket out of paperclips, rubber bands, masking tape, etc., that can be affixed to his lift. The frame should be just large enough to hold a ping pong ball or a wad of paper or some other small object. How far can Cozmo toss it? How reliably can you control the direction of the toss?

If you can't raise the lift quickly enough to get a good toss, consider running one of the built-in animations that makes rapid lift gestures. The animation may be able to move the lift more quickly than a standard API call can.
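For the FindClosestCube problem above, the geometry can be worked out separately from the cozmo_fsm plumbing. The sketch below assumes you have already pulled the robot's (x, y, heading) and each cube's (x, y) out of the world map; how you obtain those values, and how you wrap the result in a DataEvent and a turn node, is not shown and is up to you. The function name and the dict-of-positions input are hypothetical. The returned turn angle is in radians, counterclockwise positive; convert it with math.degrees and check the sign convention of the turn node you use before relying on it.

```python
import math


def find_closest_cube(robot_pose, cubes):
    """Return (cube_id, distance_mm, turn_radians) for the nearest cube, or None if no cubes.

    robot_pose: (x, y, heading) with heading in radians.
    cubes: dict mapping a cube identifier to its (x, y) position in mm.
    """
    rx, ry, rheading = robot_pose
    best = None
    for cid, (cx, cy) in cubes.items():
        d = math.hypot(cx - rx, cy - ry)
        if best is None or d < best[1]:
            best = (cid, d, cx, cy)
    if best is None:
        return None
    cid, d, cx, cy = best
    # Bearing to the cube relative to the current heading, wrapped to [-pi, pi].
    raw = math.atan2(cy - ry, cx - rx) - rheading
    turn = math.atan2(math.sin(raw), math.cos(raw))
    return cid, d, turn
```

For a robot at the origin facing along +x, with hypothetical cubes at (300, 0), (0, 100), and (-200, -200), the function picks the cube at (0, 100), 100 mm away, with a 90 degree (pi/2) left turn needed to face it.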

Hand In

Collect the following into a zip file:

- The code you wrote to determine the distance R, and the value you came up with.

- The code you wrote for the programming problems, and some screenshots and a brief narrative to describe your results.

- The name of your partner if you worked in a team.

Hand in your work through AutoLab by next Friday.
