Practical Hypothesis Testing

Once we have specified the null and alternate hypotheses, $H_0$ and $H_a$, the generic procedure for hypothesis testing is as follows:

- We start with a probability of type-1 error $\alpha$ that we consider acceptable or, equivalently, with the desired confidence in the test (where the confidence is $1 - \alpha$). Let us call this target value $\alpha_z$. Typical $\alpha_z$ values are 0.05 or 0.01.

- We then derive the probability mass function (or probability density function) of the statistic that we are evaluating, assuming that the null hypothesis $H_0$ is true.

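- From this distribution, we compute the probability of type-1 error for different rejection regions. Since the rejection region is typically parameterized by a threshold $\theta$, this amounts to computing the $\alpha$ value for different thresholds $\theta$ until we find a threshold $\theta_r$ at which the computed $\alpha$ equals the desired $\alpha_z$. When the statistic is discrete (as with a count of heads), $\alpha$ usually cannot match $\alpha_z$ exactly, so we choose the threshold that gives the largest rejection region whose $\alpha$ does not exceed $\alpha_z$.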

- Finally, we check whether the value of the statistic computed from our sample falls in the rejection region defined by the threshold $\theta_r$: if it does, we reject the null hypothesis; otherwise, we accept it.
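As a minimal sketch of these four steps, the Python below assumes the coin example with $N = 10$ tosses, a null hypothesis that the coin is fair (so the number of heads $Y$ is binomial with $p = 0.5$ under $H_0$), and a right-sided rejection region $Y \geq \theta$. The helper names (`binomial_pmf`, `right_tail_alpha`, `find_right_threshold`) and the observed count `y_observed` are hypothetical, chosen only for illustration.

```python
from math import comb


def binomial_pmf(n: int, p: float) -> list[float]:
    """P(Y = k) for k = 0..n, where Y counts heads in n independent tosses."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def right_tail_alpha(pmf: list[float], theta: int) -> float:
    """Probability of type-1 error for the rejection region Y >= theta."""
    return sum(pmf[theta:])


def find_right_threshold(pmf: list[float], alpha_z: float) -> int:
    """Smallest theta whose region Y >= theta has alpha <= alpha_z.

    Scanning theta upward shrinks the rejection region, so the first theta
    that meets the bound gives the largest admissible region. For
    alpha_z >= 0 the loop always returns, because at theta = len(pmf) the
    region is empty (alpha = 0).
    """
    for theta in range(len(pmf) + 1):
        if right_tail_alpha(pmf, theta) <= alpha_z:
            return theta
    raise ValueError("alpha_z must be non-negative")


# Step 1: acceptable probability of type-1 error.
alpha_z = 0.05
# Step 2: distribution of the statistic under H0 (fair coin, 10 tosses).
pmf = binomial_pmf(10, 0.5)
# Step 3: threshold whose rejection region has alpha <= alpha_z.
theta_r = find_right_threshold(pmf, alpha_z)
print(theta_r, right_tail_alpha(pmf, theta_r))  # 9 0.0107421875
# Step 4: compare a (hypothetical) observed statistic with the threshold.
y_observed = 9
print("reject H0" if y_observed >= theta_r else "accept H0")
```

With $\alpha_z = 0.05$, the region $Y \geq 8$ would already give $\alpha = 56/1024 \approx 0.055$, so the search settles on $\theta_r = 9$ with $\alpha = 11/1024 \approx 0.011$. The gap between the achieved $\alpha$ and $\alpha_z$ arises because $Y$ is discrete.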

In addition to the rejection region, we may also be able to control the number of samples $N$. Typically, however, we will focus on the confidence.

Rejection regions, one-sided, and two-sided hypothesis testing

The rejection region $R$ is generally defined by comparing the statistic $Y$ against a threshold $\theta$. However, whether the rejection region contains only values below the threshold, only values above it, or values that are either below one threshold or above another depends on the alternate hypothesis.
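In symbols, writing $\mu$ for the mean of $Y$ under $H_0$, the three kinds of rejection region discussed here, and the probability of type-1 error that each one carries, can be summarized as:

$$
\begin{aligned}
\text{left-sided:}\quad & R = \{Y \le \theta_r\}, && \alpha = P(Y \le \theta_r \mid H_0) \\
\text{right-sided:}\quad & R = \{Y \ge \theta_r\}, && \alpha = P(Y \ge \theta_r \mid H_0) \\
\text{two-sided:}\quad & R = \{|Y - \mu| \ge \theta_r\}, && \alpha = P(Y \ge \mu + \theta_r \mid H_0) + P(Y \le \mu - \theta_r \mid H_0)
\end{aligned}
$$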

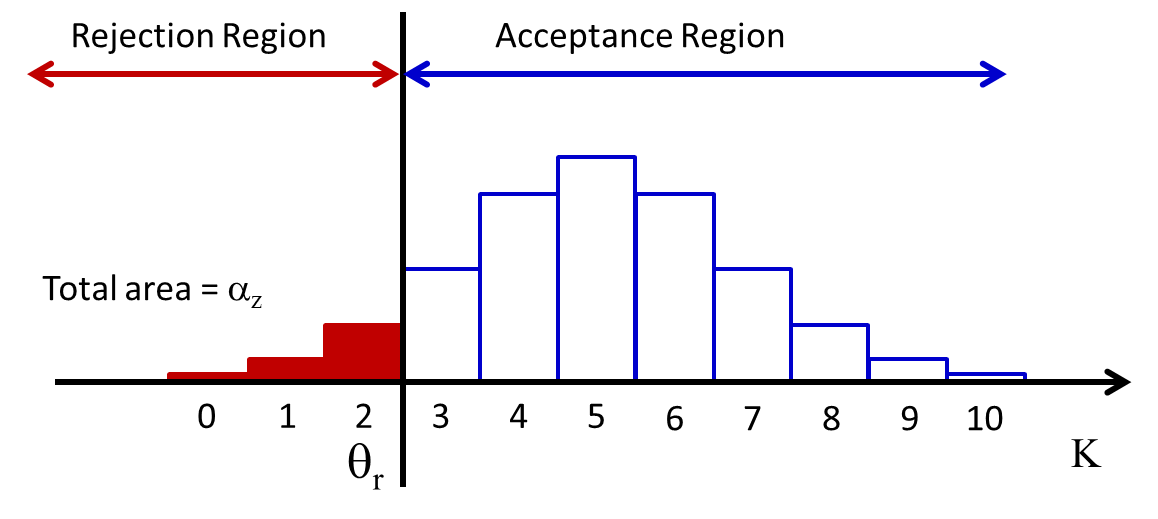

- Left-Sided Test: If the statistic $Y$ must lie below the threshold in order to reject the null hypothesis in favor of the alternate hypothesis, we have a left-sided test. In our coin example, if our claim is that $P(\text{heads}) < 0.5$, then we would want the number of heads in $N$ tosses to be less than some threshold. So we choose the threshold $\theta_r$ such that the total probability of $Y \leq \theta_r$ is no more than the desired $\alpha_z$. For example, for the coin problem we may require the total number of heads to be no more than 2. This is illustrated in the figure below.

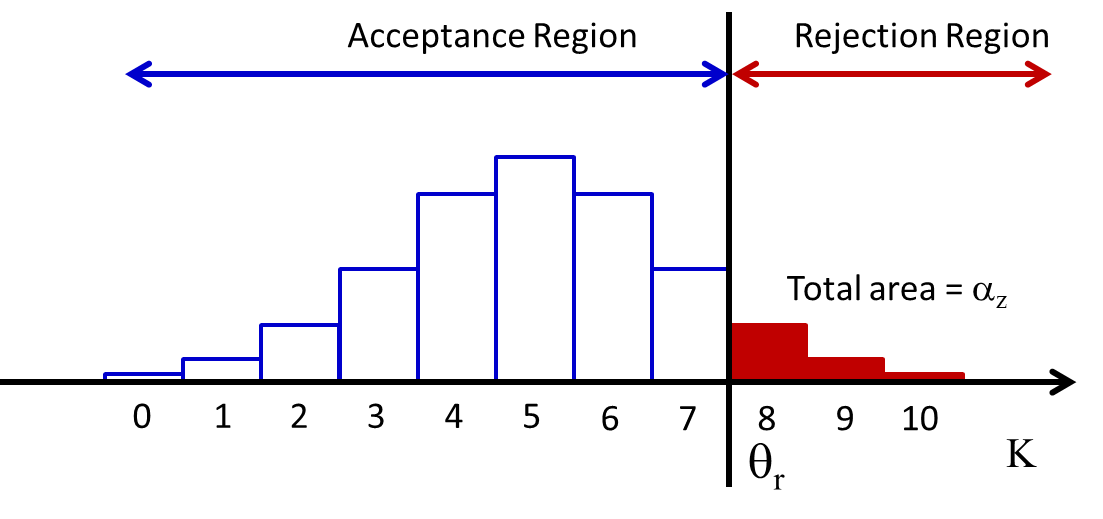

- Right-Sided Test: If the statistic $Y$ must lie above the threshold in order to reject the null hypothesis in favor of the alternate hypothesis, we have a right-sided test. In our coin example, if our claim is that $P(\text{heads}) > 0.5$, then we would want the number of heads in $N$ tosses to be more than some threshold. So we choose the threshold $\theta_r$ such that the total probability of $Y \geq \theta_r$ is no more than the desired $\alpha_z$. For example, for the coin problem we may require the total number of heads to be at least 8 to support our alternate hypothesis $H_a$. This is illustrated in the figure below.

- Two-Sided Test: Often, we will not propose a direction for the alternate hypothesis $H_a$, only that it contradicts the null hypothesis $H_0$. For instance, in our coin example, we may simply claim that the coin is not fair, without specifying whether $P(\text{heads}) > P(\text{tails})$ or the other way around. In this case, to reject $H_0$ we generally require the test statistic to be far from its mean $\mu$ (under $H_0$) in either direction, i.e., we reject $H_0$ if $|Y - \mu| \geq \theta_r$. We now choose $\theta_r$ such that the total probability of either $Y \geq \mu + \theta_r$ or $Y \leq \mu - \theta_r$ is no more than $\alpha_z$, i.e., $P(Y \geq \mu + \theta_r) + P(Y \leq \mu - \theta_r) \leq \alpha_z$. When the distribution of $Y$ is symmetric about its mean, the two tails have equal probability, and this simply becomes $P(Y - \mu \geq \theta_r) \leq \frac{\alpha_z}{2}$.

For the coin problem, for example, we may want the absolute difference between the total number of heads obtained and 5 (the expected number of heads in 10 tosses of a fair coin) to be at least 3 in order to decide that the coin is not fair. This is illustrated in the figure below, which also shows the two-sided probability of type-1 error.
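A small sketch for checking the three example regions from the coin problem ($Y \leq 2$, $Y \geq 8$, and $|Y - 5| \geq 3$ for $N = 10$ tosses of a fair coin): it sums the null-hypothesis probabilities over each region, then uses the symmetric-distribution shortcut (one tail may carry at most $\alpha_z/2$) to search for a two-sided threshold. The function names and the target $\alpha_z = 0.05$ are assumptions made for illustration.

```python
from math import comb

N, P_HEADS = 10, 0.5  # coin example: 10 tosses, fair coin under H0
pmf = [comb(N, k) * P_HEADS**k * (1 - P_HEADS) ** (N - k) for k in range(N + 1)]
mu = N * P_HEADS  # expected number of heads under H0 (5.0)


def alpha(region) -> float:
    """Type-1 error: total H0 probability of the outcomes y inside the region."""
    return sum(pmf[y] for y in range(N + 1) if region(y))


def left(y):  # left-sided example: at most 2 heads
    return y <= 2


def right(y):  # right-sided example: at least 8 heads
    return y >= 8


def two_sided(y):  # two-sided example: |Y - 5| >= 3
    return abs(y - mu) >= 3


for name, region in [("left", left), ("right", right), ("two-sided", two_sided)]:
    print(f"{name}: alpha = {alpha(region):.4f}")
# left: alpha = 0.0547
# right: alpha = 0.0547
# two-sided: alpha = 0.1094


def two_sided_threshold(alpha_z: float) -> int:
    """Smallest theta with P(Y - mu >= theta) <= alpha_z / 2 (assumes alpha_z >= 0).

    Valid here because Binomial(N, 0.5) is symmetric about mu, so the
    lower tail P(Y - mu <= -theta) has the same probability.
    """
    theta = 0
    while alpha(lambda y: y - mu >= theta) > alpha_z / 2:
        theta += 1
    return theta


theta_r = two_sided_threshold(0.05)
print(theta_r, alpha(lambda y: abs(y - mu) >= theta_r))  # 4 0.021484375
```

With $\alpha_z = 0.05$, the search gives $\theta_r = 4$, i.e., reject $H_0$ when $|Y - 5| \geq 4$, for a total two-sided $\alpha = 22/1024 \approx 0.021$; the example threshold of 3 corresponds to $\alpha = 112/1024 \approx 0.109$.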

Left- and right-sided tests are called one-sided tests.