Lecture 12: Theory of Variational Inference: Marginal Polytope, Inner and Outer Approximation

An introduction to the loopy belief propagation algorithm and the theory behind it, and to the mean-field approximation.

Recap of the message passing algorithm and its properties

In the previous lectures, we looked at exact inference algorithms and observed that they can be very inefficient. Hence, there is a need for more efficient inference algorithms. This lecture focuses on such algorithms, which are called approximate inference algorithms.

Inference in graphical models can be used to compute marginal distributions, conditional distributions, the likelihood of observed data, and the modes of the density function. We have already seen that exact inference can be carried out either by brute force (i.e., eliminating all the required variables in an arbitrary order) or by choosing a good elimination order so as to reduce the number of computations. In this context, the belief propagation (sum-product message passing) algorithm, run on a clique tree generated by a given variable elimination ordering, was introduced as an equivalent way of performing variable elimination. The overall complexity of the algorithm is exponential in the number of variables in the largest elimination clique generated by the chosen elimination order. The tree width of a graph was defined as one less than the smallest possible size of the largest elimination clique, taken over all possible elimination orderings. If we can find an optimal elimination order, we can reduce the cost of belief propagation; however, finding an optimal elimination order is itself NP-hard, and exact inference in general graphical models is NP-hard as well. When run on trees, belief propagation is guaranteed to converge to a unique, fixed set of values after a finite number of iterations.

For more details about the message passing algorithm, please look at the Notes for Lecture 4.

Message Passing Protocol

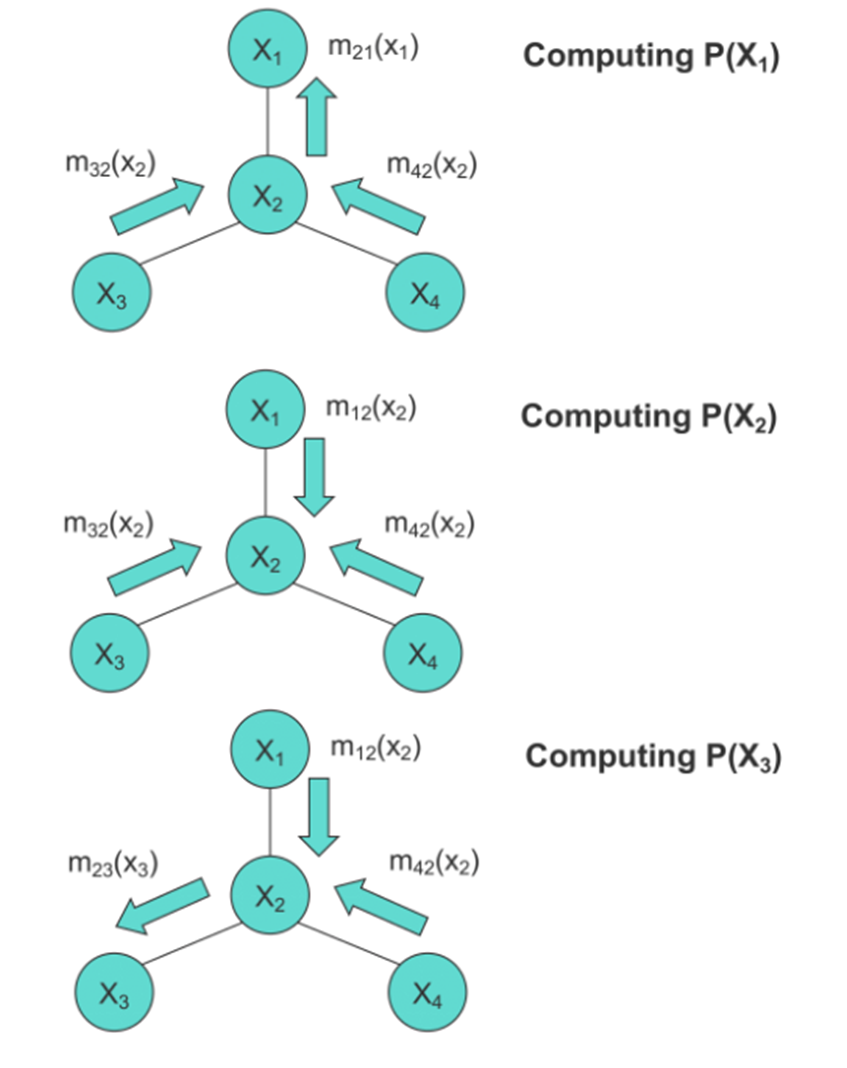

The message passing protocol dictates that a node can send a message to a neighbor only after it has received messages from all of its other neighbors. Hence, to naively compute the marginal of a given node, we treat that node as the root and run the message passing algorithm. The figure above illustrates this, showing the message directions used when computing each of three marginals.
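The rooting rule can be made concrete with a small recursive sum-product sketch. It assumes a hypothetical four-node tree over binary variables with made-up potentials; the helper names (`message`, `bp_marginal`, `brute_marginal`) are illustrative, not from the lecture. Each call to `message(i, j)` first requests the messages into `i` from all of its other neighbors, which is exactly the protocol's precondition, and the resulting marginals are checked against brute-force summation.

```python
import itertools

# Hypothetical tree over binary variables: edges 0-1, 1-2, 1-3.
# All potentials are illustrative numbers, not taken from the lecture.
EDGES = [(0, 1), (1, 2), (1, 3)]
NODE_POT = {0: [1.0, 2.0], 1: [1.5, 0.5], 2: [1.0, 1.0], 3: [0.3, 1.7]}
EDGE_POT = {(0, 1): [[2.0, 0.5], [0.5, 2.0]],
            (1, 2): [[1.0, 3.0], [0.2, 1.0]],
            (1, 3): [[0.7, 1.3], [1.3, 0.7]]}


def neighbors(i):
    return [b if a == i else a for (a, b) in EDGES if i in (a, b)]


def edge_pot(i, j, xi, xj):
    # Look up psi(x_i, x_j) regardless of the order in which the edge is stored.
    if (i, j) in EDGE_POT:
        return EDGE_POT[(i, j)][xi][xj]
    return EDGE_POT[(j, i)][xj][xi]


def message(i, j):
    """m_{i->j}(x_j); computed only after all messages into i from its other neighbors."""
    incoming = [message(k, i) for k in neighbors(i) if k != j]
    out = []
    for xj in (0, 1):
        total = 0.0
        for xi in (0, 1):
            term = NODE_POT[i][xi] * edge_pot(i, j, xi, xj)
            for m in incoming:
                term *= m[xi]
            total += term
        out.append(total)
    return out


def bp_marginal(root):
    """Treat `root` as the root: collect messages from every neighbor, then normalize."""
    belief = list(NODE_POT[root])
    for k in neighbors(root):
        belief = [b * m for b, m in zip(belief, message(k, root))]
    z = sum(belief)
    return [b / z for b in belief]


def brute_marginal(node):
    """Reference answer: sum the unnormalized joint over all 2^4 assignments."""
    probs = [0.0, 0.0]
    for x in itertools.product((0, 1), repeat=len(NODE_POT)):
        w = 1.0
        for i, xi in enumerate(x):
            w *= NODE_POT[i][xi]
        for (i, j) in EDGES:
            w *= EDGE_POT[(i, j)][x[i]][x[j]]
        probs[x[node]] += w
    z = sum(probs)
    return [p / z for p in probs]


for v in range(4):
    print(v, bp_marginal(v), brute_marginal(v))
```

Recomputing every message for each root is wasteful; the usual remedy is one inward and one outward pass that caches all 2|E| messages.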

Message Passing for HMMs

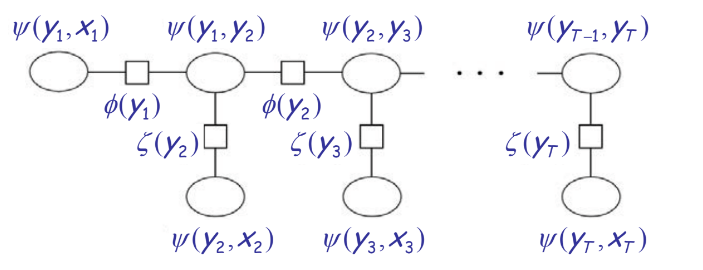

When the message passing algorithm is applied to the HMM shown above, we recover the forward and backward algorithms.

The corresponding clique tree for the HMM shown above is:

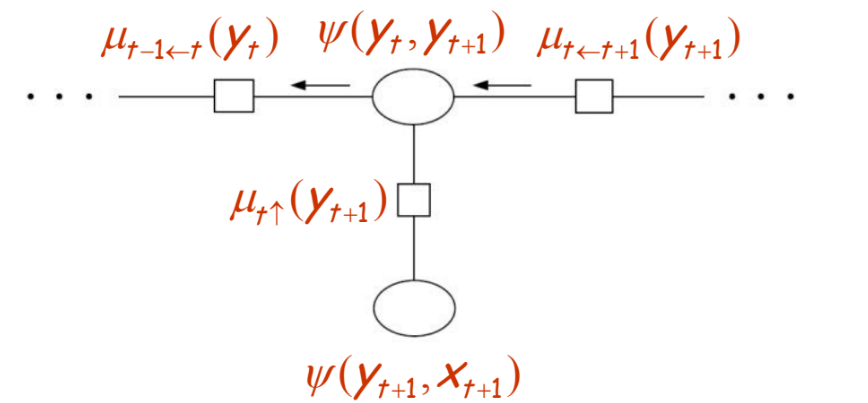

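Now, the messages (denoted by $\mu$) and the potentials (denoted by $\psi$) involved in the rightward pass are depicted in the figure below.

We have that,

$$\mu_{t \to t+1}(y_{t+1}) = \sum_{y_t} \psi(y_t, y_{t+1})\, \mu_{t-1 \to t}(y_t)\, \mu_{\uparrow t+1}(y_{t+1}).$$

We know that:

$$\psi(y_t, y_{t+1}) = p(y_{t+1} \mid y_t) = a_{y_t, y_{t+1}}$$

is the probability of transitioning from $y_t$ to $y_{t+1}$, and

$$\mu_{\uparrow t+1}(y_{t+1}) = p(x_{t+1} \mid y_{t+1})$$

is the probability of emitting $x_{t+1}$ in state $y_{t+1}$. Substituting these,

$$\mu_{t \to t+1}(y_{t+1}) = p(x_{t+1} \mid y_{t+1}) \sum_{y_t} a_{y_t, y_{t+1}}\, \mu_{t-1 \to t}(y_t),$$

which is the forward algorithm.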

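Similarly, the messages and the potentials involved in the leftward pass are depicted in the figure below.

Then we have that, with $a_{y_t, y_{t+1}} = p(y_{t+1} \mid y_t)$,

$$\mu_{t-1 \leftarrow t}(y_t) = \sum_{y_{t+1}} \psi(y_t, y_{t+1})\, \mu_{t \leftarrow t+1}(y_{t+1})\, \mu_{\uparrow t+1}(y_{t+1}) = \sum_{y_{t+1}} a_{y_t, y_{t+1}}\, p(x_{t+1} \mid y_{t+1})\, \mu_{t \leftarrow t+1}(y_{t+1}),$$

which is the backward algorithm.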
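The two passes can be checked numerically. The sketch below assumes a hypothetical 2-state HMM with made-up parameters `PI`, `A`, `B` and an observation sequence `OBS`. Here `alpha[t]` plays the role of the rightward (forward) message, including the local emission term, and `beta[t]` that of the leftward (backward) message; their normalized product gives the posterior $p(y_t \mid x_{1:T})$, compared against brute-force enumeration of all hidden paths.

```python
import itertools

# Hypothetical 2-state HMM; all numbers are illustrative.
PI = [0.6, 0.4]                 # p(y_1)
A = [[0.7, 0.3], [0.2, 0.8]]    # A[i][j] = a_{ij} = p(y_{t+1} = j | y_t = i)
B = [[0.9, 0.1], [0.3, 0.7]]    # B[i][o] = p(x_t = o | y_t = i)
OBS = [0, 1, 1, 0]
K, T = len(PI), len(OBS)


def forward():
    """Rightward pass: alpha_{t+1}(j) = p(x_{t+1} | j) * sum_i a_{ij} alpha_t(i)."""
    alpha = [[PI[j] * B[j][OBS[0]] for j in range(K)]]
    for t in range(1, T):
        alpha.append([B[j][OBS[t]] * sum(alpha[t - 1][i] * A[i][j] for i in range(K))
                      for j in range(K)])
    return alpha


def backward():
    """Leftward pass: beta_t(i) = sum_j a_{ij} p(x_{t+1} | j) beta_{t+1}(j)."""
    beta = [[1.0] * K for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(A[i][j] * B[j][OBS[t + 1]] * beta[t + 1][j] for j in range(K))
                   for i in range(K)]
    return beta


def posteriors():
    alpha, beta = forward(), backward()
    result = []
    for t in range(T):
        w = [alpha[t][i] * beta[t][i] for i in range(K)]
        result.append([v / sum(w) for v in w])
    return result


def brute_posteriors():
    """Reference: enumerate all K^T hidden paths."""
    post = [[0.0] * K for _ in range(T)]
    for ys in itertools.product(range(K), repeat=T):
        p = PI[ys[0]] * B[ys[0]][OBS[0]]
        for t in range(1, T):
            p *= A[ys[t - 1]][ys[t]] * B[ys[t]][OBS[t]]
        for t in range(T):
            post[t][ys[t]] += p
    return [[v / sum(row) for v in row] for row in post]


for t, (fb, ref) in enumerate(zip(posteriors(), brute_posteriors())):
    print(t, fb, ref)
```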

Correctness of Belief Propagation in Trees

Theorem: The message passing algorithm correctly computes all of the marginals in a tree.

This follows from the fact that there is exactly one path between any two nodes in a tree. Intuitively, this guarantees that exactly two messages are associated with each edge, one for each direction of traversal, and each message summarizes the entire subtree on its side of the edge.

Local and Global Consistency

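Let $\{\tau_C\}$ and $\{\tau_S\}$ denote the sets of functions associated with the cliques $C$ and separator sets $S$, respectively.

These sets of functions are locally consistent if the following properties hold:

$$\sum_{x_S} \tau_S(x_S) = 1 \quad \text{for every separator set } S,$$

$$\sum_{x_{C \setminus S}} \tau_C(x_C) = \tau_S(x_S) \quad \text{for every clique } C \text{ and separator set } S \subseteq C.$$

The first property implies that the functions associated with each separator set are normalized distributions. The second property requires that if we sum any clique function $\tau_C$ over all the variables in the clique that are not present in a sepset $S \subseteq C$, we must obtain the sepset function $\tau_S$.

These sets of functions are globally consistent if all $\tau_C$ and $\tau_S$ are valid marginals, i.e., they are the marginals of a single joint distribution.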

For junction trees, local consistency is equivalent to global consistency. A proof of this fact can be found at this link.

However, for graphs which are not trees, local consistency does not always imply global consistency.

Example

Consider the following two message passing sequences for the same graph:

It can be seen that we obtain different values for the same marginal depending on the message passing sequence.

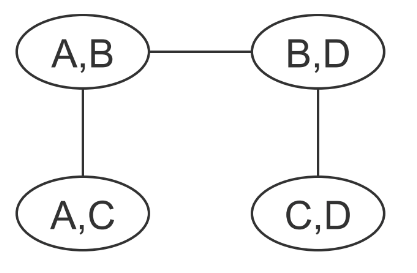

Similarly, if we construct a clique tree for the above graph (shown below), we see that one of the random variables is part of two non-neighbouring cliques. Hence, it is impossible for the two clique potentials that contain this variable to agree on its marginal, since no information about it is ever passed in the messages between them.

Loopy Belief Propagation

The above examples illustrate that on non-tree graphical models, message passing algorithms are no longer guaranteed to solve the inference problem correctly. One way to deal with this is to convert a non-tree graphical model into a junction tree. However, such a conversion often leads to a graph with an extremely large tree-width, which makes exact inference unaffordable. For example, converting an Ising model on a grid, as in the following figure, into a junction tree yields cliques whose tables grow exponentially with the side length of the grid. Therefore, we have to resort to approximate inference methods such as loopy belief propagation or the mean field approximation.
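To see how fast the cliques grow, the sketch below runs variable elimination symbolically (tracking only fill-in edges) on an $n \times n$ grid, as in a grid Ising model. It assumes binary variables, so a clique over $k$ variables needs a table with $2^k$ entries, and it uses a row-major elimination order chosen for illustration. For grids this order yields cliques of $n + 1$ variables, which matches the known tree width $n$ of the $n \times n$ grid, so no ordering can do better.

```python
def grid_adjacency(n):
    """Adjacency sets of an n x n grid (4-nearest-neighbour Ising lattice)."""
    adj = {(r, c): set() for r in range(n) for c in range(n)}
    for r in range(n):
        for c in range(n):
            if c + 1 < n:
                adj[(r, c)].add((r, c + 1))
                adj[(r, c + 1)].add((r, c))
            if r + 1 < n:
                adj[(r, c)].add((r + 1, c))
                adj[(r + 1, c)].add((r, c))
    return adj


def largest_elimination_clique(n, order):
    """Eliminate vertices in `order`, adding fill-in edges; return the largest clique size."""
    adj = grid_adjacency(n)
    largest = 0
    for v in order:
        nbrs = adj.pop(v)
        largest = max(largest, len(nbrs) + 1)  # v together with its remaining neighbours
        for u in nbrs:
            adj[u].discard(v)
            adj[u] |= nbrs - {u}  # fill-in: remaining neighbours of v become a clique
    return largest


for n in range(2, 8):
    row_major = [(r, c) for r in range(n) for c in range(n)]
    k = largest_elimination_clique(n, row_major)
    print(f"{n}x{n} grid: largest clique has {k} variables -> {2 ** k} table entries")
```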

Introduction to loopy belief propagation and empirical observations

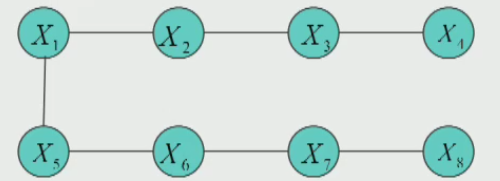

The main idea of loopy belief propagation is to extend the belief propagation algorithm (a.k.a. the message passing algorithm) from tree-structured to non-tree graphical models. For an undirected graphical model with pairwise potentials $\psi_{ij}$ and singleton potentials $\psi_i$, loopy belief propagation computes the messages and the approximate marginals (beliefs) with the following equations:

$$m_{i \to j}(x_j) = \sum_{x_i} \psi_i(x_i)\, \psi_{ij}(x_i, x_j) \prod_{k \in N(i) \setminus j} m_{k \to i}(x_i),$$

$$b_i(x_i) \propto \psi_i(x_i) \prod_{k \in N(i)} m_{k \to i}(x_i).$$

Specifically, all messages are updated simultaneously and passed iteratively among the nodes. Unlike belief propagation on trees, where messages are passed from the leaves to the root and back, the message passing here is recurrent: messages keep circulating around the loops of the graph. Another difference is that a node does not wait until it has collected messages from all of its other neighbors before sending a message; it uses whatever messages it currently has. Previous studies have shown that directly transplanting belief propagation from trees to non-tree graphical models leads to one of two outcomes:

- The algorithm converges, and the approximate marginals are usually close to those computed by brute-force marginalization of the variables.

- The algorithm fails to converge and oscillates between multiple answers for the beliefs $b_i(x_i)$.
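A minimal sketch of loopy belief propagation, assuming a pairwise MRF over binary variables with made-up potentials: `loopy_bp` performs synchronous (parallel) updates with per-message normalization, and `exact_marginals` is a brute-force reference. On the chain (a tree) the beliefs match the exact marginals; on the 4-cycle with weak coupling they converge to values that are close but not exact. The coupling `J`, the fields, and the tolerances are illustrative assumptions; with strong or frustrated couplings, a plain parallel schedule like this one can oscillate instead.

```python
import itertools
import math


def loopy_bp(n, edges, node_pot, edge_pot, max_iters=500, tol=1e-10):
    """Parallel ("flooding") loopy BP for a pairwise MRF over binary variables."""
    nbrs = {i: [] for i in range(n)}
    for i, j in edges:
        nbrs[i].append(j)
        nbrs[j].append(i)

    def psi(i, j, xi, xj):
        return edge_pot[(i, j)][xi][xj] if (i, j) in edge_pot else edge_pot[(j, i)][xj][xi]

    msgs = {(i, j): [0.5, 0.5] for i in range(n) for j in nbrs[i]}
    converged = False
    for _ in range(max_iters):
        new = {}
        for i, j in msgs:
            # m_{i->j}(x_j) = sum_{x_i} psi_i(x_i) psi_ij(x_i, x_j) prod_{k in N(i)\j} m_{k->i}(x_i)
            out = []
            for xj in (0, 1):
                total = 0.0
                for xi in (0, 1):
                    term = node_pot[i][xi] * psi(i, j, xi, xj)
                    for k in nbrs[i]:
                        if k != j:
                            term *= msgs[(k, i)][xi]
                    total += term
                out.append(total)
            z = sum(out)
            new[(i, j)] = [o / z for o in out]  # normalize for numerical stability
        delta = max(abs(a - b) for key in msgs for a, b in zip(msgs[key], new[key]))
        msgs = new
        if delta < tol:
            converged = True
            break

    beliefs = []
    for i in range(n):
        b = list(node_pot[i])
        for k in nbrs[i]:
            b = [bv * m for bv, m in zip(b, msgs[(k, i)])]
        z = sum(b)
        beliefs.append([v / z for v in b])
    return beliefs, converged


def exact_marginals(n, edges, node_pot, edge_pot):
    probs = [[0.0, 0.0] for _ in range(n)]
    for x in itertools.product((0, 1), repeat=n):
        w = math.prod(node_pot[i][x[i]] for i in range(n))
        w *= math.prod(edge_pot[(i, j)][x[i]][x[j]] for i, j in edges)
        for i in range(n):
            probs[i][x[i]] += w
    return [[p / sum(row) for p in row] for row in probs]


J = 0.3  # illustrative (weak) coupling strength
COUPLING = [[math.exp(J), math.exp(-J)], [math.exp(-J), math.exp(J)]]
NODE = [[1.0, math.exp(h)] for h in (0.5, -0.2, 0.1, 0.3)]
CHAIN = [(0, 1), (1, 2), (2, 3)]
CYCLE = CHAIN + [(3, 0)]

for name, edges in (("chain", CHAIN), ("cycle", CYCLE)):
    pots = {e: COUPLING for e in edges}
    beliefs, ok = loopy_bp(4, edges, NODE, pots)
    exact = exact_marginals(4, edges, NODE, pots)
    err = max(abs(b[1] - e[1]) for b, e in zip(beliefs, exact))
    print(name, "converged:", ok, "max error:", err)
```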

A theory behind loopy belief propagation: Bethe approximation to Gibbs free energy

A common approach is to approximate the intractable distribution $P$ with a tractable distribution $Q$. To obtain a good approximation, the KL-divergence between $Q$ and $P$ should be small. Writing the joint distribution in factorized form, $P(X) = \frac{1}{Z} \prod_a f_a(X_a)$, the KL-divergence between $Q$ and $P$ becomes:

$$\mathrm{KL}(Q \,\|\, P) = \sum_X Q(X) \log \frac{Q(X)}{P(X)} = -\sum_a \mathbb{E}_Q[\log f_a(X_a)] - H_Q(X) + \log Z.$$

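We call the first two terms the free energy, $F(P, Q) = -\sum_a \mathbb{E}_Q[\log f_a(X_a)] - H_Q(X)$. Since the KL-divergence is non-negative, $F(P, Q) \ge -\log Z$, with equality when $Q = P$. Now consider a tree-structured distribution such as the example shown above. Using the chain rule and the local Markov property of undirected graphical models, we can expand the joint probability into a product of local conditional probabilities. Applying Bayes' rule to each conditional, $p(x_j \mid x_i) = b_{ij}(x_i, x_j) / b_i(x_i)$, the joint probability can then be written entirely in terms of pairwise and singleton marginals.

In this form, each node's singleton marginal is divided out once for every edge beyond the first that touches it, so the singleton marginals of the leaf nodes do not occur at all. Therefore, we can summarize the joint probability for any tree-structured distribution as

$$b(x) = \prod_a b_a(x_a) \prod_i b_i(x_i)^{1 - d_i},$$

where $a$ ranges over the edges of the tree, $b_a$ are the pairwise marginals, and $d_i$ represents the degree of node $i$. With this expression and the definition of the free energy, we can write the entropy and the free energy as follows:

$$H_{\text{tree}} = -\sum_a \sum_{x_a} b_a(x_a) \log b_a(x_a) + \sum_i (d_i - 1) \sum_{x_i} b_i(x_i) \log b_i(x_i),$$

$$F_{\text{tree}} = \sum_a \sum_{x_a} b_a(x_a) \log \frac{b_a(x_a)}{f_a(x_a)} + \sum_i (1 - d_i) \sum_{x_i} b_i(x_i) \log b_i(x_i).$$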
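The tree factorization can be verified by brute force. This sketch assumes a hypothetical star-shaped tree on four binary variables (node 1 has degree 3) with random positive pairwise potentials; it computes the exact marginals and checks that $p(x) = \prod_{(i,j)} b_{ij}(x_i, x_j) \prod_i b_i(x_i)^{1 - d_i}$ for every joint state. The leaves (degree 1) contribute no singleton term, while the centre node's marginal is divided out twice.

```python
import itertools
import random

rng = random.Random(0)
EDGES = [(0, 1), (1, 2), (1, 3)]  # hypothetical star-shaped tree; node 1 has degree 3
N = 4
POT = {e: [[rng.uniform(0.2, 2.0) for _ in (0, 1)] for _ in (0, 1)] for e in EDGES}

STATES = list(itertools.product((0, 1), repeat=N))
weights = []
for x in STATES:
    w = 1.0
    for i, j in EDGES:
        w *= POT[(i, j)][x[i]][x[j]]
    weights.append(w)
Z = sum(weights)
joint = {x: w / Z for x, w in zip(STATES, weights)}

# Singleton and pairwise marginals b_i and b_ij, computed by brute force.
b_node = [[sum(p for x, p in joint.items() if x[i] == v) for v in (0, 1)] for i in range(N)]
b_edge = {(i, j): [[sum(p for x, p in joint.items() if x[i] == u and x[j] == v)
                    for v in (0, 1)] for u in (0, 1)] for i, j in EDGES}
degree = [sum(i in e for e in EDGES) for i in range(N)]


def tree_formula(x):
    """prod_edges b_ij(x_i, x_j) * prod_i b_i(x_i)^(1 - d_i)."""
    value = 1.0
    for i, j in EDGES:
        value *= b_edge[(i, j)][x[i]][x[j]]
    for i in range(N):
        value *= b_node[i][x[i]] ** (1 - degree[i])
    return value


max_err = max(abs(joint[x] - tree_formula(x)) for x in STATES)
print("max |p(x) - formula(x)| =", max_err)
```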

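Then we consider a non-tree graph like this:

For such a graph, we cannot write down the joint probability in the tree form $b(x) = \prod_a b_a(x_a) \prod_i b_i(x_i)^{1 - d_i}$, so the free energy $F$ is hard to calculate. However, for a general graph, we can approximate $F$ by the Bethe free energy $F_{\text{Bethe}}$, which has the same form as the tree expression:

$$F_{\text{Bethe}} = \sum_a \sum_{x_a} b_a(x_a) \log \frac{b_a(x_a)}{f_a(x_a)} + \sum_i (1 - d_i) \sum_{x_i} b_i(x_i) \log b_i(x_i) = -\sum_a \sum_{x_a} b_a(x_a) \log f_a(x_a) - H_{\text{Bethe}},$$

where $a$ now ranges over all factors and $d_i$ is the number of factors that involve $x_i$. Note that $F_{\text{Bethe}}$ equals the exact Gibbs free energy when the factor graph is a tree, but in general the Bethe entropy $H_{\text{Bethe}}$ is not the same as the true entropy $H$, so $F_{\text{Bethe}}$ is only an approximation.

Then for the loopy graph above, we can write $F_{\text{Bethe}}$ as: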

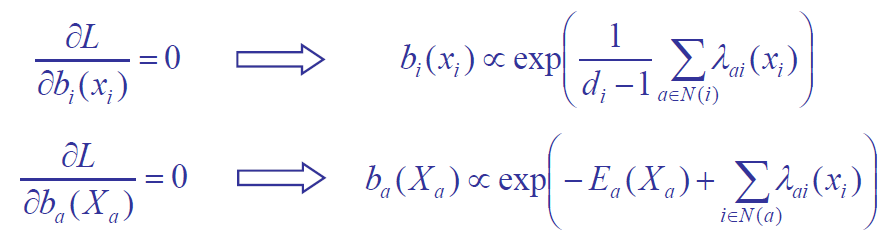
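A quick numerical check of these claims about the Bethe free energy, under the assumptions that the model has pairwise factors only ($a$ ranges over edges and $d_i$ is the node degree) and that $F_{\text{Bethe}}$ is evaluated at the exact marginals. On a tree the result equals $-\log Z$, the minimum of the true free energy. On a strongly coupled 3-cycle it does not: the gap equals $H - H_{\text{Bethe}}$, because on a loop the pairwise mutual-information terms in the Bethe entropy overcount the dependence between variables. The potentials and the coupling strength are made up for illustration.

```python
import itertools
import math


def bethe_free_energy_at_true_marginals(n, edges, pot):
    """Return (F_Bethe at the exact marginals, -log Z) for a pairwise binary MRF."""
    states = list(itertools.product((0, 1), repeat=n))
    w = {x: math.prod(pot[e][x[e[0]]][x[e[1]]] for e in edges) for x in states}
    z = sum(w.values())
    p = {x: v / z for x, v in w.items()}
    b_i = [[sum(q for x, q in p.items() if x[i] == v) for v in (0, 1)] for i in range(n)]
    b_a = {e: [[sum(q for x, q in p.items() if x[e[0]] == u and x[e[1]] == v)
                for v in (0, 1)] for u in (0, 1)] for e in edges}
    d = [sum(i in e for e in edges) for i in range(n)]
    # F_Bethe = sum_a sum_{x_a} b_a log(b_a / f_a) + sum_i (1 - d_i) sum_{x_i} b_i log b_i
    f = sum(b_a[e][u][v] * math.log(b_a[e][u][v] / pot[e][u][v])
            for e in edges for u in (0, 1) for v in (0, 1))
    f += sum((1 - d[i]) * bv * math.log(bv) for i in range(n) for bv in b_i[i])
    return f, -math.log(z)


TREE = [(0, 1), (1, 2), (2, 3)]
tree_pot = {(0, 1): [[2.0, 0.5], [1.0, 3.0]],
            (1, 2): [[1.0, 0.2], [0.7, 1.5]],
            (2, 3): [[0.4, 1.1], [2.2, 0.9]]}
STRONG = [[math.exp(2.0), math.exp(-2.0)], [math.exp(-2.0), math.exp(2.0)]]
CYCLE = [(0, 1), (1, 2), (0, 2)]
cycle_pot = {e: STRONG for e in CYCLE}

print("tree  (F_Bethe, -log Z):", bethe_free_energy_at_true_marginals(4, TREE, tree_pot))
print("cycle (F_Bethe, -log Z):", bethe_free_energy_at_true_marginals(3, CYCLE, cycle_pot))
```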

Constrained minimization of the Bethe free energy

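Then we want to solve the constrained minimization problem:

$$\min_{\{b_a\}, \{b_i\}} F_{\text{Bethe}} \quad \text{s.t.} \quad \sum_{x_i} b_i(x_i) = 1, \qquad \sum_{X_a \setminus x_i} b_a(X_a) = b_i(x_i) \quad \forall a,\ i \in N(a).$$

We can write the Lagrangian as:

$$L = F_{\text{Bethe}} + \sum_i \gamma_i \Big(1 - \sum_{x_i} b_i(x_i)\Big) + \sum_a \sum_{i \in N(a)} \sum_{x_i} \lambda_{ai}(x_i) \Big(b_i(x_i) - \sum_{X_a \setminus x_i} b_a(X_a)\Big).$$

Setting the gradients to zero gives:

$$\frac{\partial L}{\partial b_i(x_i)} = 0 \;\Rightarrow\; b_i(x_i) \propto \exp\Big(\frac{1}{d_i - 1} \sum_{a \in N(i)} \lambda_{ai}(x_i)\Big),$$

$$\frac{\partial L}{\partial b_a(X_a)} = 0 \;\Rightarrow\; b_a(X_a) \propto f_a(X_a) \exp\Big(\sum_{i \in N(a)} \lambda_{ai}(x_i)\Big).$$

An interesting finding is that if we identify $\lambda_{ai}(x_i) = \log \prod_{c \in N(i) \setminus a} m_{c \to i}(x_i)$, then we get exactly the BP equations (in factor-graph form):

$$b_i(x_i) \propto \prod_{a \in N(i)} m_{a \to i}(x_i), \qquad b_a(X_a) \propto f_a(X_a) \prod_{i \in N(a)} \prod_{c \in N(i) \setminus a} m_{c \to i}(x_i).$$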

Theory of Variational Inference

We have learned two families of approximate inference algorithms: loopy belief propagation (sum-product) and the mean-field approximation. In this section, we re-examine them from a unified point of view based on the variational principle: loopy BP corresponds to an outer approximation, and mean field to an inner approximation.

Variational Methods

“Variational” is a fancy name for optimization-based formulations, which represent the quantity of interest as the solution to an optimization problem. Many problems can be formulated in a variational way:

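- Eigenvalue problem: for a symmetric matrix $A$, the largest eigenvalue $\lambda_{\max}(A)$ satisfies $x^\top A x \le \lambda_{\max}(A)\, \|x\|_2^2$ for any $x$, with equality at the corresponding eigenvector. This gives the Courant-Fischer characterization of eigenvalues: $\lambda_{\max}(A) = \max_{\|x\|_2 = 1} x^\top A x$.

- Linear systems of equations: $Ax = b$. For a symmetric positive definite $A$, the variational formulation is $x^* = \arg\min_x \big\{\tfrac{1}{2} x^\top A x - b^\top x\big\}$. For large systems, we can apply the conjugate gradient method to compute this efficiently.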
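Both variational characterizations can be illustrated in plain Python. The sketch assumes a small, made-up symmetric positive definite matrix `A` and right-hand side `b`: power iteration finds the maximizer of the Rayleigh quotient $x^\top A x / x^\top x$ (random vectors never exceed it), and a textbook conjugate gradient loop minimizes $\tfrac{1}{2} x^\top A x - b^\top x$, whose minimizer solves $Ax = b$. For this particular matrix the largest eigenvalue is $3 + \sqrt{3}$.

```python
import random

# Illustrative SPD system (eigenvalues 3 - sqrt(3), 3, 3 + sqrt(3)).
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def rayleigh(M, x):
    """Rayleigh quotient x^T M x / x^T x."""
    return dot(x, matvec(M, x)) / dot(x, x)


def power_iteration(M, iters=500):
    """Converges to the top eigenvector, i.e. the maximizer of the Rayleigh quotient."""
    x = [1.0] * len(M)
    for _ in range(iters):
        y = matvec(M, x)
        norm = dot(y, y) ** 0.5
        x = [yi / norm for yi in y]
    return rayleigh(M, x), x


def conjugate_gradient(M, rhs, tol=1e-12, max_iters=100):
    """Minimizes 0.5 x^T M x - rhs^T x; the minimizer solves M x = rhs."""
    x = [0.0] * len(rhs)
    r = list(rhs)  # residual rhs - M x for x = 0
    p = list(r)
    rs = dot(r, r)
    for _ in range(max_iters):
        if rs < tol ** 2:
            break
        Mp = matvec(M, p)
        step = rs / dot(p, Mp)
        x = [xi + step * pi for xi, pi in zip(x, p)]
        r = [ri - step * mpi for ri, mpi in zip(r, Mp)]
        rs_new = dot(r, r)
        p = [ri + (rs_new / rs) * pi for ri, pi in zip(r, p)]
        rs = rs_new
    return x


def quadratic(M, rhs, x):
    return 0.5 * dot(x, matvec(M, x)) - dot(rhs, x)


lam, _ = power_iteration(A)
rng = random.Random(0)
samples = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(1000)]
print("lambda_max:", lam, "largest sampled Rayleigh quotient:",
      max(rayleigh(A, s) for s in samples))

x = conjugate_gradient(A, b)
print("CG solution:", x, "residual:", [ax - bi for ax, bi in zip(matvec(A, x), b)])
```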

Inference Problems in Graphical Models

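Given an undirected graphical model, i.e.,

$$p(x) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_C(x_C),$$

where $\mathcal{C}$ denotes the collection of cliques, one is interested in the inference of the marginal distributions

$$p(x_s) = \sum_{x_t,\ t \ne s} p(x).$$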

Ingredients: Exponential Families

Definition: We say $X$ follows an exponential family if the parametrized collection of density functions $\{p_\theta\}$ satisfies:

$$p_\theta(x) = \exp\{\langle \theta, \phi(x) \rangle - A(\theta)\}.$$

Here $\phi(x)$ is a vector of sufficient statistics for $\theta$ (see Larry Wasserman’s lecture notes from 10/36-705 for more details and examples here), and $A(\theta)$ is known as the log partition function, which is convex and lower semi-continuous. Further,

$$A(\theta) = \log \int \exp\{\langle \theta, \phi(x) \rangle\}\, \nu(dx).$$

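- Parametrize graphical models as exponential families: for an undirected graphical model, if we assume

$$\psi_C(x_C) = \exp\{\langle \theta_C, \phi_C(x_C) \rangle\}$$

and let

$$\theta = (\theta_C)_{C \in \mathcal{C}}, \qquad \phi(x) = (\phi_C(x_C))_{C \in \mathcal{C}},$$

then

$$p(x) = \frac{1}{Z} \prod_{C \in \mathcal{C}} \psi_C(x_C) = \exp\{\langle \theta, \phi(x) \rangle - A(\theta)\}, \qquad A(\theta) = \log Z.$$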

- Examples: Gaussian MRF, Discrete MRF.
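For a concrete instance of this parametrization, the sketch below treats the 2-node Ising model with $x \in \{0, 1\}^2$ and $\phi(x) = (x_1, x_2, x_1 x_2)$ as an exponential family, computes $A(\theta)$ by enumeration, and numerically checks the standard identity $\nabla A(\theta) = \mathbb{E}_\theta[\phi(X)]$, i.e. that the gradient of the log partition function is the vector of mean parameters. The value of `theta` is an arbitrary illustrative choice.

```python
import itertools
import math

STATES = list(itertools.product((0, 1), repeat=2))


def phi(x):
    """Sufficient statistics of the 2-node Ising model: (x1, x2, x1 * x2)."""
    return (x[0], x[1], x[0] * x[1])


def log_partition(theta):
    """A(theta) = log sum_x exp(<theta, phi(x)>), by enumeration."""
    return math.log(sum(math.exp(sum(t * f for t, f in zip(theta, phi(x)))) for x in STATES))


def mean_parameters(theta):
    """E_theta[phi(X)] computed from the normalized density."""
    a = log_partition(theta)
    mu = [0.0, 0.0, 0.0]
    for x in STATES:
        p = math.exp(sum(t * f for t, f in zip(theta, phi(x))) - a)
        mu = [m + p * f for m, f in zip(mu, phi(x))]
    return mu


def numerical_gradient(theta, eps=1e-6):
    """Central finite differences of A(theta)."""
    grad = []
    for k in range(len(theta)):
        up = list(theta)
        down = list(theta)
        up[k] += eps
        down[k] -= eps
        grad.append((log_partition(up) - log_partition(down)) / (2 * eps))
    return grad


theta = [0.5, -0.3, 1.2]  # arbitrary illustrative parameters
print("E_theta[phi]  :", mean_parameters(theta))
print("grad A(theta) :", numerical_gradient(theta))
```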

Ingredients: Convex Conjugate

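Definition: For a function $f$, the convex conjugate dual, also known as the Legendre transform of $f$, is defined as

$$f^*(\mu) = \sup_\theta \{\langle \mu, \theta \rangle - f(\theta)\}.$$

The convex conjugate dual is convex whether or not the original function is convex. Moreover, if $f$ is convex and lower semi-continuous, then

$$f(\theta) = f^{**}(\theta) = \sup_\mu \{\langle \theta, \mu \rangle - f^*(\mu)\}.$$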

- Application to Exponential Family

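Let $A(\theta)$ be the log partition function for the exponential family

$$p_\theta(x) = \exp\{\langle \theta, \phi(x) \rangle - A(\theta)\}.$$

The dual for $A$ is

$$A^*(\mu) = \sup_\theta \{\langle \mu, \theta \rangle - A(\theta)\},$$

and the stationarity condition is

$$\mu = \nabla A(\theta) = \mathbb{E}_\theta[\phi(X)].$$

We can thus represent $\theta$ through the mean parameter $\mu$, writing $\theta(\mu)$. Therefore, we have the following Legendre mapping:

$$A^*(\mu) = \langle \mu, \theta(\mu) \rangle - A(\theta(\mu)) = \mathbb{E}_{\theta(\mu)}[\log p_{\theta(\mu)}(X)] = -H(p_{\theta(\mu)}),$$

where $H$ is the Boltzmann-Shannon entropy function.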
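The Legendre mapping can be checked on the simplest exponential family, the Bernoulli distribution with $\phi(x) = x$ and $A(\theta) = \log(1 + e^{\theta})$. The sketch computes $A^*(\mu) = \sup_\theta \{\mu\theta - A(\theta)\}$ numerically by ternary search (valid because the objective is concave in $\theta$) and compares it with the negative entropy $\mu \log \mu + (1 - \mu)\log(1 - \mu)$. The search interval and iteration count are assumptions chosen for the values of $\mu$ used here.

```python
import math


def log_partition(theta):
    """A(theta) = log(1 + e^theta) for the Bernoulli family, computed stably."""
    if theta > 30:
        return theta + math.log1p(math.exp(-theta))
    return math.log1p(math.exp(theta))


def conjugate_dual(mu, lo=-40.0, hi=40.0, iters=300):
    """A*(mu) = sup_theta {mu * theta - A(theta)} via ternary search on a concave objective."""
    def objective(theta):
        return mu * theta - log_partition(theta)

    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if objective(m1) < objective(m2):
            lo = m1
        else:
            hi = m2
    return objective((lo + hi) / 2)


def negative_entropy(mu):
    """-H(mu) for a Bernoulli(mu) variable."""
    return mu * math.log(mu) + (1 - mu) * math.log(1 - mu)


for mu in (0.1, 0.3, 0.5, 0.8):
    print(mu, conjugate_dual(mu), negative_entropy(mu))
```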

Ingredients: Convex Polytope

Half-space Representation: the Minkowski-Weyl Theorem

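Theorem: A non-empty convex polytope $\mathcal{M}$ can be characterized by a finite collection of linear inequality constraints, i.e.

$$\mathcal{M} = \{\mu \in \mathbb{R}^d \mid \langle a_j, \mu \rangle \ge b_j,\ \forall j \in \mathcal{J}\}, \qquad |\mathcal{J}| < \infty.$$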

Marginal Polytope

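Definition: For a distribution $p$ and a vector of sufficient statistics $\phi$, the mean parameter is defined as:

$$\mu_\alpha = \mathbb{E}_p[\phi_\alpha(X)] = \int \phi_\alpha(x)\, p(x)\, \nu(dx),$$

and the set of all realizable mean parameters is denoted by:

$$\mathcal{M} := \{\mu \in \mathbb{R}^d \mid \exists\, p \text{ s.t. } \mathbb{E}_p[\phi_\alpha(X)] = \mu_\alpha \ \forall \alpha\}.$$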

- $\mathcal{M}$ is a convex set;

- For discrete exponential families, $\mathcal{M}$ is called the marginal polytope and has the following convex hull representation:

$$\mathcal{M} = \operatorname{conv}\{\phi(x),\ x \in \mathcal{X}^m\}.$$

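By the Minkowski-Weyl Theorem, the marginal polytope can be represented by a finite collection of linear inequality constraints; see the examples for the 2-node Ising model. With $x \in \{0, 1\}^2$ and $\phi(x) = (x_1, x_2, x_1 x_2)$, $\mathcal{M}$ is the convex hull of $(0,0,0)$, $(1,0,0)$, $(0,1,0)$, and $(1,1,1)$, or equivalently the set of $(\mu_1, \mu_2, \mu_{12})$ satisfying

$$\mu_{12} \ge 0, \qquad \mu_1 - \mu_{12} \ge 0, \qquad \mu_2 - \mu_{12} \ge 0, \qquad 1 + \mu_{12} - \mu_1 - \mu_2 \ge 0.$$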
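The half-space description of the 2-node Ising marginal polytope can be sanity-checked by sampling. The four inequalities are exactly the statements that the implied joint probabilities $p(1,1) = \mu_{12}$, $p(1,0) = \mu_1 - \mu_{12}$, $p(0,1) = \mu_2 - \mu_{12}$, and $p(0,0) = 1 + \mu_{12} - \mu_1 - \mu_2$ are non-negative. The sketch draws random distributions over the four joint states, maps them to mean parameters, and confirms that every such point satisfies the inequalities, while a point with $\mu_{12} > \mu_1$ does not. Function names are illustrative.

```python
import itertools
import random


def phi(x):
    return (x[0], x[1], x[0] * x[1])


VERTICES = [phi(x) for x in itertools.product((0, 1), repeat=2)]


def inequalities(mu):
    """Implied joint probabilities p(1,1), p(1,0), p(0,1), p(0,0); all must be >= 0."""
    m1, m2, m12 = mu
    return [m12, m1 - m12, m2 - m12, 1 + m12 - m1 - m2]


def in_polytope(mu, tol=1e-12):
    return all(v >= -tol for v in inequalities(mu))


def random_mean_parameter(rng):
    """Mean parameters of a random distribution over the four joint states."""
    w = [rng.random() for _ in VERTICES]
    p = [wi / sum(w) for wi in w]
    return tuple(sum(pi * v[k] for pi, v in zip(p, VERTICES)) for k in range(3))


rng = random.Random(0)
samples = [random_mean_parameter(rng) for _ in range(1000)]
print(all(in_polytope(mu) for mu in samples))  # True
print(in_polytope((0.5, 0.5, 0.9)))            # False: mu12 exceeds mu1
```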

Variational Principle

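The exact variational formulation is:

$$A(\theta) = \sup_{\mu \in \mathcal{M}} \{\langle \theta, \mu \rangle - A^*(\mu)\},$$

where $\mathcal{M}$ is the marginal polytope mentioned before, which is difficult to characterize, and $A^*$ is the conjugate dual (the negative entropy), which lacks an explicit form in general. The supremum is attained at $\mu = \mathbb{E}_\theta[\phi(X)]$, i.e., at the mean parameters (marginals) we want to compute.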
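For a discrete model small enough to enumerate, the variational principle can be verified directly. Since $-A^*(\mu)$ is the largest entropy among distributions with mean parameters $\mu$, the supremum can equivalently be taken over all distributions $p$ of $\langle \theta, \mathbb{E}_p[\phi] \rangle + H(p)$; this objective equals $A(\theta) - \mathrm{KL}(p \,\|\, p_\theta)$, so it is maximized at $p = p_\theta$. The sketch assumes the 2-node Ising model with an arbitrary `theta` and compares against randomly drawn distributions.

```python
import itertools
import math
import random

STATES = list(itertools.product((0, 1), repeat=2))


def phi(x):
    return (x[0], x[1], x[0] * x[1])


def score(theta, x):
    return sum(t * f for t, f in zip(theta, phi(x)))


def log_partition(theta):
    return math.log(sum(math.exp(score(theta, x)) for x in STATES))


def objective(theta, p):
    """<theta, E_p[phi]> + H(p); equals A(theta) - KL(p || p_theta)."""
    mean_term = sum(pi * score(theta, x) for pi, x in zip(p, STATES))
    entropy = -sum(pi * math.log(pi) for pi in p if pi > 0)
    return mean_term + entropy


theta = (0.4, -1.0, 2.0)  # arbitrary illustrative parameters
A_theta = log_partition(theta)
p_theta = [math.exp(score(theta, x) - A_theta) for x in STATES]

rng = random.Random(0)
others = []
for _ in range(200):
    w = [rng.random() + 1e-3 for _ in STATES]
    others.append([wi / sum(w) for wi in w])

print("A(theta)           =", A_theta)
print("objective(p_theta) =", objective(theta, p_theta))
print("best random p      =", max(objective(theta, p) for p in others))
```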

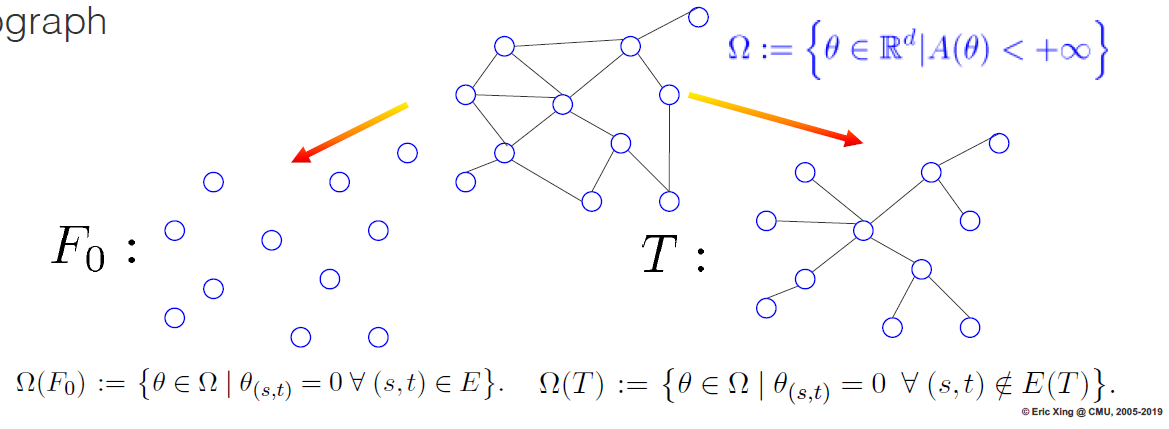

Next, we discuss two approximation methods: (1) the mean field approximation, which uses a non-convex inner bound and the exact form of the entropy; (2) the Bethe approximation and loopy belief propagation, which use a polyhedral outer bound and a non-convex Bethe approximation to the entropy.

Mean Field Approximation

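First, recall that for an exponential family with sufficient statistics $\phi$ defined on a graph $G$, the set of realizable mean parameters is defined as:

$$\mathcal{M}(G; \phi) := \{\mu \in \mathbb{R}^d \mid \exists\, p \text{ s.t. } \mathbb{E}_p[\phi(X)] = \mu\}.$$

Then we restrict to a subset of distributions associated with a tractable subgraph. For example, we transform a general graph $G$ with mean parameter set $\mathcal{M}(G)$ to the fully disconnected subgraph $F_0 = (V, \emptyset)$ with $\mathcal{M}_{F_0}(G)$, or to a spanning tree $T$ with $\mathcal{M}_T(G)$. This is illustrated in the following figure.

For a given tractable subgraph $F$, a subset of canonical parameters is:

$$\Omega(F) := \{\theta \in \Omega \mid \theta_\alpha = 0 \ \ \forall \alpha \notin \mathcal{I}(F)\},$$

where $\Omega$ is the set of valid canonical parameters and $\mathcal{I}(F)$ indexes the sufficient statistics associated with $F$. The corresponding set of realizable mean parameters is

$$\mathcal{M}_F(G; \phi) := \{\mu \in \mathbb{R}^d \mid \mu = \mathbb{E}_\theta[\phi(X)] \text{ for some } \theta \in \Omega(F)\} \subseteq \mathcal{M}(G; \phi).$$

This gives an inner approximation for the variational principle. The mean field method then solves the relaxed problem:

$$\max_{\tau \in \mathcal{M}_F(G)} \{\langle \tau, \theta \rangle - A_F^*(\tau)\},$$

where $A_F^* = A^*|_{\mathcal{M}_F(G)}$ is the exact dual function restricted to $\mathcal{M}_F(G)$. Because $\mathcal{M}_F(G) \subseteq \mathcal{M}(G)$, the optimal value is a lower bound on $A(\theta)$.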
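Naive mean field, the case $F = F_0$, can be sketched for a small binary model with $x_s \in \{0, 1\}$. Restricting to product distributions gives $\tau_{st} = \tau_s \tau_t$, and maximizing the objective over one $\tau_s$ at a time gives the closed-form update $\tau_s \leftarrow \sigma(\theta_s + \sum_{t \in N(s)} \theta_{st} \tau_t)$, where $\sigma$ is the logistic function. Each sweep cannot decrease the objective, and because $\mathcal{M}_{F_0}(G)$ is an inner approximation the final value stays below the exact $A(\theta)$. The 3-node cycle and its parameters are hypothetical.

```python
import itertools
import math

# Hypothetical 3-node cycle over x_s in {0, 1}; parameters are illustrative.
N = 3
THETA_S = [0.2, -0.5, 0.1]
THETA_ST = {(0, 1): 1.0, (1, 2): -0.8, (0, 2): 0.6}


def neighbors(s):
    for (a, b), w in THETA_ST.items():
        if a == s:
            yield b, w
        elif b == s:
            yield a, w


def exact_log_partition():
    total = 0.0
    for x in itertools.product((0, 1), repeat=N):
        energy = sum(THETA_S[s] * x[s] for s in range(N))
        energy += sum(w * x[a] * x[b] for (a, b), w in THETA_ST.items())
        total += math.exp(energy)
    return math.log(total)


def bernoulli_entropy(t):
    return -sum(v * math.log(v) for v in (t, 1.0 - t) if v > 0)


def mean_field_objective(tau):
    """<theta, tau> with tau_st = tau_s * tau_t, plus the exact entropy of the product distribution."""
    linear = sum(THETA_S[s] * tau[s] for s in range(N))
    pairwise = sum(w * tau[a] * tau[b] for (a, b), w in THETA_ST.items())
    return linear + pairwise + sum(bernoulli_entropy(t) for t in tau)


def naive_mean_field(sweeps=50):
    tau = [0.5] * N
    history = [mean_field_objective(tau)]
    for _ in range(sweeps):
        for s in range(N):
            field = THETA_S[s] + sum(w * tau[t] for t, w in neighbors(s))
            tau[s] = 1.0 / (1.0 + math.exp(-field))  # exact coordinate-wise maximizer
        history.append(mean_field_objective(tau))
    return tau, history


tau, history = naive_mean_field()
print("mean-field tau:", tau)
print("lower bound:", history[-1], "<= exact A(theta):", exact_log_partition())
```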

Geometry of Mean Field

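Mean field optimization is always non-convex for any exponential family in which the state space is finite (and $F$ is a strict subgraph of $G$). This can be seen as follows: the marginal polytope $\mathcal{M}(G)$ is the convex hull of the points $\phi(x)$, and $\mathcal{M}_F(G)$ contains all of these extreme points, since each is realized by a point mass, which factorizes over any subgraph. If $\mathcal{M}_F(G)$ were convex, it would therefore contain all of $\mathcal{M}(G)$; but $\mathcal{M}_F(G)$ is a strict subset of $\mathcal{M}(G)$, so it must be non-convex. For example, consider a two-node Ising model with the fully disconnected subgraph $F_0$:

$$\mathcal{M}_{F_0}(G) = \{(\mu_1, \mu_2, \mu_{12}) \mid 0 \le \mu_1 \le 1,\ 0 \le \mu_2 \le 1,\ \mu_{12} = \mu_1 \mu_2\}.$$

This set has a parabolic cross section along $\mu_1 = \mu_2$ (namely $\mu_{12} = \mu_1^2$), and hence it is non-convex.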
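The non-convexity argument can be seen with two extreme points. Both point masses, with mean parameters $(0,0,0)$ and $(1,1,1)$, lie in $\mathcal{M}_{F_0}(G)$, but their midpoint violates $\mu_{12} = \mu_1 \mu_2$, even though it is a realizable mean parameter (a 50/50 mixture of the two point masses). The helper names below are illustrative.

```python
def in_product_set(mu, tol=1e-12):
    """Membership in M_{F0}: mean parameters of fully factorized distributions."""
    m1, m2, m12 = mu
    return 0.0 <= m1 <= 1.0 and 0.0 <= m2 <= 1.0 and abs(m12 - m1 * m2) <= tol


def in_marginal_polytope(mu, tol=1e-12):
    """Membership in M: the four implied joint probabilities must be non-negative."""
    m1, m2, m12 = mu
    return min(m12, m1 - m12, m2 - m12, 1.0 + m12 - m1 - m2) >= -tol


a = (0.0, 0.0, 0.0)  # point mass at x = (0, 0)
b = (1.0, 1.0, 1.0)  # point mass at x = (1, 1)
mid = tuple((u + v) / 2 for u, v in zip(a, b))  # (0.5, 0.5, 0.5)

print(in_product_set(a), in_product_set(b))  # True True
print(in_product_set(mid))                    # False: 0.5 != 0.5 * 0.5
print(in_marginal_polytope(mid))              # True: realized by a 50/50 mixture
```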

Bethe Approximation and Sum-Product

The sum-product (belief propagation) algorithm is exact for trees but approximate for loopy graphs. It is interesting to consider how the algorithm on trees relates to the variational principle, and what the algorithm is doing on graphs with cycles. In fact, the message passing updates turn out to be a Lagrangian method for solving the stationarity conditions of a variational formulation.

Bethe Variational Problem (BVP)

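In the variational formulation $A(\theta) = \sup_{\mu \in \mathcal{M}} \{\langle \theta, \mu \rangle - A^*(\mu)\}$, there are usually two difficulties: the marginal polytope $\mathcal{M}$ is hard to characterize, and the exact entropy $-A^*$ lacks an explicit form. Therefore, the BVP uses the following two approximations:

- Substitute the marginal polytope with the tree-based outer bound:

$$\mathbb{L}(G) = \Big\{\tau \ge 0 \;\Big|\; \sum_{x_s} \tau_s(x_s) = 1,\ \ \sum_{x_t} \tau_{st}(x_s, x_t) = \tau_s(x_s)\Big\}.$$

- Substitute the exact entropy with the exact expression for trees:

$$-A^*(\tau) \approx H_{\text{Bethe}}(\tau) = \sum_{s \in V} H_s(\tau_s) - \sum_{(s,t) \in E} I_{st}(\tau_{st}),$$

where $H_s$ is the entropy of $\tau_s$ and $I_{st}$ is the mutual information of $\tau_{st}$.

With these two ingredients, the BVP is formulated as a simple structured problem:

$$\max_{\tau \in \mathbb{L}(G)} \{\langle \theta, \tau \rangle + H_{\text{Bethe}}(\tau)\}.$$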

Geometry of BVP

- Loopy BP can be derived as an iterative method for solving a Lagrangian formulation of the BVP; the proof is similar to that for tree graphs;

- A set of pseudo-marginals $\tau$ is a loopy BP fixed point in any graph if and only if it is a local stationary point of the BVP;

- For any graph, $\mathcal{M}(G) \subseteq \mathbb{L}(G)$, with equality if and only if the graph is a tree;

- For any element $\tau$ of the outer bound $\mathbb{L}(G)$, it is possible to construct a distribution with $\tau$ as a BP fixed point.
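A standard example of a point in $\mathbb{L}(G)$ but not in $\mathcal{M}(G)$ is a frustrated 3-cycle: uniform singleton pseudo-marginals and pairwise pseudo-marginals that put all their mass on disagreement. The sketch checks that these (hypothetical) pseudo-marginals are locally consistent, then shows they cannot be globally consistent: under any joint distribution the expected number of disagreeing edges is at most 2, because no assignment of three binary variables disagrees on all three edges, whereas the pseudo-marginals imply an expected count of 3.

```python
import itertools

EDGES = [(0, 1), (1, 2), (0, 2)]
# Hypothetical pseudo-marginals: uniform singletons, pairs that always disagree.
TAU_S = {s: [0.5, 0.5] for s in range(3)}
TAU_ST = {e: [[0.0, 0.5], [0.5, 0.0]] for e in EDGES}


def locally_consistent(tol=1e-12):
    """Check membership in L(G): non-negativity, normalization, and marginalization."""
    for s in TAU_S:
        if min(TAU_S[s]) < -tol or abs(sum(TAU_S[s]) - 1.0) > tol:
            return False
    for s, t in EDGES:
        pair = TAU_ST[(s, t)]
        if min(min(row) for row in pair) < -tol:
            return False
        for v in (0, 1):
            if abs(pair[v][0] + pair[v][1] - TAU_S[s][v]) > tol:  # sum over x_t
                return False
            if abs(pair[0][v] + pair[1][v] - TAU_S[t][v]) > tol:  # sum over x_s
                return False
    return True


def max_disagreements():
    """Largest number of disagreeing edges over all joint assignments of 3 binary variables."""
    return max(sum(x[s] != x[t] for s, t in EDGES)
               for x in itertools.product((0, 1), repeat=3))


# Expected number of disagreeing edges implied by the pairwise pseudo-marginals.
implied = sum(TAU_ST[e][0][1] + TAU_ST[e][1][0] for e in EDGES)

print(locally_consistent())  # True
print(max_disagreements())   # 2
print(implied)               # 3.0
```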

Remark

The connection between loopy BP and the Lagrangian formulation of the Bethe variational problem provides a principled basis for applying the sum-product algorithm to loopy graphs. However, there are no guarantees on the convergence of BP on loopy graphs, although a fixed point of loopy BP always exists. Even if the algorithm converges, the non-convexity of the Bethe variational problem means there is no guarantee that it reaches the global optimum. In general, there is also no guarantee that the optimal value of the BVP, $A_{\text{Bethe}}(\theta)$, is a lower bound on $A(\theta)$.

Nevertheless, this connection suggests a number of avenues for improving upon the ordinary sum-product algorithm, via progressively better approximations to the entropy function and tighter outer bounds on the marginal polytope, such as Kikuchi clustering.

Summary

Variational methods turn the inference problem into an optimization problem via exponential families and convex duality. However, the exact variational principle is usually intractable to solve, and approximations are required. From a theoretical point of view, there are two distinct components of these approximations:

- Either an inner or an outer bound on the marginal polytope;

- Various approximations to the entropy function.

In this lecture, we went through the theory behind two mainstream variational methods: mean field and belief propagation. In addition, there is another storyline on Kikuchi clustering and its variants. In summary:

- Mean field: non-convex inner bound and exact form of entropy;

- Belief Propagation: polyhedral outer bound and non-convex Bethe approximation;

- Kikuchi and variants: tighter polyhedral outer bounds and better entropy approximations.

More information on this topic can be found in Sections 3 and 4 of Wainwright & Jordan’s paper.

References

- Yedidia, J.S., Freeman, W.T. and Weiss, Y., 2001. Generalized belief propagation. Advances in Neural Information Processing Systems, pp. 689-695.

- Wainwright, M.J. and Jordan, M.I., 2008. Graphical models, exponential families, and variational inference. Foundations and Trends® in Machine Learning, Vol 1(1-2), pp. 1-305. Now Publishers, Inc.