Lecture 13: Approximate Inference: Monte Carlo and Sequential Monte Carlo Methods

Wrapping up variational inference, and overview of Monte Carlo methods.

Recap of Variational Principles

Last class, we reformulated probabilistic inference as an optimization problem. Invoking conjugate duality, we defined the exact variational formulation for the partition function

where:

\theta are the canonical parameters in an exponential family distribution\mu are the mean parameters\mathcal{M} is the marginal polytope (convex combination of the marginals of the sufficient statistics)A^* is the negative entropy function

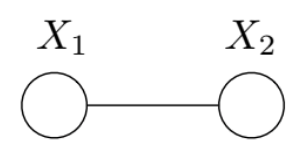

Example: Two-node Ising Model

To better understand this variational formulation, we consider the two-node Ising model.

Its distribution is

and it has sufficient statistics .

Plugging into the variational formulation of the partition function, we get

where the marginal polytope

As we showed in the previous lecture, the dual

By plugging in for

In this example, we were able to compute everything exactly. However this is not always possible, and so approximations such as the mean field method, Bethe approximation, and loopy belief propagation are used. The mean field method (to be described more in the following section) gives a non-convex inner bound and exact form of entropy. The non-convex Bethe approximation and loopy belief propagation (discussed later in these notes) provide polyhedral outer bounds.

Mean Field Approximation

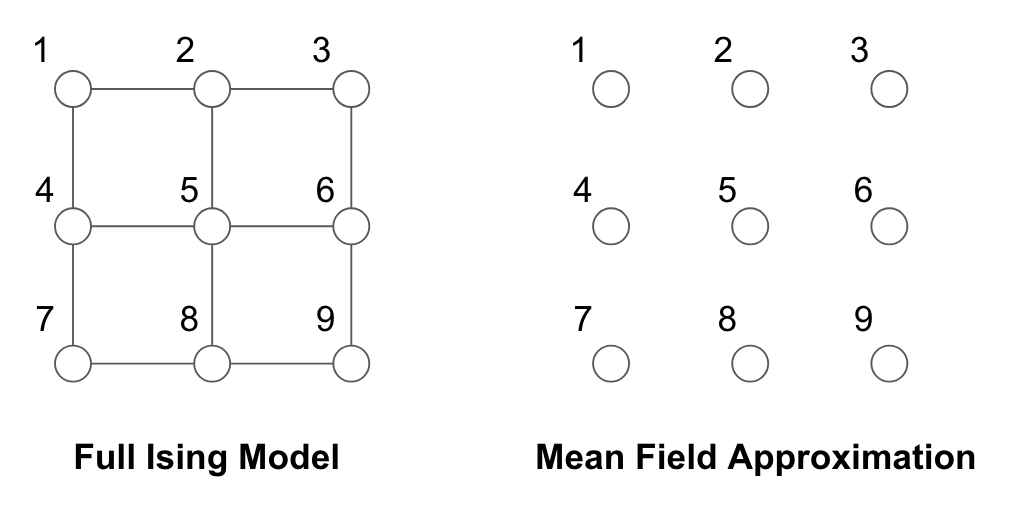

Graphically, the mean field approximation can be thought of as a tractable

subgraph approximation

Mean Field Methods

For a given tractable subgraph

This subset of parameters constrained by the subgraph defines a new subspace, known as

the inner approximation to the full marginal polytope. That is,

Making the mean field approximation, we solve the relaxed problem

where is the exact dual function restricted to .

Naive Mean Field for Ising Model

Consider the Ising model in its representation:

In making the mean field approximation, we cut out all of the edges as shown below:

The mean parameters are:

For the fully disconnected graph

Note that by the mean field approximation, we have that

The mean field objective, which lower bounds

To solve, take the derivative with respect to

Summary of Mean Field Approximation

- Mean field optimization is always non-convex for any exponential family in which the state space

\mathcal{X}^m is finite. Thus, it is not guaranteed to get the global optimum. - Recall that the marginal polytope is a convex hull, and that the adjusted marginal under the mean field approximation contains all the extreme points. Note that if this adjusted marginal is a strict subset, then it must be non-convex.

- Simple algorithm, but solves a much more complex intractable problem in an iterative fashion.

Bethe Approximation and Sum-Product

Sum-Product Algorithm Recap

Message passing rule:

Marginals:

Trees Graphic Models

We have discrete variables

The sufficient statistics are:

\mathbb{I}_j(x_s) wheres \in V, j \in \chi_s \mathbb{I}_{jk}(x_s, x_t) where(s, t)\in E, (j, k)\in \chi_s \times \chi_t

Then the mean parameters are marginals and pair-wise marginals:

Marginal Polytopes for Trees

The algorithm produces an exact solution for tree graphic models. The marginal polytope for is the same as the true polytope, because the local consistency is sufficient for global consistency in a tree.

If

Decomposition of Entropy for Trees

In order to perform optimization, we define

The entropy can be decomposed as:

Exact Variational Inference on Trees

With

We use the Lagrangian to solve the problem:

We assign Lagrange multipliers:

\lambda_{ss} forC_{ss}(\mu) := 1 - \sum_{x_s}\mu(x_s) = 0 ,\lambda_{ts}(x_s) forC_{ts}(x_s;\mu) := \mu(x_s) - \sum_{x_t}\mu_{st}(x_s, x_t) = 0

The derivatives are given by

By setting the derivatives to 0 and solving for

After adjusting the Lagrange multipliers to enforce constraints,

Belief Propagation on Arbitrary Graphs and Bethe Approximation

Inspired by sum-product algorithm on tree graph, we can have another approximation approach to solve the variational formulation:

The two main difficulties of above variational problem are:

- The marginal polytope

\mathcal{M} is hard to characterize. - The exact entropy

-A^*(\mu) lacks explicit form.

To address the first difficulty, we use the tree-based outer bounder:

The conditions on the

For the second difficulty, we can approximate the true entropy with Bethe entropy, which is the exact expression for trees:

It has the explicit form, which is the sum of entropy of every node minus the sum of mutual information of every edge.

Combining these two approximations, we derive the Bethe Variational Problem (BVP):

In contrast to the Mean Field Method, which uses an inner approximation

Some notes about BVP:

- It may not converge (because the objective function is not convex).

- It may not converge to the right answer (because the solution may not be in the

\mathcal{M}(G) ). - There is no guarantee that

A_{Bethe}(\theta) is the lower bound ofA(\theta)

In summary:

- Variational methods in general turn inference into an optimization problem via exponential families and convex duality.

- The exact variational principle is intractable to solve. There are two distinct components for approximation:

- Inner or outer bound for the marginal polytope

- Various approximation for the entropy function

- Three approximated approaches:

- Mean field: non-convex inner bound of marginal polytope and exact form of entropy

- Bethe Variational Problem: polyhedral outer bound and non-convex Bethe approximation of entropy.

- Kikuchi: tighter polyhedral outer bounds and better entropy. approximations

Monte Carlo and Sequential Monte Carlo Methods

Overview

Changing gears, we now introduce an alternative set of methods for approximate inference. These methods are based on on stochastic simulation/sampling from a target distribution. In many inference tasks (such as finding a marginal

In such problems, we may not have the true distribution in closed-form or this integral might be tough to carry out. However, if we are able to simply sample from this distribution, approximate inference is possible by using a sample-based representation of

This is in essence the spirit of Monte Carlo Methods, which give us a stochastic representation of a potentially complex distribution. This representation can then be used to compute the marginals and expectations that we care about. The good news is that these approximations are asymptotically exact (they get closer to the true

- How exactly do we draw from them from complex distributions?

- Not all samples are equally useful (more on this later).

- How do we know we’ve drawn enough samples?

Direct Sampling: A Naive Approach

We first discuss a seemingly obvious solution for sampling in the case where we can easily sample from the joint distribution. Such a case is if we have a BN, which gives us a straightforward generative model for a set of RVs. To sample from a joint distribution, we can simply traverse a BN in topological order, constructing our sample by filling in values node by node. At each step, we use the CPDs as well as the previously filled-in values. We can run this process many times and then use frequency counts to perform any inference task, such as finding a conditional probability.

However, we observe that we run into an issue when we deal with large models and want to consider rare events. In such cases our approximate inference estimates can easily be biased because as the sample size is too small. If we want to condition on such a rare event, our frequency counts will give a very low-sample estimate. In the worst case, we may even have 0 examples for a particular rare event, falsely indicating that such events are impossible and also making it impossible to condition on such events. This can be extended in general to any case where we can sample from the joint easily. If we want inferences that involve conditionals, we will have to perform and keep track of an exceedingly large number of samples.

Rejection Sampling

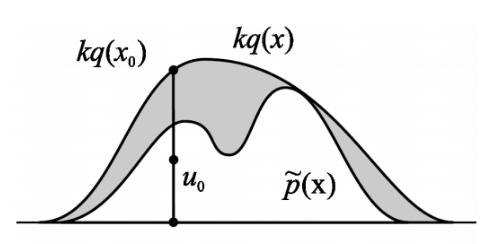

Direct sampling assumed the joint was easy to sample from. However, now let’s consider a case where

- The target distribution

p(x) is difficult to sample from. - The unnormalized distribution

\tilde{p}(x) = \frac{1}{Z}p(x) is easy to evaluate. Note that this alone does not makep(x) amenable to sampling. - The proposal distribution

q(x) is a distribution that we can easily sample from (e.g., uniform or normal). k is a chosen constant such thatkq(x) \geq \tilde{p}(x) for allx . This is called the comparison function.

Procedure

- Sample

x_0 fromq(x). - Sample a number

u_0 from the uniform distribution over[0,kq(x_0)] . - Reject the sample if

u_0 > \tilde{p}(x_0) and retain the sample otherwise.

Note that steps 2 and 3 are akin to accepting the sample

Correctness

We can formally show that this procedure samples correctly from

Pitfalls

If the proposal distribution

for

One potential way to fix this is to use adaptive rejection sampling, which covers

Importance Sampling

Suppose we want to evaluate expectations using samples from a complicated probability distribution. We assume that:

p(x) is hard to sample from but easy to evaluateq(x) is easy to sample fromf(x) is a function we want to evaluate in expectation:\langle f(x) \rangle .q(x) > 0 wheneverp(x)>0 orq dominatesp .

Unnormalized Importance Sampling

Procedure:

- Draw

M samplesx^{(m)} fromq(x) . - Determine weights

w^{(m)} for samples equal to the likelihood ratiow^{(m)} = \frac{p(x^{(m)})}{q(x^{(m)})} . - Compute expectation as:

We call this unnormalized because these weights are likelihood ratios, so there is no reason that they need to sum to

Correctness

Note that this does not give us sample from the target distribution but we can prove correctness for the expected value estimate.

The key step is the third equality where we can approximate the integral assuming

Normalized Importance Sampling

Here we no longer assume that we know

Procedure

- Draw

M samplesx^{(m)} fromq(x) . - Calculate ratios

r^{(m)} for samples equal tor^{(m)} = \frac{\tilde{p}(x^{(m)})}{q(x^{(m)})} . - Compute expectation as

Correctness

We observe first that

Again the key step is the fourth equality were we approximate both numerator and denominator using samples drawn form

Comparison between Normalized and Unnormalized

On finite samples. The unnormalized version gives an unbiased estimator of the true expectation while the normalized version gives a biased estimator of the true expectation. However, the variance of the normalized version is generally lower in practice.

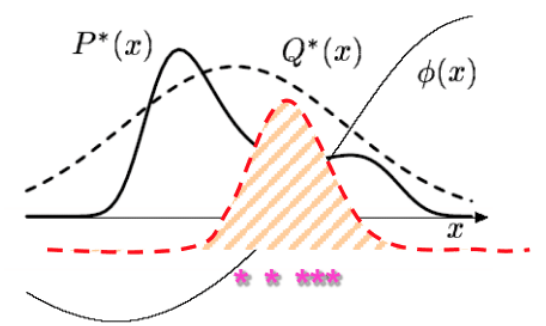

Pitfalls

These importance sampling approaches are based on likelihood weighting, which is simple to operate but still might be inaccurate in some peculiar scenarios. Again the core issue is when our proposal and target distributions are not similar enough. Consider the following

Essentially, what importance sampling is trying to do is to weight the samples such that they reflect relative importance to each other. The hope is that even if regions where

- We could use heavy-tailed proposal distributions. However, this has the disadvantage of inefficiently drawing a lot of wasted samples with low importance.

- We can use weighted re-sampling (see next section).

Weighted Re-Sampling

- Draw

N samples fromq :X_1, …, X_N - Construct weights

w_1, …, w_N equal tow^m = \frac{P(X^m)/Q(X^m)}{\sum_m P(X^m)/Q(X^m)} = \frac{r^m}{\sum_m r^m} - Sub-Sample

N’ examplesx from{X_1, …, X_N} with probability{w_1, …, w_N} with usuallyN’ > > N .

This is a sense amplifies the high importance samples while diminishes the low importance samples.

Particle Filters Sketch

Here is another algorithm that uses the re-sampling idea to do very efficient and elegant sequential inference tasks. The goal here is to make a fast sampling based inference algorithm for the state space model we looked at previously.

We have already studied the Kalman Filter algorithm for this model. However, KF is often demanding to implement in high dimensional spaces or when the transitional model is not Gaussian. Hence, a sampling algorithm could be useful. The goal here is to get samples from the posterior

We establish a recursive relation like we did for the KF algorithm. We want to update the posterior distribution of the hidden variables in light of each new observation. This is essentially a recursive two step process:

- The starting point at time

t is - We want to draw samples from

p(X_t | Y_{1:t-1}) (treat like our proposal distribution forp(X_t | Y_{1:t}) ) and give them weights equal tow_t^m = \frac{p(Y_t | X_t^m)}{\sum_{m=1}^M p(Y_t | X_t^m)} . We can now representp(X_t | Y_{1:t}) as the set of samples and weights, noting that\sum_m w_t^m = 1 . - To find weights and samples at the next time step for

p(X_{t+1} | Y_{1:t+1}) , we do a time update and then a measurement update:

Time update: We decompose the probability into a term that we can replace with our sample representation and the transition probability:

The final term is the weighted sum of the transition probabilities conditioning on the samples of the previous states. This is a mixture distribution and samples can be easily drawn by choosing a component

Measurement Update: Here we essentially perform step 1 again except now using the sample representation generated in the Time Update. We have a new observation

Trading the Time and Measurement updates, we can proceed sequentially down the chain. The following schematic illustrates the process where the mixture distribution for the posterior at each time step is represented by circles whose sizes indicate their weights.

Particle Filters are especially interesting because we can now draw samples from more complicated distributions such as SSMs.

Rao-Blackwellised Sampling

The idea of Rao-Blackwellised Sampling is that if our samples involve a long list of RVs, we will get high variance in any estimates we make. However, we can sample a smaller subset

where the

Using law of total variance

we get

which implies that