Work in progress

Aural Fauna

PARTICIPANT INTERACTION VIDEO 1 | PARTICIPANT INTERACTION VIDEO 2 | VOICE MIMICKING TEST 1 | VOICE MIMICKING TEST 2 | AMBIENT SOUNDSCAPE (MP3)

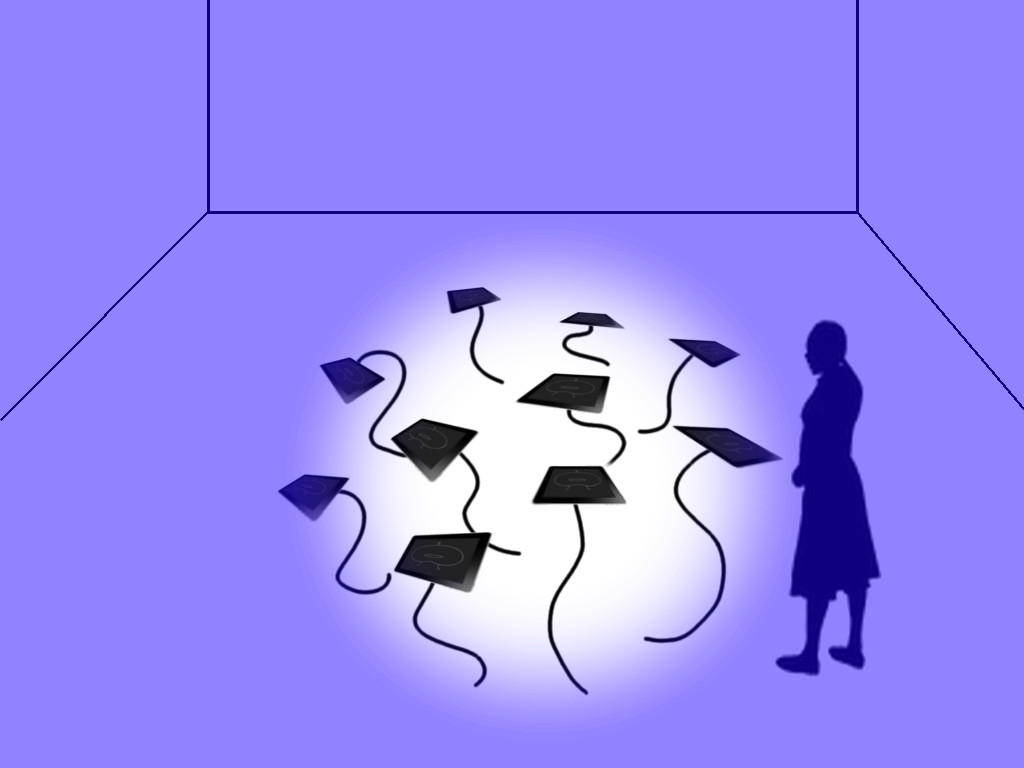

One version, Aural Fauna and a Bower Bird, was presented in Seattle in January 2014. Its story runs as follows: the aural fauna are sleeping. From time to time, one of them awakes and sings. A bowerbird (a horn player) happens to come near them, listens to the aural fauna, and mimics their sounds. At the end they all sing together. Six iPads were installed surrounding the audience, each running the Aural Fauna app and showing a different entity, and the horn player walked around while interacting with the six aural fauna. The ambient sound was played through two sets of loudspeakers. After the show, audience members were invited to interact with the Aural Fauna themselves. The two short clips above, PARTICIPANT INTERACTION VIDEO 1 and PARTICIPANT INTERACTION VIDEO 2, show their enjoyment.

The project is responsive to sound and responds differently to pitched and non-pitched sound. A person coming into the space and speaking triggers short, non-"singing" vocalizations from the Aural Fauna. Pitched sound, and longer tones in particular, provokes a response similar to singing. (Technically, the entity sings in its own octave but tries to match the pitch of the visitor’s voice.) The entities are normally invisible, even when vocalizing amongst themselves, but they become visible when they react to a visitor. All sound, visualization and interaction are handled by a custom software and hardware system made by the team. The software uses interactive technologies such as SuperCollider, a real-time audio synthesis language, for audio control and synthesis, and an openFrameworks application for video and animation. The sound of the aural fauna is synthesized and spatialized in real time; using surround sound, the spatialized sound appears to have location and distance. Installation requires at least one video projector, eight speakers, one or more computers, and several iPads.
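The response rule described above (short vocalizations for speech, singing for longer pitched tones, with the entity staying in its own octave while matching the visitor's pitch) can be illustrated with a small sketch. This is not the team's SuperCollider code: the function names, the half-second threshold for a "longer tone", and the idea of defining an entity's octave by its lowest frequency are all assumptions made for illustration.

```python
import math


def match_pitch_in_octave(visitor_hz: float, octave_low_hz: float) -> float:
    """Shift the visitor's pitch by whole octaves into the entity's octave.

    The entity's octave is assumed (hypothetically) to be the range
    [octave_low_hz, 2 * octave_low_hz). Shifting by whole octaves keeps
    the pitch class, so the entity "matches" the visitor's note.
    """
    if visitor_hz <= 0 or octave_low_hz <= 0:
        raise ValueError("frequencies must be positive")
    # Number of whole octaves between the visitor's pitch and the octave floor.
    octaves = math.floor(math.log2(visitor_hz / octave_low_hz))
    return visitor_hz / (2.0 ** octaves)


def choose_response(is_pitched: bool, duration_s: float,
                    long_tone_s: float = 0.5) -> str:
    """Pick a response type; long_tone_s is an assumed threshold."""
    if is_pitched and duration_s >= long_tone_s:
        return "sing"
    return "short_vocalization"


if __name__ == "__main__":
    # A visitor sings A3 (220 Hz); an entity whose octave starts at 880 Hz
    # answers two octaves higher, on the same pitch class.
    print(match_pitch_in_octave(220.0, 880.0))
    print(choose_response(is_pitched=True, duration_s=1.2))
    print(choose_response(is_pitched=False, duration_s=1.2))
```

In a real installation the pitch and the pitched/non-pitched decision would come from an audio analysis stage, which this sketch leaves out.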

In future development, the Aural Fauna are expected to grow as a child grows. For example, they may develop a more complex communication system for tutti, or perhaps inaudible conversation among themselves. Additionally, a machine learning algorithm could be applied to give the Aural Fauna artificial intelligence, allowing them to mimic human vocal behavior and to create a more harmonic tutti as a group.

In collaboration with Donald Craig