Cho ruwächulew, kas tzij qˈuia u wäch ri quechˈaw wi ri winak, chquijujunal cˈut cˈo ri quel cubij ri qui tzij.

Structured Belief Propagation for NLP

Tutorial at ACL 2015 in Beijing and ACL 2014 in BaltimoreSlides

Matthew R. Gormley, Jason Eisner.

ACL Tutorials. 2015. Beijing, China.

ACL Tutorials. 2014. Baltimore, MD.

The latest slides for ACL 2015 are now available!

[slides (.pptx)] [slides (.pdf)]

(See below for individual sections.)

Videos

The ACL 2015 video recordings are available by request.

Short Summary

Do you want to push past the simple NLP models (logistic regression, PCFG, etc.) that we've all been using for 20 years? Then this tutorial is extremely practical for you!

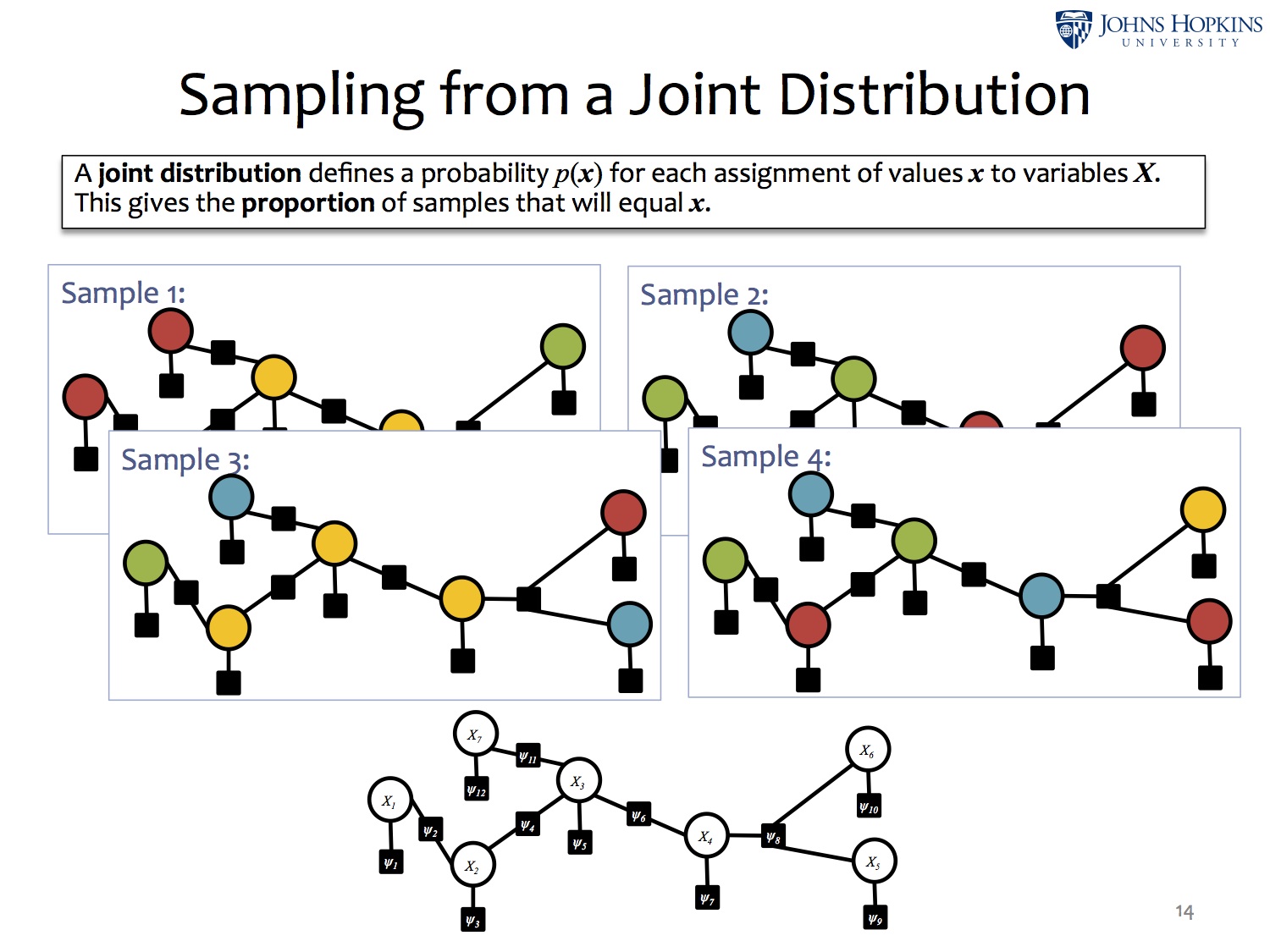

- Models: Factor graphs can express interactions among linguistic structures.

- Algorithm: BP estimates the global effect of these interactions on each variable, using local computations.

- Intuitions: What’s going on here? Can we trust BP’s estimates?

- Fancier Models: Hide a whole grammar and dynamic programming algorithm within a single factor. BP coordinates multiple factors.

- Tweaked Algorithm: Finish in fewer steps and make the steps faster.

- Learning: Tune the parameters. Approximately improve the true predictions – or truly improve the approximate predictions.

- Software: Build the model you want!

Abstract

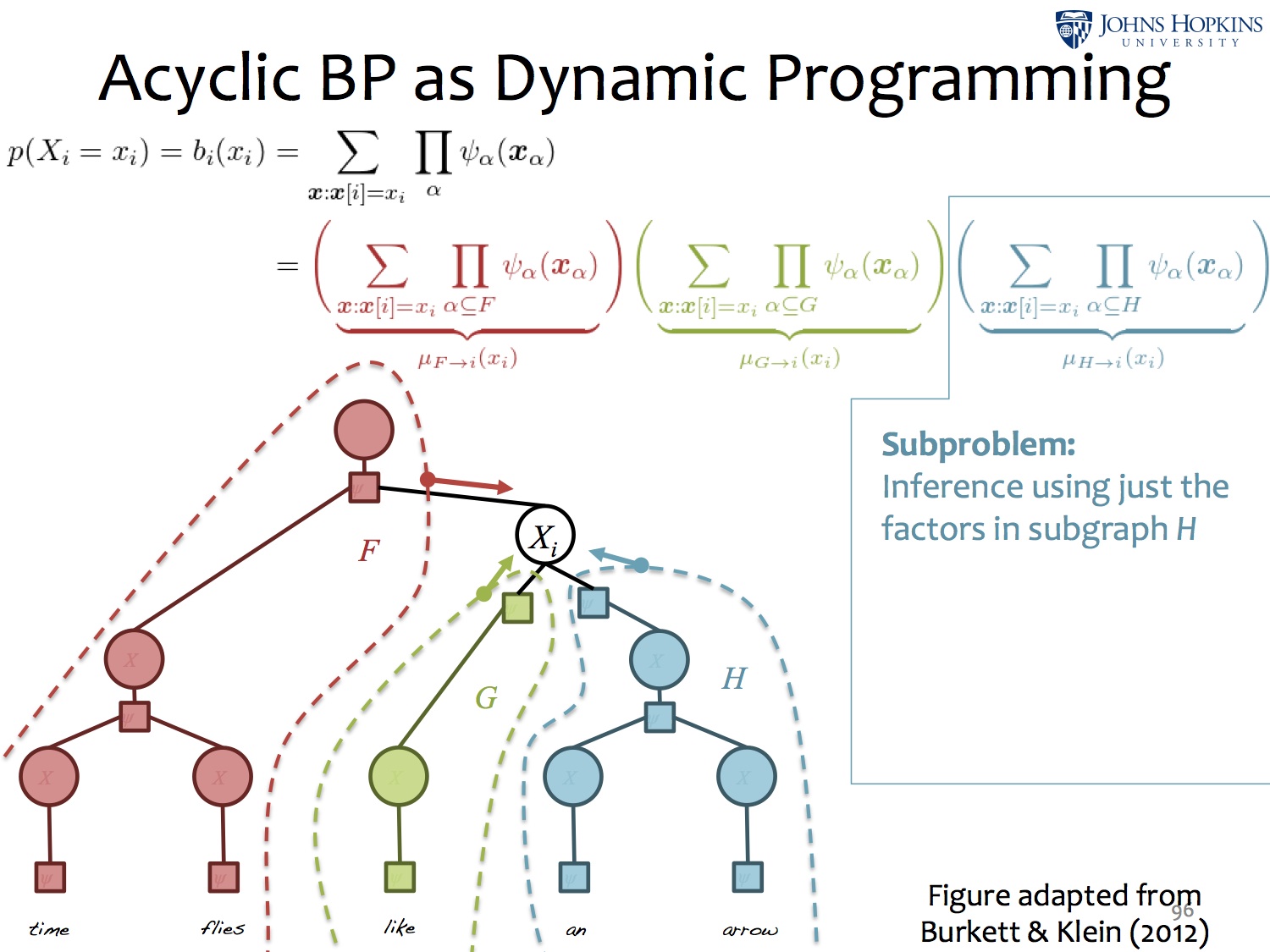

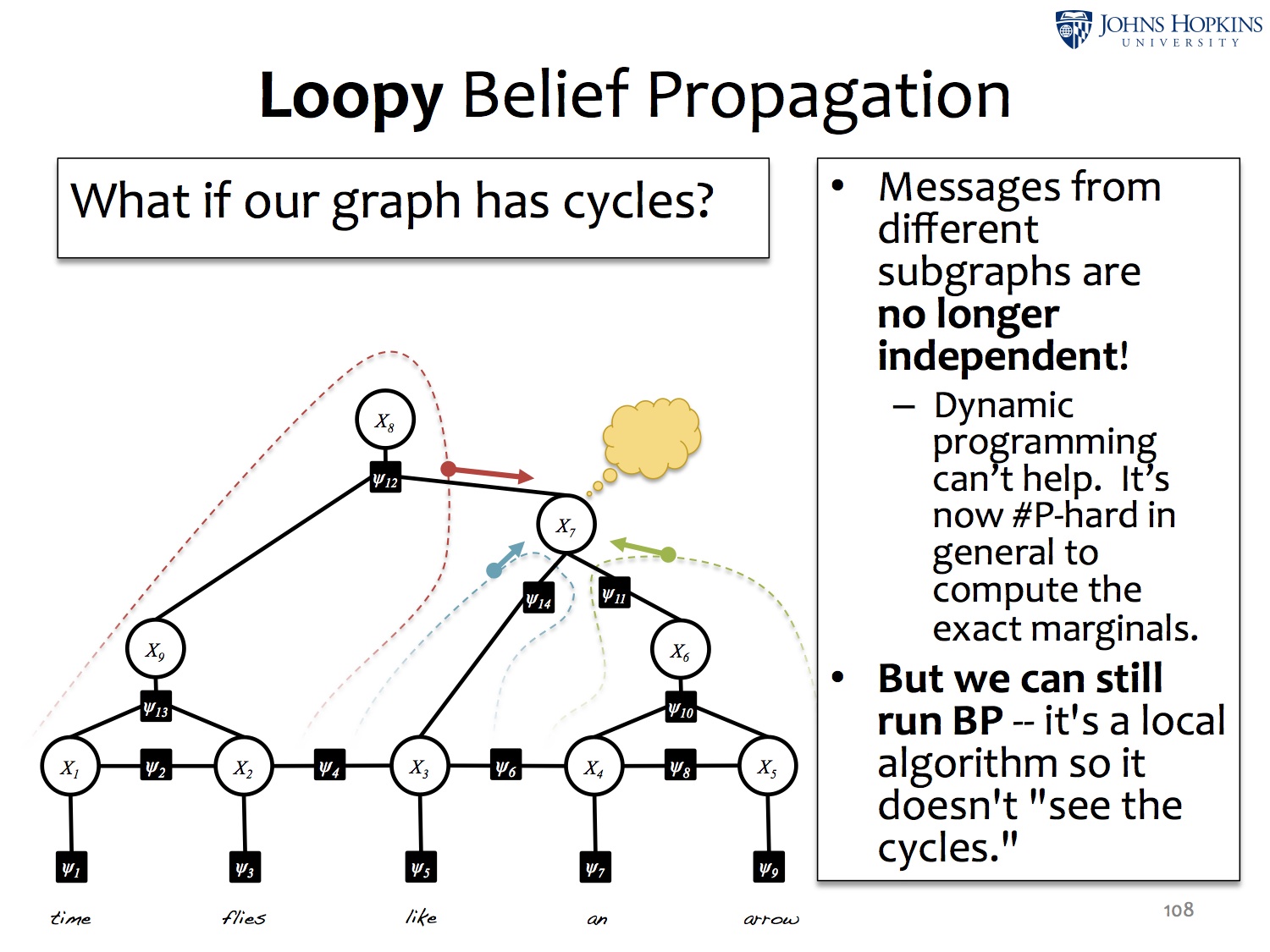

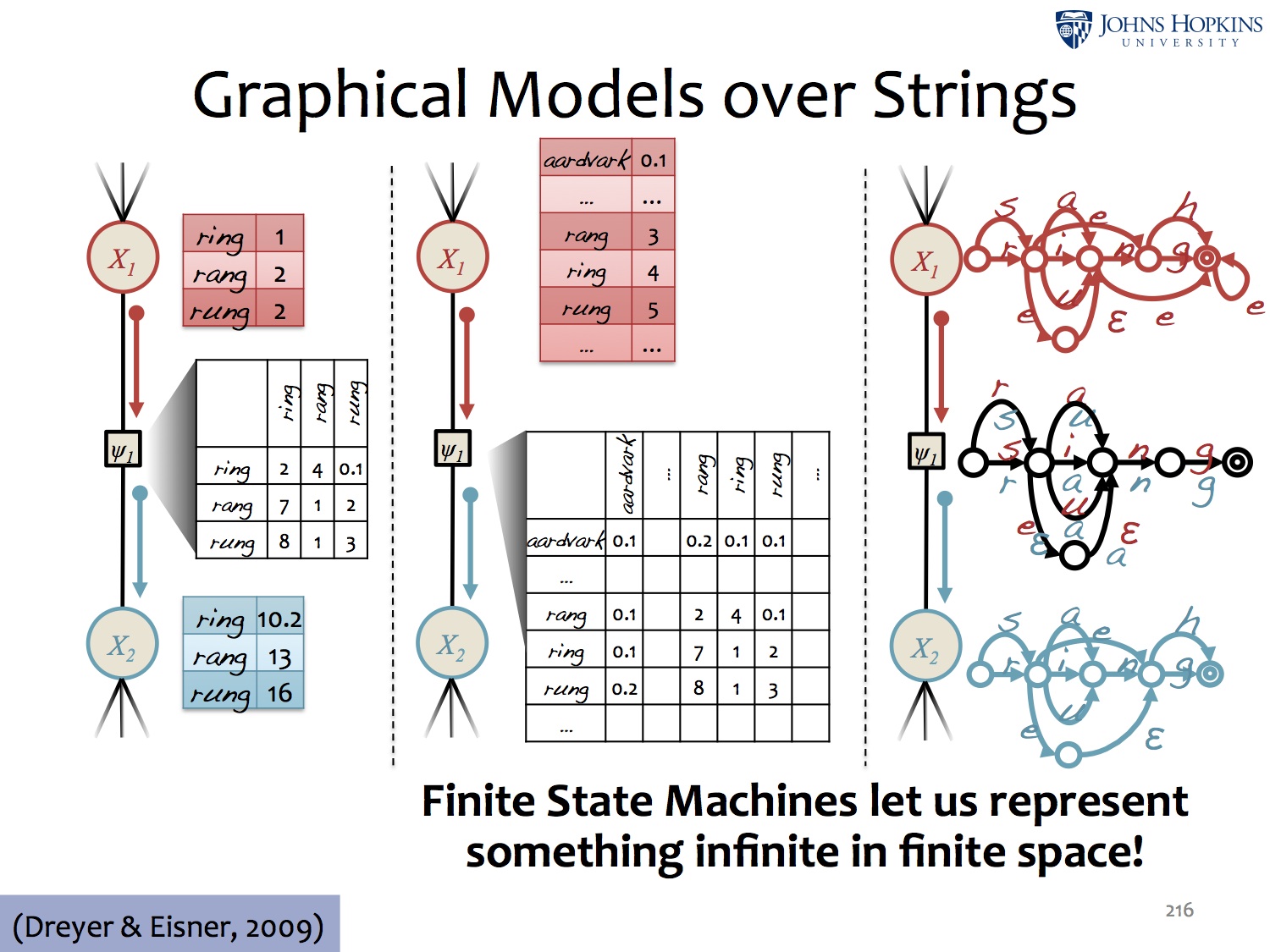

Statistical natural language processing relies on probabilistic models of linguistic structure. More complex models can help capture our intuitions about language, by adding linguistically meaningful interactions and latent variables. However, inference and learning in the models we want often poses a serious computational challenge. Belief propagation (BP) and its variants provide an attractive approximate solution, especially using recent training methods. These approaches can handle joint models of interacting components, are computationally efficient, and have extended the state-of-the-art on a number of common NLP tasks, including dependency parsing, modeling of morphological paradigms, CCG parsing, phrase extraction, semantic role labeling, and information extraction (Smith and Eisner, 2008, Dreyer and Eisner, 2009, Auli and Lopez, 2011, Burkett and Klein, 2012, Naradowsky et al., 2012, Stoyanov and Eisner, 2012).

This tutorial delves into BP with an emphasis on recent advances that enable state-of-the-art performance in a variety of tasks. Our goal is to elucidate how these approaches can easily be applied to new problems. We also cover the theory underlying them. Our target audience is researchers in human language technologies; we do not assume familarity with BP. In the first three sections, we discuss applications of BP to NLP problems, the basics of modeling with factor graphs and message passing, and the theoretical underpinnings of “what BP is doing” and how it relates to other variational inference techniques. In the second three sections, we cover key extensions to the standard BP algorithm to enable modeling of linguistic structure, efficient inference, and approximation-aware training. We survey a variety of software tools and introduce a new software framework that incorporates many of the modern approaches covered in this tutorial.

Outline

The links below are for the per-section slides. Included are the extra Appendix slides for sections 2 and 3.

- Probabilistic Modeling (.pptx)

- Intro: Modeling with factor graphs

- Constituency and dependency parsing

- Joint CCG Parsing and supertagging

- Transliteration; Morphological paradigms

- Alignment; Phrase extraction

- Congressional voting

- Joint models for NLP; Semantic role labeling; Targeted sentiment

- Variable-centric view of the world

- Belief Propagation Basics (.pptx)

- Messages and beliefs

- Sum-product algorithm

- Relation to the forward-backward and Viterbi algorithms

- BP as dynamic programming

- Acyclic vs. loopy graphs

- Appendix: Tensor Notation for BP (.pptx)

- Intuitions: Methods like BP and in what sense they work (.pptx)

- Sum-product, max-product, and deterministic annealing

- From arc consistency to BP

- From Gibbs sampling to particle BP to BP

- Convergence properties

- Global/local consistency of beliefs

- Bethe free energy

- Appendix: BP as an Optimization Algorithm (.pptx)

- Incorporating Structure into Factors and Variables (.pptx)

- Embedding dynamic programs (e.g. inside-outside) within factors

- String-valued variables

- BP for the coordination of marginal inference algorithms

- Message approximation and scheduling (.pptx)

- Computing fewer messages by choosing a better update order

- Pruning messages

- Expectation Propagation

- Approximation-aware Training (.pptx)

- Empirical risk minimization under approximations (ERMA)

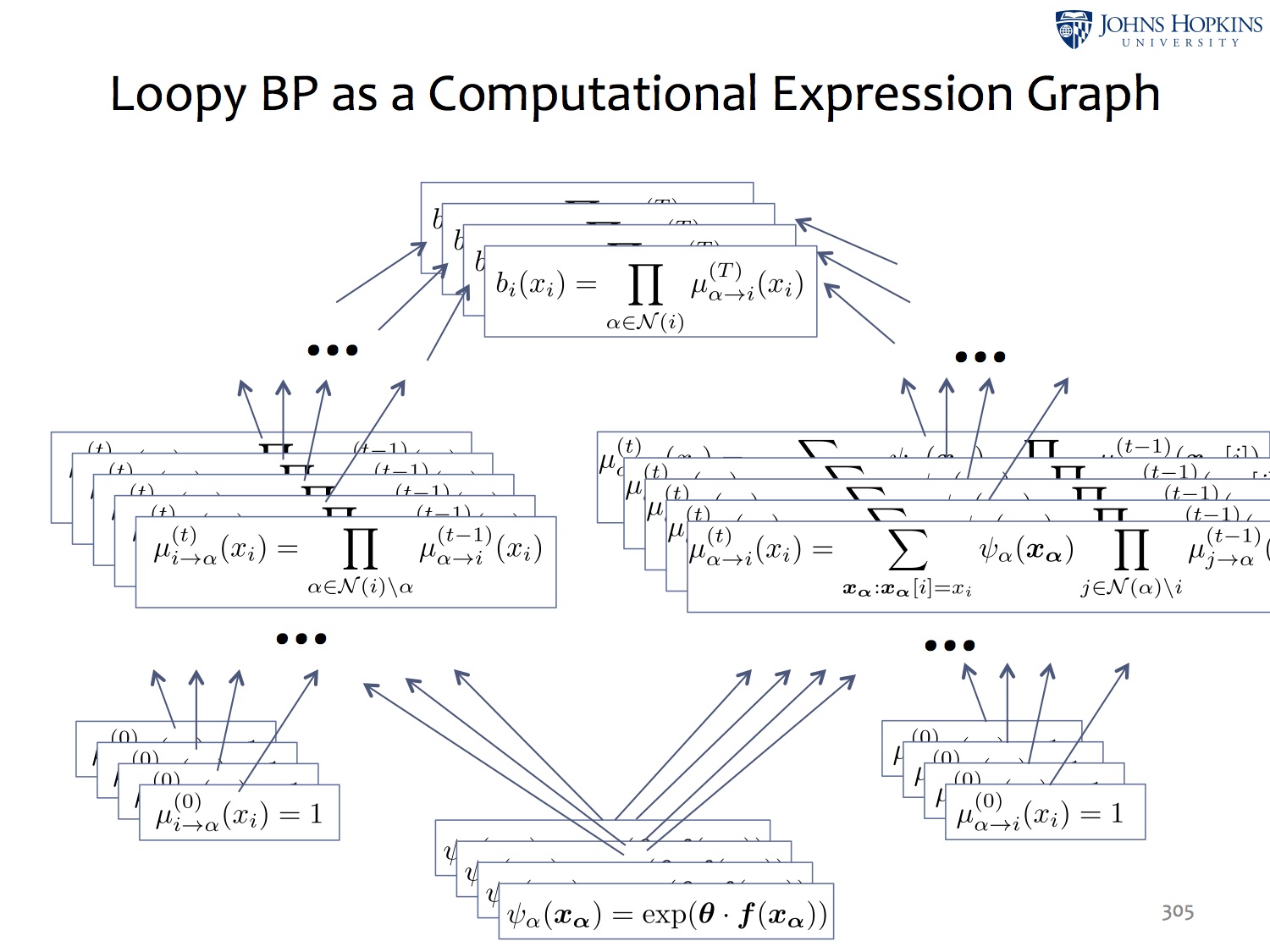

- BP as a computational expression graph

- Automatic differentiation (AD)

- Software (.pptx)