Because they specialize in short-distance flights, most commercial VTOL designs are small and carry only one pilot, as this table shows. This poses a potential risk: if workload reaches critical levels, there is no redundancy in the cockpit. In addition, the VTOL design introduces challenges that other aircraft do not. For example, traditional pilots never practice transitioning from fixed-wing mode to rotorcraft mode and back. A system is therefore needed to monitor and analyze the pilot's workload during VTOL flight.

To address this, we built a multimodal machine learning (ML) model that estimates pilot workload across different flight operations. Our results suggest that pilot workload monitoring is viable and can improve the understanding of VTOL operations for safety and efficiency.

Flight Simulator

We simulated the flight dynamics of our passenger VTOL in Isaac Sim using the PX4 flight controller. The results of our simulation are shown here.

User Study

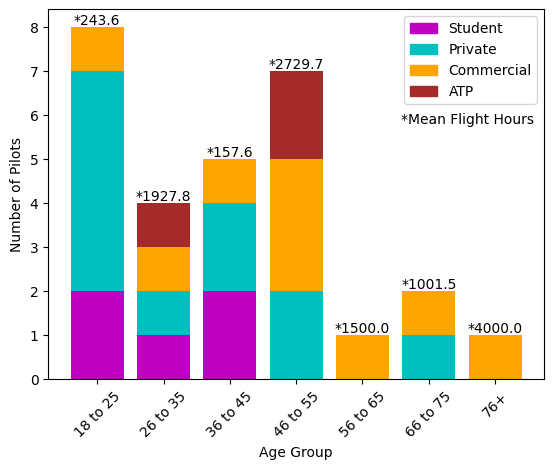

We recruited 28 pilots to fly in a VTOL simulator. Participant profile:

- At least one solo flight

- A range of certification levels

- 5 student pilots; the remaining 23 were more experienced pilots

- 10 to 10,000 hours of flight time

- No prior VTOL experience

- 2 helicopter pilots; the rest were fixed-wing pilots

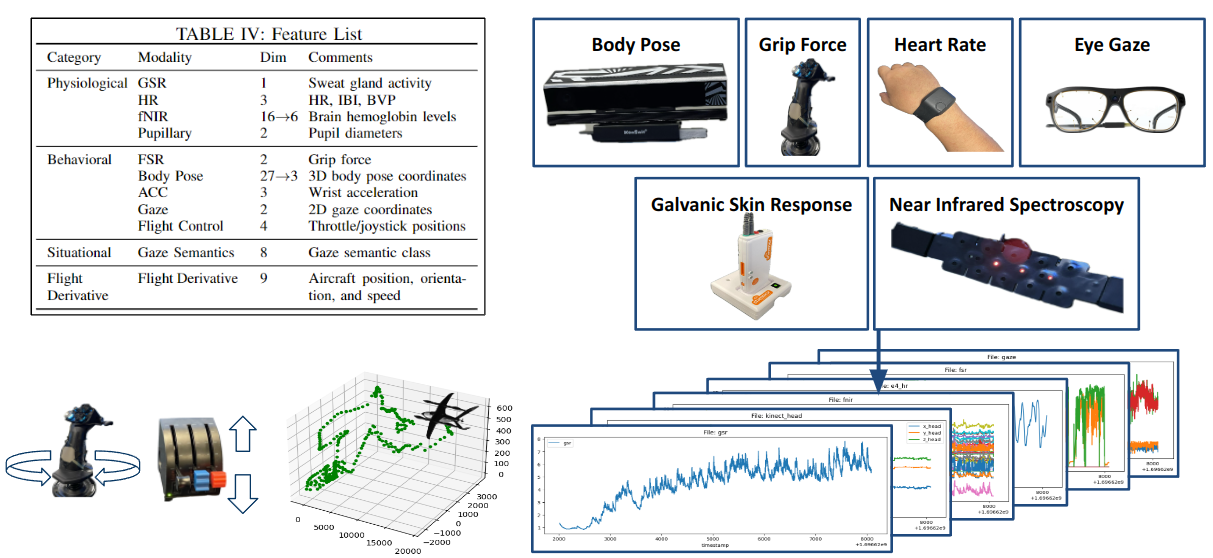

Collected Data

- Xbox Kinect (body pose)

- Force-sensitive resistors (grip strength)

- GSR sensor (galvanic skin response, i.e., sweat gland activity)

- E4 wristband (heart rate)

- Tobii Pro glasses (eye tracking)

- fNIRS headband (brain activity)

Feature Extraction

Eye Gaze Projection

We mapped the gaze position frame by frame from the eye-tracking glasses' video onto the screen recording of the simulator.

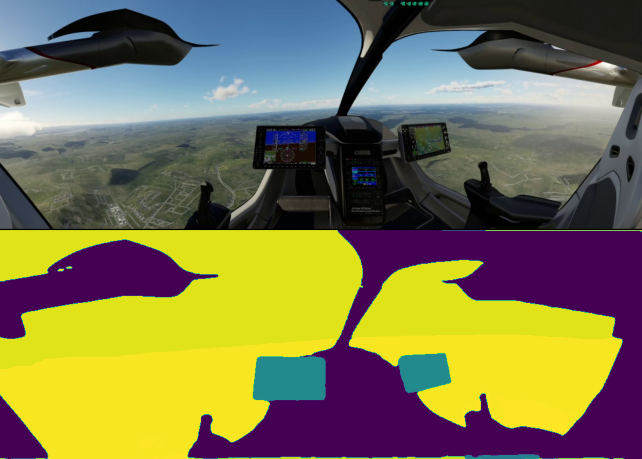
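The page does not say how the frame-by-frame mapping was computed. One common approach, shown here purely as an assumption, is to fit a planar homography between each scene-camera frame of the glasses and the screen recording from at least four point correspondences (for example, the four corners of the simulator screen, or matched image features), then transform the gaze point with it. The function names `estimate_homography` and `project_gaze` and the corner coordinates are hypothetical; this is a minimal sketch, not the project's implementation.

```python
import numpy as np


def estimate_homography(src_pts, dst_pts):
    """Fit a 3x3 homography H (dst ~ H @ src) with the direct linear transform.

    src_pts, dst_pts: arrays of shape (N, 2), N >= 4, holding matching (x, y)
    points in the glasses' scene-camera frame and in the screen recording.
    """
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The (least-squares) solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary overall scale


def project_gaze(H, gaze_xy):
    """Map one gaze point from the scene-camera frame into screen-recording pixels."""
    p = H @ np.array([gaze_xy[0], gaze_xy[1], 1.0])
    return p[0] / p[2], p[1] / p[2]


# Hypothetical example: the simulator screen's corners as seen in one glasses frame,
# matched to the corners of a 1920x1080 screen recording.
corners_in_glasses = [(100, 50), (500, 60), (510, 350), (90, 340)]
corners_on_screen = [(0, 0), (1920, 0), (1920, 1080), (0, 1080)]
H = estimate_homography(corners_in_glasses, corners_on_screen)
print(project_gaze(H, (300, 200)))  # gaze location on the screen recording
```

With real video, pixel coordinates are usually normalized before the DLT and mismatched features are rejected with RANSAC; OpenCV's `cv2.findHomography` and `cv2.perspectiveTransform` cover both steps.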

Semantic Segmentation

We used the DiNAT (Dilated Neighborhood Attention Transformer) model pre-trained on the COCO dataset.

Semantic Gaze Annotation

Combining the two previous results, we annotated each pilot's gaze on the segmented frames. We also assigned priorities to semantic class groups so that, when the gaze falls on multiple objects, we can weight them differently and infer which object the pilot was paying more attention to.

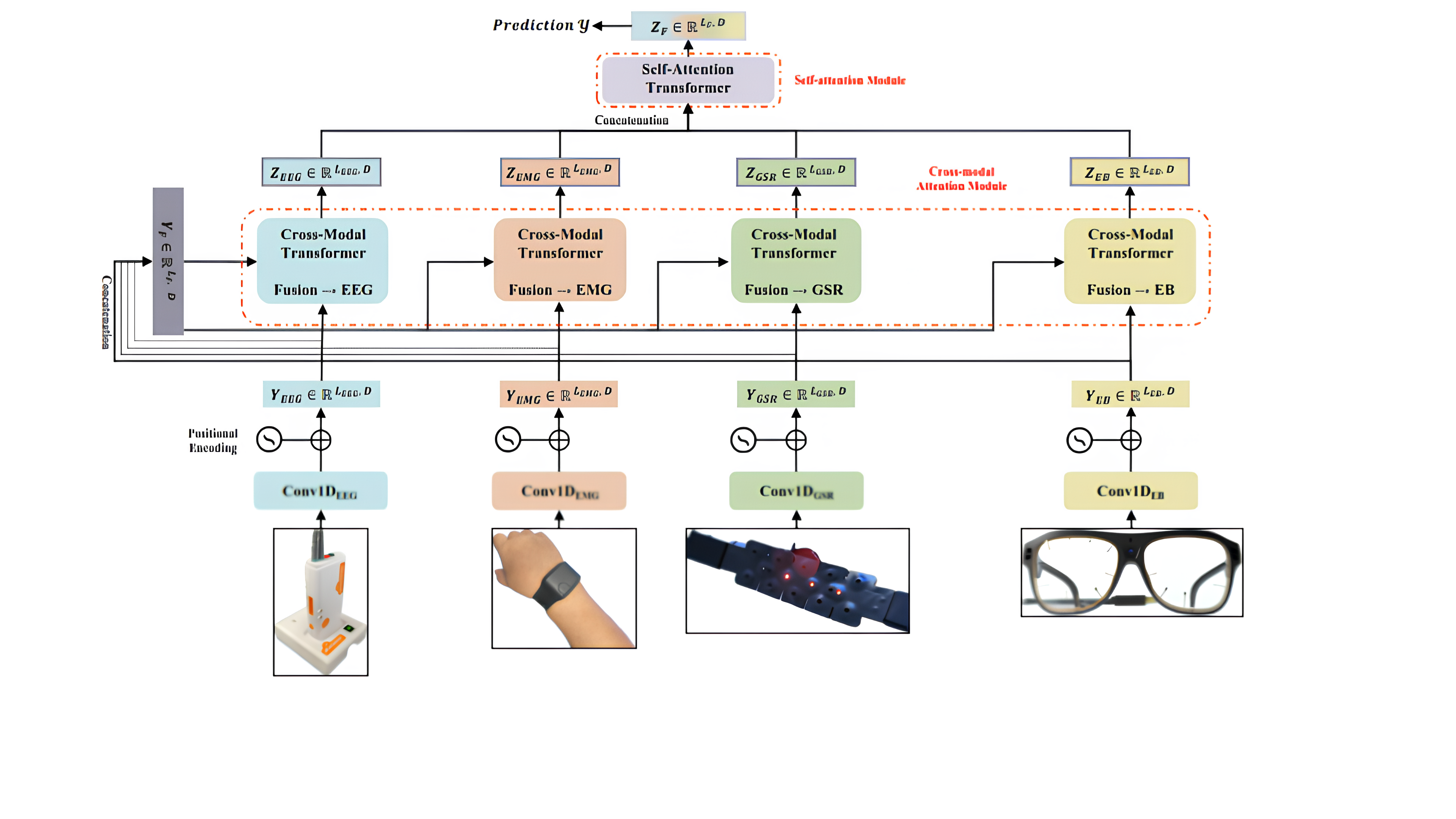
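The prioritization step can be illustrated with a small sketch. It assumes the segmentation output is an integer label map aligned with the screen recording, treats a circular region around the projected gaze point as "under the gaze", and scores each class group by its pixel count times a priority weight. The function `annotate_gaze`, the `PRIORITY` table, the group names, and the scoring rule are all hypothetical; the actual groups, weights, and rule used in the study are not given here.

```python
import numpy as np

# Hypothetical priority weights per semantic class group (higher = more likely to be
# what the pilot is attending to); not the values used in the study.
PRIORITY = {"display": 3.0, "sky": 0.5}


def annotate_gaze(label_map, class_names, gaze_xy, radius, priority, default_weight=1.0):
    """Return the class group the pilot most likely attended to, or None.

    label_map:   (H, W) integer array of class ids from the segmentation model
    class_names: sequence mapping class id -> class-group name
    gaze_xy:     gaze point (x, y) in screen-recording pixels
    radius:      radius in pixels of the region treated as "under the gaze"
    """
    h, w = label_map.shape
    gx, gy = gaze_xy
    yy, xx = np.mgrid[0:h, 0:w]  # row (y) and column (x) index of every pixel
    inside = (xx - gx) ** 2 + (yy - gy) ** 2 <= radius ** 2
    ids, counts = np.unique(label_map[inside], return_counts=True)
    best_name, best_score = None, float("-inf")
    for class_id, count in zip(ids, counts):
        name = class_names[int(class_id)]
        # Weight the number of pixels this class covers under the gaze by its group priority.
        score = priority.get(name, default_weight) * int(count)
        if score > best_score:
            best_name, best_score = name, score
    return best_name  # None if the gaze region contains no pixels (e.g. off-screen)
```

With equal weights the class covering more of the gaze region wins; the priority table lets a small but important object, such as an instrument display, outrank a large background region. A stricter alternative is a hard ordering that always picks the highest-priority group present.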

ML Pipeline

- Inputs
  - Features extracted for each task group: mean, min, max, and z-scores of each non-gaze modality, plus gaze data

- Output
  - Low/medium/high workload classification, using the surveyed mental demand ratings as ground truth
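The feature and label construction above can be sketched as follows. The exact definitions are not given on this page, so this is a minimal sketch under stated assumptions: each non-gaze modality is summarized per task group by its mean, min, and max, plus the segment's mean z-score relative to the same participant's whole session (to remove individual baselines); the NASA-TLX mental demand rating is taken to be on a 0 to 100 scale and split at hypothetical cutoffs. All function names and cutoff values are illustrative.

```python
import numpy as np


def task_features(segment, session):
    """Mean/min/max of one non-gaze modality over a task-group segment, plus the
    segment's mean z-score relative to the same participant's whole session."""
    seg = np.asarray(segment, dtype=float)
    ref = np.asarray(session, dtype=float)
    std = ref.std()
    z = (seg - ref.mean()) / std if std > 0 else np.zeros_like(seg)
    return [seg.mean(), seg.min(), seg.max(), z.mean()]


def feature_vector(segments, sessions):
    """Concatenate task_features for every modality in a fixed (sorted) order.

    segments, sessions: dicts keyed by modality name, e.g. "heart_rate", "gsr".
    """
    vec = []
    for modality in sorted(segments):
        vec.extend(task_features(segments[modality], sessions[modality]))
    return np.array(vec)


def demand_to_class(mental_demand, low_cut=33.0, high_cut=67.0):
    """Bin a NASA-TLX mental demand rating (assumed 0-100) into low/medium/high.
    The cutoffs are hypothetical placeholders, not the study's thresholds."""
    if mental_demand < low_cut:
        return "low"
    if mental_demand < high_cut:
        return "medium"
    return "high"
```

Each task group then yields one feature vector (with gaze features appended) and one label from `demand_to_class`, which together form a training example for the classifier.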

Our model achieved 65% classification accuracy against the ground-truth mental demand ratings from the NASA-TLX surveys completed by the participants.

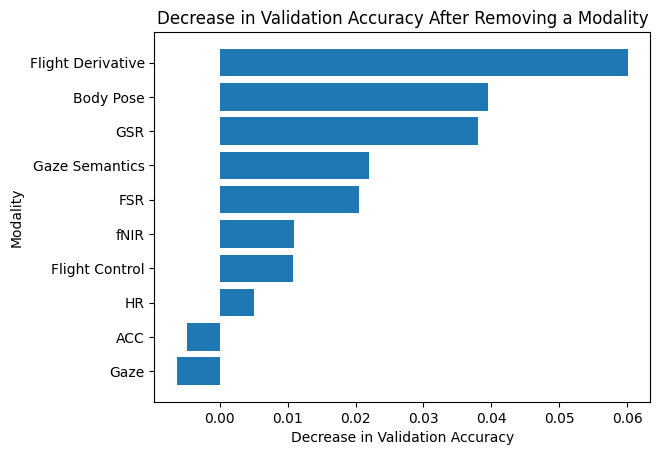

Ablation

Publications

IEEE Style:

J. H. Park, L. Chen, I. Higgins, M. Mousaei, Z. Zheng, S. Mehrotra, K. Salubre, S. Willits, B. Levedahl, T. Buker, E. Xing, T. Misu, S. Scherer, and J. Oh, "How is the Pilot Doing: VTOL Pilot Workload Estimation by Multimodal Machine Learning on Psycho-physiological Signals," in 2024 33rd IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), 2024.