Simulated and Unsupervised Learning for the Generation of Realistic Imaging Sonar Datasets

In this project I present a novel Simulated and Unsupervised (S+U) learning approach for the generation of realistic imaging sonar datasets.

Introduction

Autonomous Underwater Vehicles (AUVs) rely on imaging sonars for underwater localization and mapping, as these sensors perceive broader areas and obtain more reliable information than optical sensors in underwater environments. However, sonar data collection is time-consuming and sometimes dangerous. In addition, underwater simulators have yet to provide realistic simulation data. To enable underwater tasks, in particular to train learning-based methods with large datasets, I propose a SimGAN-based baseline that transfers a simulated imaging sonar dataset to the domain of an unpaired real-world sonar dataset. In particular, I integrate a self-regularization loss and a cycle consistency loss into the refiner and introduce a masked generative adversarial network (GAN) loss into the discriminator. The masks are generated by a smallest-of cell-averaging constant false alarm rate (SOCA-CFAR) detector. The experimental results demonstrate that the proposed method provides more realistic imaging sonar datasets than existing state-of-the-art GAN methods.

Baseline

The baseline is illustrated in the following figure. The refiner's task is to transform the noise distribution of the simulated inputs to the domain of the real-world dataset. The discriminator, on the other hand, distinguishes real sonar images from the refined synthetic outputs.

Given an unpaired dataset with synthetic sonar data from the HoloOcean underwater simulator and real-world data from our test tank, the goal is to learn a mapping function between the synthetic and real datasets. The formulation involves the simulated inputs and the unpaired real data, their data distributions, and two mappings, one in each direction between the two domains. For the refiner, I use a 9-block ResNet architecture. For the discriminator, I use a basic 3-layer PatchGAN classifier with masked inputs whose masks are obtained from a SOCA-CFAR detector. Each domain has foreground masks and background masks. Notably, the background masks are the complement of the foreground masks.
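To illustrate how foreground and background masks could be obtained, here is a minimal NumPy sketch of a smallest-of cell-averaging CFAR detector applied along one image axis. The function names, the window sizes (`train`, `guard`), the threshold factor `scale`, and the choice of axis are assumptions for illustration, not the settings used in this project.

```python
import numpy as np


def soca_cfar_1d(signal, train=8, guard=2, scale=3.0):
    """Smallest-of cell-averaging CFAR along a 1D intensity profile.

    All parameter values are illustrative assumptions.
    Cells too close to either end (no full window) stay background.
    """
    signal = np.asarray(signal, dtype=float)
    n = signal.size
    mask = np.zeros(n, dtype=bool)
    for i in range(train + guard, n - train - guard):
        # Training cells before and after the cell under test,
        # separated from it by `guard` cells on each side.
        lead = signal[i - guard - train : i - guard]
        lag = signal[i + guard + 1 : i + guard + 1 + train]
        # "Smallest of": use the lower of the two noise estimates.
        noise = min(lead.mean(), lag.mean())
        mask[i] = signal[i] > scale * noise
    return mask


def soca_cfar_image(image, axis=0, **kwargs):
    """Apply the 1D detector to every line of a 2D image along `axis`."""
    return np.apply_along_axis(soca_cfar_1d, axis, np.asarray(image), **kwargs)


# Toy example: flat background with one bright return.
image = np.ones((40, 5))
image[20, 3] = 10.0
foreground = soca_cfar_image(image, axis=0)
background = ~foreground  # background mask is the complement
```

In this toy example only the bright cell is marked as foreground; real sonar images would need the window sizes and threshold tuned to the sensor's noise.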

In this project I modify the original CycleGAN codebase (https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix). The overall objective of the networks can be defined as:

I aim to solve:

The overall training loss consists of three parts: masked adversarial loss, cycle consistency loss, and self-regularization loss. The masked adversarial loss can be represented as:

The cycle consistency loss can be expressed as:

The identity loss encourages each mapping to leave its input unchanged when that input already belongs to the target domain. It is represented as:

The self-regularization loss is expressed as:
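For reference, one plausible way to write the loss terms described in this section is sketched below. The notation is an assumption, borrowed from the standard CycleGAN and SimGAN formulations rather than taken from this project: simulated images $x$, real images $y$, refiner $G$ (simulated to real), reverse mapping $F$, discriminators $D_X$ and $D_Y$, masks $M_x$ and $M_y$ applied element-wise, and weights $\lambda_{\text{cyc}}$, $\lambda_{\text{idt}}$, $\lambda_{\text{reg}}$. How the foreground and background masks are combined inside the adversarial term is not specified here, so the masked term shows a single mask only.

```latex
% Assumed notation; not the project's exact formulation.
\begin{align}
% Masked adversarial loss (the discriminator sees masked inputs).
\mathcal{L}_{\text{GAN}}(G, D_Y) &= \mathbb{E}_{y}\big[\log D_Y(M_y \odot y)\big]
  + \mathbb{E}_{x}\big[\log\big(1 - D_Y(M_x \odot G(x))\big)\big] \\
% Cycle consistency loss (standard CycleGAN form).
\mathcal{L}_{\text{cyc}}(G, F) &= \mathbb{E}_{x}\big[\lVert F(G(x)) - x \rVert_1\big]
  + \mathbb{E}_{y}\big[\lVert G(F(y)) - y \rVert_1\big] \\
% Identity loss (standard CycleGAN form).
\mathcal{L}_{\text{idt}}(G, F) &= \mathbb{E}_{y}\big[\lVert G(y) - y \rVert_1\big]
  + \mathbb{E}_{x}\big[\lVert F(x) - x \rVert_1\big] \\
% Self-regularization loss (SimGAN form with an identity feature map).
\mathcal{L}_{\text{reg}}(G) &= \mathbb{E}_{x}\big[\lVert G(x) - x \rVert_1\big] \\
% Overall objective; L_GAN(F, D_X) is defined symmetrically.
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\text{GAN}}(G, D_Y) + \mathcal{L}_{\text{GAN}}(F, D_X)
  + \lambda_{\text{cyc}}\mathcal{L}_{\text{cyc}}
  + \lambda_{\text{idt}}\mathcal{L}_{\text{idt}}
  + \lambda_{\text{reg}}\mathcal{L}_{\text{reg}} \\
G^{*}, F^{*} &= \arg\min_{G, F}\,\max_{D_X, D_Y}\; \mathcal{L}(G, F, D_X, D_Y)
\end{align}
```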

Dataset

Our unpaired dataset comes from 2 sources. For the synthetic data, HoloOcean, a realistic underwater simulator, provides unlimited access to simulated imaging sonar data. I collected the training and testing datasets from 2 different simulated underwater scenarios (a submarine and a sunken plane). The following figures show their 3D models. (Figure source: https://github.com/rpl-cmu/neusis)

I also collected a real-world dataset in our test water tank located in Newell-Simon Hall, using a Bluefin Hovering Autonomous Underwater Vehicle equipped with a Sound Metrics DIDSON 300m imaging sonar. The two datasets are used for unsupervised training and for the post-training experiments. The following figures show the structures deployed in the water tank when collecting the dataset.

Experiment Results

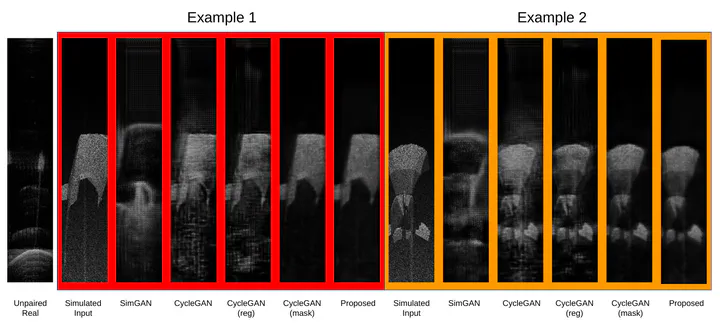

To measure the performance of the proposed baseline, I ran an ablation study over multiple models, including the original SimGAN, CycleGAN, CycleGAN with only the self-regularization loss, and CycleGAN with the masked GAN loss. I trained these networks on our collected datasets and first compared the generated images qualitatively. To quantify the performance, I created paired datasets from the sonar images generated by each model and the foreground masks of the original simulated dataset. I then trained segmentation models on these paired datasets and tested them on a real-world dataset. The predicted masks are compared to the ground-truth masks of the real-world dataset using the intersection over union (IoU) metric. A higher IoU indicates that the generated training images are closer to the real-world domain, and hence a better transformation performance of the proposed baseline relative to the other network architectures.
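The IoU comparison between a predicted mask and a ground-truth mask can be sketched as follows. This is a minimal NumPy illustration, not the evaluation script used for the reported numbers; the function name `mask_iou` and the convention of returning 1.0 when both masks are empty are assumptions.

```python
import numpy as np


def mask_iou(pred, target):
    """Intersection over union of two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Assumed convention: two empty masks agree perfectly.
        return 1.0
    intersection = np.logical_and(pred, target).sum()
    return float(intersection) / float(union)


# Toy example: the masks overlap in one pixel and cover three in total.
pred = np.array([[1, 1, 0], [0, 0, 0]])
target = np.array([[0, 1, 1], [0, 0, 0]])
score = mask_iou(pred, target)  # 1 / 3
```

A dataset-level score would typically average this value over all test images, although other aggregation choices are possible.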

The following figures show the testing results from the different models. The proposed method not only retains the objects of the simulated input but also reduces the vertical noise, making the images more realistic and closer to the unpaired real-world dataset.

The following figures give the segmentation results of conditional GANs (pix2pix) trained on the datasets generated by the different models. In these results, the proposed model yields better masks than the other 4 models.

The following table shows the quantitative segmentation results. The proposed method gives the best IoU score among all baselines.

| Model | IoU |
|---|---|
| SimGAN | 0.001 |
| CycleGAN | 0.702 |
| CycleGAN (reg) | 0.679 |
| Proposed | 0.734 |

Conclusions and Future Work

The experimental results demonstrate the potential capability of our proposed method to generate realistic sonar datasets from simulation.

The future plans are as follows:

- I will try to generate larger datasets from both the simulator and real-world scenarios. Currently, the datasets only contain about 100 sonar images. Larger datasets covering different scenarios should make the model more robust to sonar images from different underwater environments.

- I will try to leverage Stable Diffusion to better transform our simulated images to the real-world domain.

- Instead of using data from a single type of sonar, I will try to collect imaging sonar datasets from different imaging sonars, for example the Blueprint M1200d imaging sonar, and even from other types of sonar, such as side-scan sonar (SSS).

- More downstream applications can serve as metrics for evaluating the proposed baseline, for example 3D NeRF reconstruction and learning-based feature matching of sonar images.