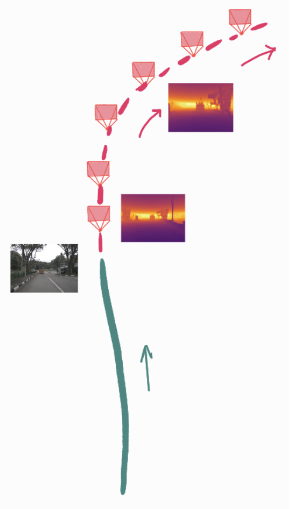

Figure 1

Panel labels: Historical LiDAR Sweeps (t = {-T, ..., 0}); Future 4D Occupancy (t = {1, ..., T}); Rendered Point Clouds (t = {1, ..., T}).

Panel labels: Historical LiDAR Sweeps; Future 4D Occupancy; Rendered Point Clouds; Groundtruth Point Clouds.

Panel labels: Groundtruth; SPFNet-U; S2Net-U; Raytracing; Ours (Point clouds); Ours (Occupancy).

Panel labels: Groundtruth; SPFNet-M; S2Net-M; Raytracing; Ours (Point clouds); Ours (Occupancy).

Panel labels: Groundtruth; ST3DCNN; Raytracing; Ours (Point clouds); Ours (Occupancy).

Panel labels: Future Occupancy; nuScenes LiDAR; KITTI LiDAR; ArgoVerse2.0 LiDAR.

Panel labels: Reference RGB frame at t = 0s; Novel-view Depth Synthesis.
