
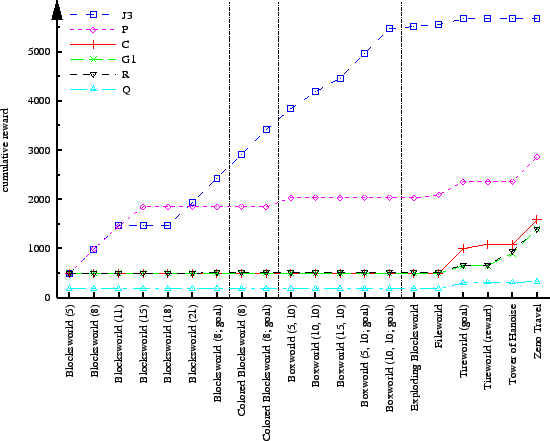

To display the results for each evaluation track, we plotted the cumulative reward achieved by each participant over the set of evaluation problems (reward is accumulated left to right). In the reward-based tracks, goal achievement was counted as 500 for problems without an explicitly specified goal reward. These plots highlight where one planner has an advantage over another (greater slope) as well as the total difference in score (height difference between the lines).
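The plotting procedure can be sketched in a few lines of Python. This is a minimal illustration, not the competition's scoring code. The helper names `problem_score` and `cumulative_curve` are hypothetical. The sketch also assumes that a problem's score is the mean over its runs of the reward earned in each run, with a goal bonus of 500 whenever the problem specifies no goal reward of its own.

```python
from itertools import accumulate
from typing import List, Optional, Tuple

# Reward credited for reaching the goal when a problem specifies none.
DEFAULT_GOAL_REWARD = 500.0


def problem_score(runs: List[Tuple[bool, float]],
                  goal_reward: Optional[float] = None) -> float:
    """Mean score over a non-empty list of runs (hypothetical helper).

    Each run is (reached_goal, accumulated_reward). Reaching the goal adds
    goal_reward, or DEFAULT_GOAL_REWARD if the problem specifies none.
    Averaging over runs is an assumption of this sketch.
    """
    bonus = DEFAULT_GOAL_REWARD if goal_reward is None else goal_reward
    totals = [reward + (bonus if reached else 0.0) for reached, reward in runs]
    return sum(totals) / len(totals)


def cumulative_curve(scores: List[float]) -> List[float]:
    """Running total of per-problem scores, accumulated left to right."""
    return list(accumulate(scores))


if __name__ == "__main__":
    # Hypothetical planner: goal reached in one of two runs on a goal-only problem.
    s1 = problem_score([(True, 0.0), (False, 0.0)])  # (500 + 0) / 2
    s2 = problem_score([(True, -20.0)], goal_reward=100.0)  # 100 - 20
    print(cumulative_curve([s1, s2]))  # [250.0, 330.0]
```

The rise of a curve over any stretch of problems is simply the sum of those problems' scores. A steeper segment therefore marks the problems on which one planner gained ground on another.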

Figure 7 displays the results in the Overall category. Two planners, J3 and P, scored substantially higher than the others, with the replanning algorithm J3 clearly dominating the field. J3 was crowned Overall winner, with P as runner-up. The figure also displays the results for the Conformant category, which had only one entrant, Q, the uncontested winner of the category.


Similar results are visible in the Goal-based track, displayed in Figure 8, in which J3 again comes out ahead, with P achieving runner-up status. Comparing Figures 7 and 8 reveals that J3's margin of victory over P, R, and G1 is smaller in the Goal-based category. This suggests that J3 is more sensitive to the rewards themselves, choosing cheaper paths when multiple paths to the goal are available. In the set of problems used in the competition, this distinction was not very significant, and the two graphs look very similar. However, a different set of test problems might have revealed the fundamental tradeoff between seeking to maximize reward and seeking to reach the goal with high probability. Future competitions could attempt to highlight this important issue.
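A small worked example, with hypothetical numbers not drawn from the competition, shows how the two objectives can disagree. Assume a problem with goal reward $R_G = 500$, and assume the expected score of a policy is its goal probability times $R_G$ minus its expected action cost. Policy A always reaches the goal at cost 600, for instance by relying on an expensive action. Policy B reaches the goal with probability 0.8 at expected cost 100:

$$
\begin{aligned}
\mathbb{E}[\text{score}_A] &= 1.0 \cdot 500 - 600 = -100,\\
\mathbb{E}[\text{score}_B] &= 0.8 \cdot 500 - 100 = 300.
\end{aligned}
$$

A goal-based evaluation ranks A above B (goal probability 1.0 versus 0.8), whereas a reward-based evaluation ranks B well above A.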

It is notable that J3's outstanding performance stems primarily from the early problems, the Blocksworld and Boxworld problems, which are amenable to replanning. J3 handled the later problems in the set less well than most other planners did.

Figure 9 displays the results for the Non-Blocks/Box category. Indeed, J3 performed much more poorly on the problems in this category, with Planner C taking the top spot. The runner-up spot was closely contested between planners R and G1, but G1 pulled ahead on the last problem to claim the honors. Planner P also performed nearly as well on this set.

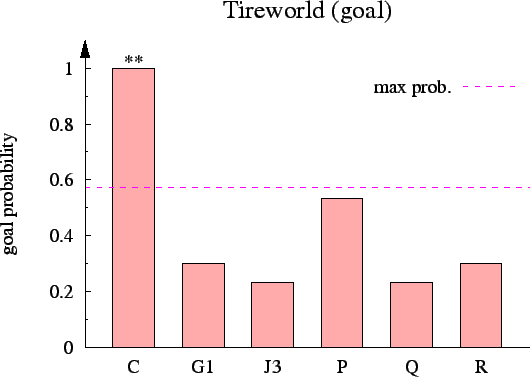

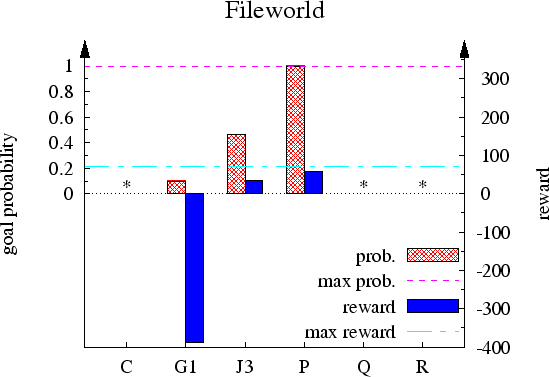

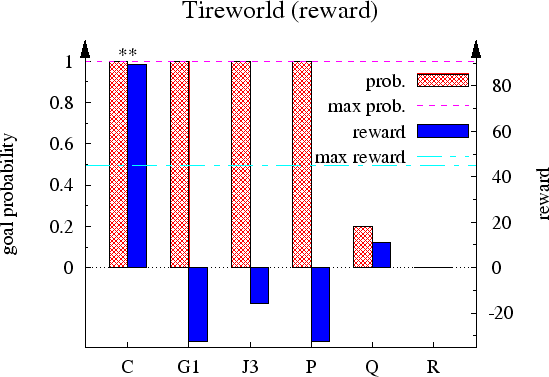

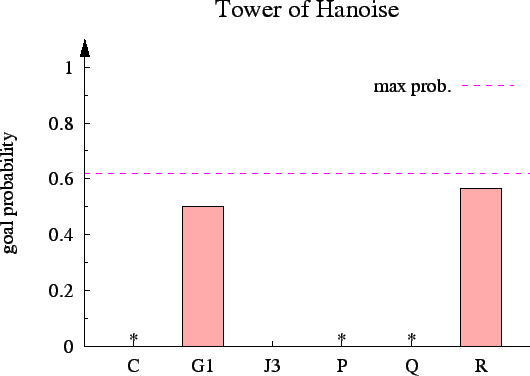

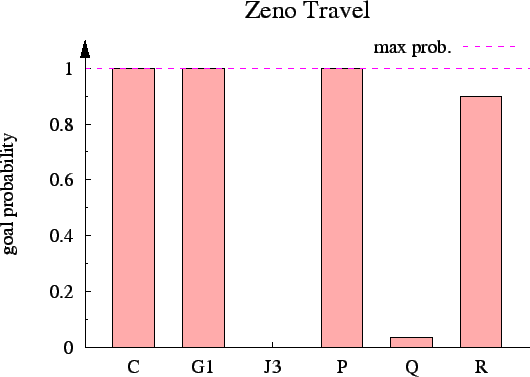

Figure 10 gives a more detailed view of the results in the Non-Blocks/Box category. The optimal score for each problem is indicated in the graphs.³ Note that Planner C's performance in the Tireworld domain is well above optimal, the result of a now-fixed bug in the competition server that allowed disabled actions to be executed. Planner P displayed outstanding performance on the Fileworld and goal-based Tireworld problems, but did not attempt to solve Tower of Hanoise and therefore fell behind G1 and R overall. Planner R used more time per round than Planner G1 in the Zeno Travel domain, which ultimately cost R second place because it completed only 27 of the 30 runs in this domain. Note that some planners received a negative score on the reward-oriented problems. We counted negative scores on individual problems as zero in the overall evaluation so as not to give an advantage to planners that did not even attempt to solve some problems. Planner Q was the only entrant (except, possibly, for C) to receive a positive score on the reward-based Tireworld problem. The planners with negative scores on this problem used the expensive "call-AAA" action to ensure that the goal was always reached.
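The zero-clipping rule amounts to a one-line aggregation. The following is a minimal Python sketch, not the competition's actual code. The `overall_score` helper and the problem names are hypothetical, and per-problem scores are assumed to be already computed. A problem the planner skipped is absent from its scores and so contributes nothing.

```python
from typing import Dict


def overall_score(per_problem: Dict[str, float]) -> float:
    """Sum per-problem scores, counting negative scores as zero.

    Clipping at zero means an attempt that loses reward is never worse
    than not attempting the problem at all.
    """
    return sum(max(0.0, score) for score in per_problem.values())


if __name__ == "__main__":
    # Hypothetical problem names and scores, for illustration only.
    attempted = {"tire-reward": -150.0, "zeno": 400.0}
    skipped = {"zeno": 400.0}  # same planner, reward-based problem not attempted
    print(overall_score(attempted), overall_score(skipped))  # 400.0 400.0
```

Without the clip, the planner that attempted the reward-based problem would total 250.0 and trail the one that skipped it, which is exactly the incentive the rule removes.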


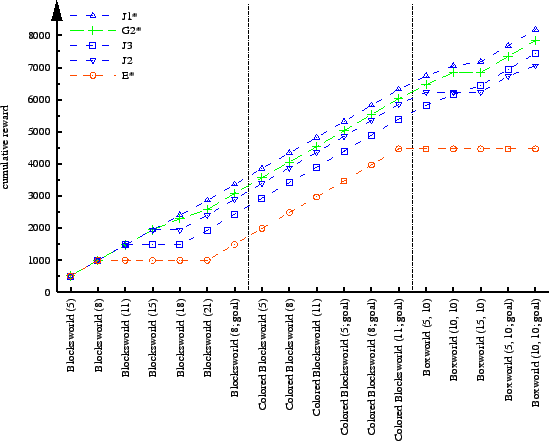

The results for the domain-specific planners are shown in Figure 11. The highest-scoring planners were J1 and G2, with the difference between them due primarily to the two largest Blocksworld problems, which J1 solved more effectively than G2. The performance of the five domain-specific planners on the colored Blocksworld problems is virtually indistinguishable. As mentioned earlier, grounding of the goal condition in the validator prevented us from using larger problem instances, which might otherwise have separated the planners in this domain.


The two planners that won the "domain specific" category were ineligible for the "no tuning" subcategory because they were hand-tuned for these domains. Thus, J3 and J2 took the top spots in the subcategory. It is interesting to note that J3 won in spite of being a general-purpose planner; it was not, in fact, created to be domain specific. It overtook J2 thanks to two small Boxworld problems that J3 solved but J2 did not.

Figure 12 summarizes the competition results in the six evaluation categories.


Håkan L. S. Younes