Although this work is aimed at giving a general comparison of

heuristics for belief space planning, we also present a comparison

of the best heuristics within CAltAlt and ![]() to some of the

other leading approaches to conformant planning. Table

7 lists several features of the evaluated

planners, such as their search space, their search direction,

whether they are conditional, the type of heuristics, and the

implementation language. Note, since each approach uses a different

planning representation (BDDs, GraphPlan, etc.), not all of which

even use heuristics, it is hard to get a standardized comparison of

heuristic effectiveness. Furthermore, not all of the planners use

PDDL-like input syntax; MBP, and KACMBP use AR encodings which may

give them an advantage in reducing the number of literals and

actions. We gave the MBP planners the same grounded and filtered

action descriptions that we used in CAltAlt and

to some of the

other leading approaches to conformant planning. Table

7 lists several features of the evaluated

planners, such as their search space, their search direction,

whether they are conditional, the type of heuristics, and the

implementation language. Note, since each approach uses a different

planning representation (BDDs, GraphPlan, etc.), not all of which

even use heuristics, it is hard to get a standardized comparison of

heuristic effectiveness. Furthermore, not all of the planners use

PDDL-like input syntax; MBP, and KACMBP use AR encodings which may

give them an advantage in reducing the number of literals and

actions. We gave the MBP planners the same grounded and filtered

action descriptions that we used in CAltAlt and ![]() . We also

tried, but do not report results, giving the MBP planners the full

set of ground actions without filtering irrelevant actions. It

appears that the MBP planners do not use any sort of action

pre-processing because performance was much worse with the full

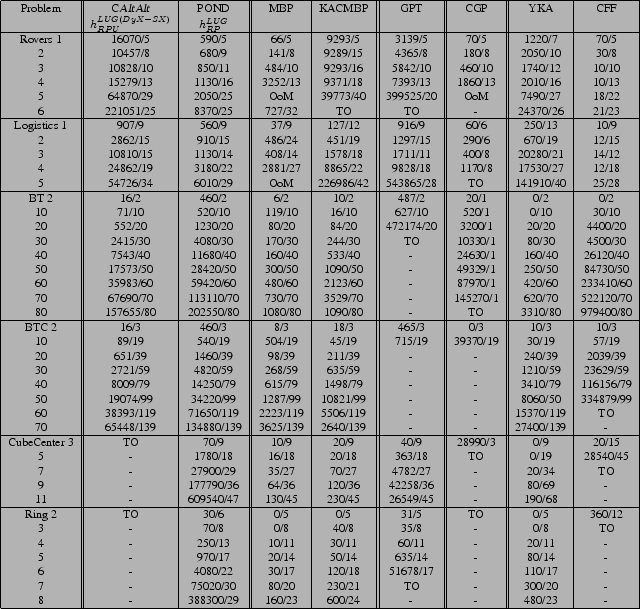

grounded set of actions. Nevertheless, Table 8 compares

MBP, KACMBP, GPT, CGP, YKA, and CFF with

. We also

tried, but do not report results, giving the MBP planners the full

set of ground actions without filtering irrelevant actions. It

appears that the MBP planners do not use any sort of action

pre-processing because performance was much worse with the full

grounded set of actions. Nevertheless, Table 8 compares

MBP, KACMBP, GPT, CGP, YKA, and CFF with

![]() in

CAltAlt and

in

CAltAlt and

![]() in

in ![]() with respect to run time and

plan length.

with respect to run time and

plan length.

|

MBP: The MBP planner uses a cardinality heuristic that in many

cases overestimates plan distances (as per our implementation with

![]() ). MBP uses regression search for conformant plans, but

progression search for conditional plans. It is interesting to note

that in the more difficult problem instances in the Rovers and

Logistics domains MBP and KACMBP tend to generate much longer plans

than the other planners. MBP does outperform

). MBP uses regression search for conformant plans, but

progression search for conditional plans. It is interesting to note

that in the more difficult problem instances in the Rovers and

Logistics domains MBP and KACMBP tend to generate much longer plans

than the other planners. MBP does outperform ![]() in some cases

but does not find solutions in certain instances (like Rovers 5),

most likely because of its heuristic. We note that KACMBP and MBP

are quite fast on the Cube Center and Ring domains, but have more

trouble on domains like Rovers and Logistics. This illustrates how a

heuristic modeling knowledge as opposed to reachability can do well

in domains where the challenge is uncertainty not reachability.

in some cases

but does not find solutions in certain instances (like Rovers 5),

most likely because of its heuristic. We note that KACMBP and MBP

are quite fast on the Cube Center and Ring domains, but have more

trouble on domains like Rovers and Logistics. This illustrates how a

heuristic modeling knowledge as opposed to reachability can do well

in domains where the challenge is uncertainty not reachability.

Optimal Planners: The optimal approaches (CGP and GPT) tend not

to scale as well, despite their good solutions. CGP has trouble

constructing its planning graphs as the parallel conformant plan

depth increases. CGP spends quite a bit of time computing mutexes,

which increases the planning cost as plan lengths increase. CGP

does much better on shallow and parallel domains like BT, where it

can find one step plans that dunk every package in parallel.

GPT performs progression search that is guided by a heuristic that measures the cost of fully observable plans in state space. GPT finds optimal serial plans but is not as effective when the size of the search space increases. GPT fails to scale with the search space because it becomes difficult to even compute its heuristic (due to a larger state space as well).

YKA: YKA, like CAltAlt is a regression planner, but the

search engine is very different and YKA uses a cardinality

heuristic. YKA performs well on all the domains because of its

search engine based on BDDs. We notice a difference in progression

and regression by comparing ![]() to YKA, similar to trends found

in the comparison between

to YKA, similar to trends found

in the comparison between ![]() and CAltAlt. Additionally, it

seems YKA has a stronger regression search engine than CAltAlt.

and CAltAlt. Additionally, it

seems YKA has a stronger regression search engine than CAltAlt.

![]() is able to do better than YKA in the Rovers and Logistics

domains, but it is unclear whether that it is because of the search

direction or heuristics.

is able to do better than YKA in the Rovers and Logistics

domains, but it is unclear whether that it is because of the search

direction or heuristics.

CFF: Conformant FF, a progression planner using a relaxed plan

similar to the ![]() relaxed plan, does very well in the Rovers and

Logistics domains because it uses the highly optimized FF search

engine as well as a cheap to compute relaxed plan heuristic.

However, CFF does not do as well in the BT, BTC, Cube Center, and

Ring problems because there are not as many literals that will be

entailed by a belief state. CFF relies on implicitly representing belief states in terms of

the literals that are entailed by the belief state, the initial belief state, and the action history.

When there are very few literals that can be entailed by the belief state,

reasoning about the belief state requires inference about the action history.

Another possible reason CFF suffers is our

encodings. The Cube Center and Ring domains are naturally expressed

with multi-valued state features, and in our transformation to

binary state features we describe the values that must hold but also

the values that must not hold. This is difficult for CFF because

the conditional effect antecedents contain several literals and

its heuristic is restricted to considering only one such literal.

It may be that CFF is choosing the wrong literal or simply not

enough literals to get effective heuristics. However in BT and BTC

where we used only one literal in effect antecedents CFF still

performs poorly.

relaxed plan, does very well in the Rovers and

Logistics domains because it uses the highly optimized FF search

engine as well as a cheap to compute relaxed plan heuristic.

However, CFF does not do as well in the BT, BTC, Cube Center, and

Ring problems because there are not as many literals that will be

entailed by a belief state. CFF relies on implicitly representing belief states in terms of

the literals that are entailed by the belief state, the initial belief state, and the action history.

When there are very few literals that can be entailed by the belief state,

reasoning about the belief state requires inference about the action history.

Another possible reason CFF suffers is our

encodings. The Cube Center and Ring domains are naturally expressed

with multi-valued state features, and in our transformation to

binary state features we describe the values that must hold but also

the values that must not hold. This is difficult for CFF because

the conditional effect antecedents contain several literals and

its heuristic is restricted to considering only one such literal.

It may be that CFF is choosing the wrong literal or simply not

enough literals to get effective heuristics. However in BT and BTC

where we used only one literal in effect antecedents CFF still

performs poorly.