Research Overview

-

Multimodal Emotion Analysis

Multimodal Emotion Analysis

Understanding human emotions is an important step to build natural human-computer interfaces. A wide range of applications is emerging in marketing, education, health care and entertainment that can benefit from the automatic emotion recognition. For example, service robots can improve the customer satisfaction by adjusting their reactions towards the customers’ emotion states; patients with mental diseases could be better treated with the virtual emotional companion.

Our researches focus on the emotion recognition problem with the two leading emotion models in cognitive science: the categorical emotion and the dimensional emotion. Since emotions are conveyed through multiple human behaviors, we develop our emotion recognition system using multiple modalities such as speech, verbal content, facial expression, and body movement. We explore the unimodal discriminative features, temporal dynamic of emotions and multimodal fusion strategies. Our goal is to build artificial intelligence with high emotional quotation. -

Multimedia Profiling for Historic Events

Multimedia Profiling for Historic Events

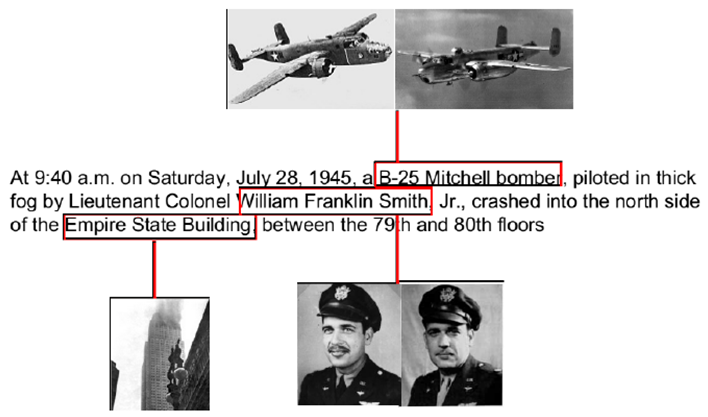

History event related knowledge is precious and multimedia such as imagery is a powerful medium that records diverse information about the event. In this work, we automatically construct an image/multimedia profile given a one sentence description of the historic event which contains where, when, who and what elements. Such a simple input requirement makes our solution easy to scale up and support a wide range of culture preservation and curation related applications ranging from wikipedia enrichment to history education. Furthermore, we automatically add explicit semantic information to image profiling by linking images in the profile with related phrases in the event description.

-

Image/Video Description with Natural Language

Image/Video Description with Natural Language

Generating natural language descriptions of visual content is an intriguing task. It has a wide range of applications such as text summarization for video preview, assisting blind people, or improving search quality for online videos. Different from images, a video consists of multimodalities, such as visual modality and aural modality. Visual modality provides rich information for understanding the semantic contents of the video such as motion, object, scene and so on from the visual perspecive, while aural modality provides rich information from sound and speech perspective. Our work focuses on the multi-modality fusion problem in the video description task.