LTI Students Earn Top Honors in Question-Answering Competition

Susie Cribbs | Thursday, November 10, 2016

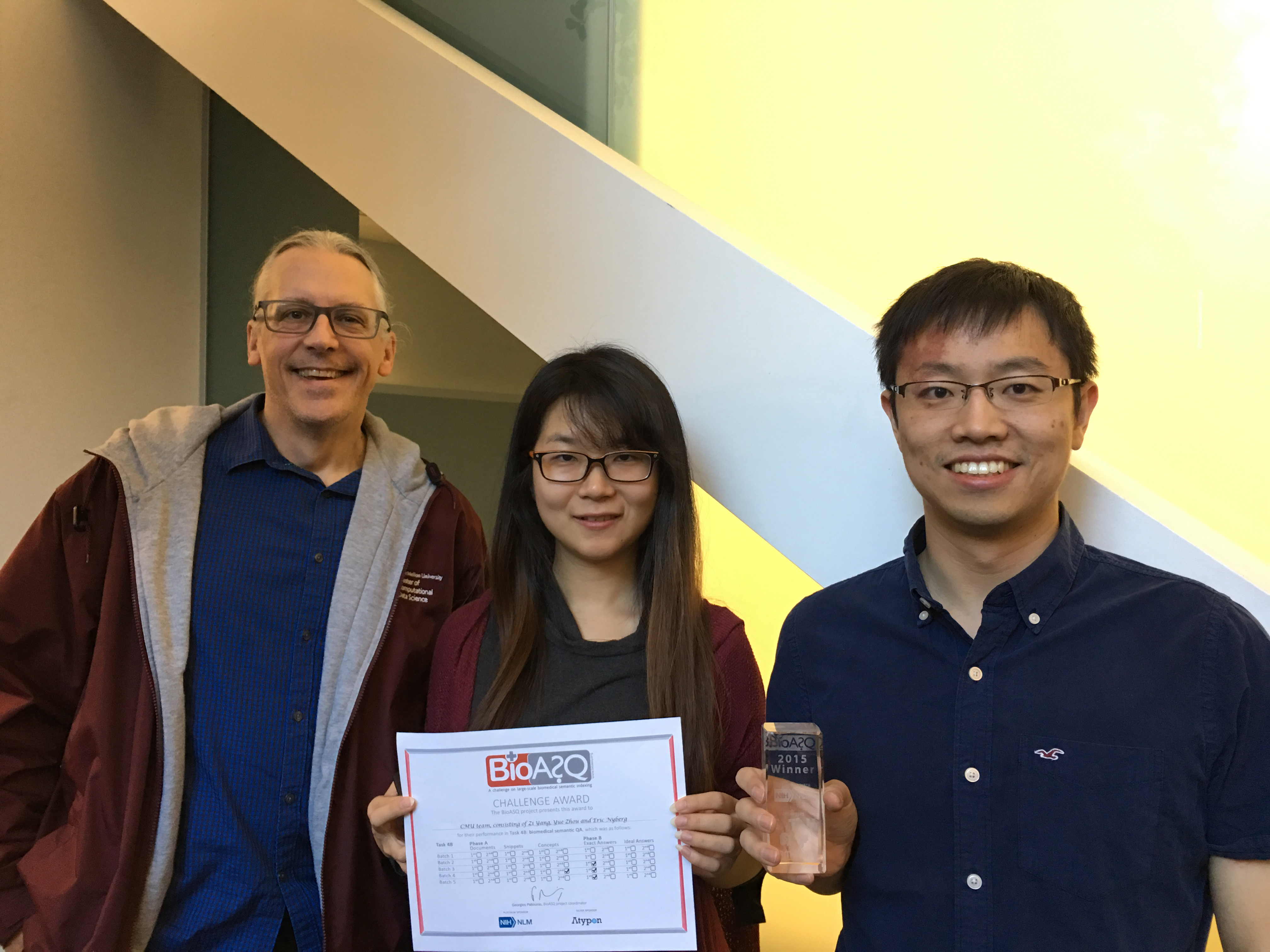

A team of students from the Language Technologies Institute (LTI), one of seven departments in Carnegie Mellon University's School of Computer Science, recently earned top honors for their performance in the BioASQ 2016 Biomedical Semantic Question Answering challenge.

Held annually, BioASQ seeks solutions to the information access problems that biomedical experts face by posing challenges in both biomedical semantic indexing and question answering. The CMU team, comprising Zi Yang, a student in the LTI's Ph.D. program; Yue Zhou, a student in the Master of Computational Data Science (MCDS) program; and Eric Nyberg, professor and director of the MCDS program, created a system that automatically responded to biomedical questions with relevant concepts, articles, snippets, RDF triples, exact answers and paragraph-sized summaries.

The test dataset was released in five batches, each containing approximately 100 questions, and separate winners were announced for each batch of each task. The LTI team took first place in the Exact answers category in batches three, four and five, the only batches in which it participated. The team also earned a second-place finish in the Concepts category in batch four.

This is the second year in a row that the LTI group has taken first place in more than one BioASQ task.

"I decided to participate in BioASQ two years ago because it focuses on one of the most important but challenging domains — biomedicine — and includes many teams from the world's top universities and institutes," Yang said. "I was honored to represent the team to present our winning system at the workshop, meet our competitors, openly share our experiences and discuss the technical difficulties that still remain."

The CMU team believes that informatics challenges like BioASQ are best met through careful design of a flexible and extensible architecture, coupled with continuous, incremental experimentation and optimization over various combinations of existing state-of-the-art components, rather than by relying on a single "magic" component or a single combination of components. This year, the development set grew to 1,307 labeled questions (up from 810 in last year's dataset), which allowed the team to further explore supervised learning methods and the effectiveness of multiple biomedical NLP tools in various phases of the system.

"By adopting a multistrategy, service-oriented design and using the ECD and CSE frameworks developed by the Open Advancement of Question Answering team, Zi and Yue were able to explore a large space of possible solutions to find an optimal ensemble of components, given a QA development dataset for machine learning," Nyberg said.
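To make the idea of exploring a space of component combinations against a labeled development set more concrete, here is a minimal sketch in Python. It is not the ECD or CSE framework and not the team's system: the two phases, the toy components (`identity`, `lowercase`, `first_word`, `last_word`), the tiny `DEV_SET` and the exact-match accuracy metric are all hypothetical stand-ins. It also uses a plain exhaustive grid search, which is a simplification that only works for very small spaces.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

# Hypothetical toy components: each maps a text string to a new string.
# They stand in for real biomedical NLP tools and are NOT the CMU team's components.
Component = Callable[[str], str]


def identity(text: str) -> str:
    return text


def lowercase(text: str) -> str:
    return text.lower()


def first_word(text: str) -> str:
    words = text.split()
    return words[0] if words else ""


def last_word(text: str) -> str:
    words = text.split()
    return words[-1] if words else ""


# Hypothetical pipeline: an ordered set of phases, each with candidate components.
PHASES: Dict[str, Dict[str, Component]] = {
    "normalize": {"identity": identity, "lowercase": lowercase},
    "extract": {"first_word": first_word, "last_word": last_word},
}

# Hypothetical labeled development set of (question, gold answer) pairs.
DEV_SET: List[Tuple[str, str]] = [
    ("Name the gene: CFTR", "cftr"),
    ("Name the protein: Insulin", "insulin"),
]


def run_pipeline(config: Tuple[str, ...], question: str) -> str:
    """Apply the chosen component from each phase, in phase order."""
    text = question
    for phase_name, component_name in zip(PHASES, config):
        text = PHASES[phase_name][component_name](text)
    return text


def accuracy(config: Tuple[str, ...], dev_set: List[Tuple[str, str]]) -> float:
    """Fraction of dev questions whose pipeline output exactly matches the gold answer."""
    correct = sum(run_pipeline(config, q) == gold for q, gold in dev_set)
    return correct / len(dev_set)


def explore(dev_set: List[Tuple[str, str]]) -> List[Tuple[float, Tuple[str, ...]]]:
    """Score every combination of components (exhaustive grid) and rank best-first."""
    scored = [
        (accuracy(config, dev_set), config)
        for config in product(*(PHASES[p].keys() for p in PHASES))
    ]
    # Highest score first; ties broken by configuration name for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return scored


if __name__ == "__main__":
    best_score, best_config = explore(DEV_SET)[0]
    print(best_config, best_score)  # prints: ('lowercase', 'last_word') 1.0
```

Each phase can be extended with more candidate components without changing the search code, which loosely mirrors the "flexible and extensible architecture" described above; a realistic system would need a far larger dev set and a smarter search strategy than enumerating every combination.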

More information, including technical details and program downloads, is available on GitHub. The work is part of CMU's Open Advancement of Question Answering initiative and was supported in part by grants from the Hoffmann-La Roche Innovation Center (New York).

Byron Spice | 412-268-9068 | bspice@cs.cmu.edu