Research Highlight: Enabling Robot Interaction With Articulated Objects

Aaron Aupperlee | Wednesday, October 19, 2022

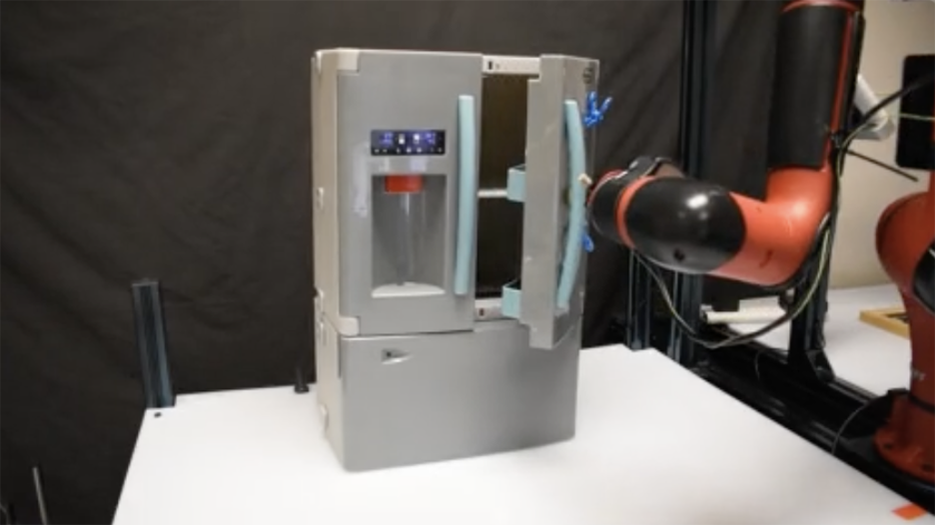

Research from Carnegie Mellon University's Robotics Institute could one day allow robots to seamlessly open drawers, doors and lids on hinges. While humans interact with various articulated objects daily — opening a refrigerator door or lifting a toilet seat are good examples — these tasks present a challenge in robotics.

Ben Eisner and Harry Zhang, both graduate students in Assistant Professor David Held's Robots Perceiving and Doing Lab, designed a new way to train robots to perceive and manipulate articulated objects in their project, "FlowBot3D: Learning 3D Articulation Flow To Manipulate Articulated Objects." The team presented their research at Robotics: Science and Systems in 2022, where it was a finalist for a best paper award.

FlowBot3D uses a vision-based system to help robots learn how to interact with many different kinds of articulated objects. The system learns to predict the motions an object's articulated parts could make and uses that information to guide the robot's downstream motion planning. To predict the motions, the team trained a network to output a dense vector field showing the potential movement of the articulated object. The system then used an analytical motion planning policy based on this vector field to achieve a grasp that yielded maximum articulation. The team trained the vision system in simulation and then demonstrated its capability to generalize on a Sawyer robot without retraining.
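To make the idea of planning from a dense vector field concrete, here is a minimal sketch in plain Python. It is not the team's code. It assumes the network's output can be represented as one 3D motion vector per observed point, and it assumes that "maximum articulation" can be approximated by choosing the point whose predicted motion is largest. The function name `select_grasp` and the toy data are hypothetical.

```python
import math

def select_grasp(points, flows):
    """Pick a contact point and pull direction from a per-point flow field.

    points: list of (x, y, z) positions observed on the object.
    flows:  list of (dx, dy, dz) predicted motion vectors, one per point.
    Assumption for illustration: the best contact is the point with the
    largest predicted motion, and the pull direction follows that vector.
    """
    if not points or len(points) != len(flows):
        raise ValueError("points and flows must be non-empty and equal in length")

    # Length of each predicted motion vector.
    magnitudes = [math.sqrt(dx * dx + dy * dy + dz * dz) for dx, dy, dz in flows]

    # Index of the point predicted to move the most.
    best = max(range(len(points)), key=magnitudes.__getitem__)
    if magnitudes[best] == 0.0:
        raise ValueError("all predicted flow vectors are zero")

    # Normalize the chosen vector to get a unit pull direction.
    direction = tuple(c / magnitudes[best] for c in flows[best])
    return points[best], direction

# Toy door: the hinge is at x = 0, so points farther along x move more.
points = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
flows = [(0.0, 0.0, 0.0), (0.0, 0.25, 0.0), (0.0, 0.5, 0.0)]
contact, direction = select_grasp(points, flows)
```

In this toy case the sketch selects the point farthest from the hinge, which matches the everyday intuition that a door is easiest to move when pushed at its outer edge. A real system would also need to re-predict the field as the object moves and to check that the chosen point is reachable and graspable.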

Learn more on the FlowBot3D website.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu