Generative Modeling Tool Renders 2D Sketches in 3D

Jamie Martines | Wednesday, April 5, 2023

A machine learning tool developed by researchers at Carnegie Mellon University's Robotics Institute (RI) could potentially allow beginner and professional designers to create 3D virtual models of everything from customized household furniture to video game content.

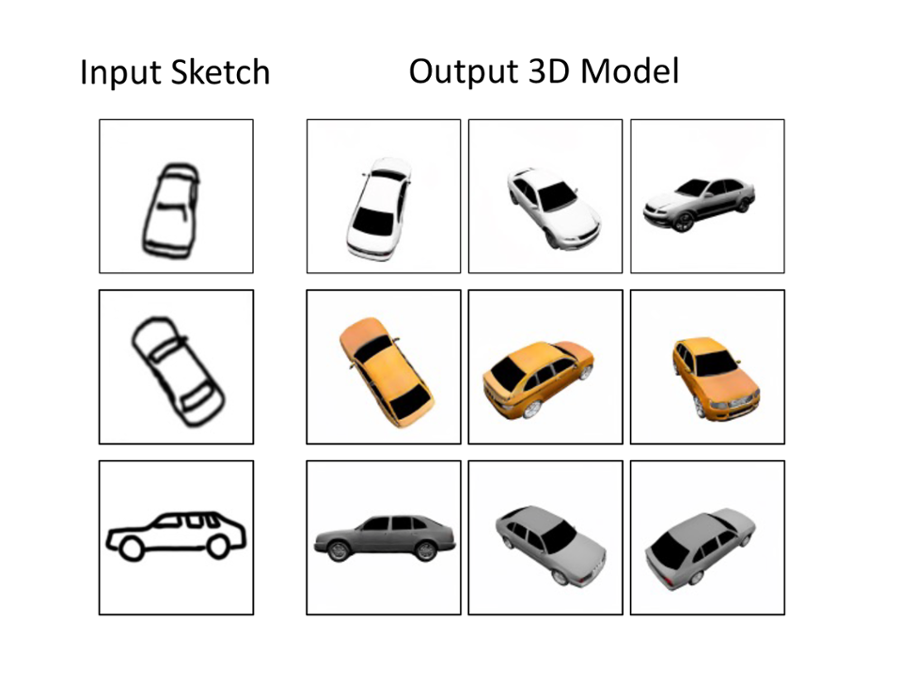

Pix2pix3d allows anyone to create a realistic, 3D representation of a 2D sketch using generative artificial intelligence tools similar to those powering popular AI photo generation and editing applications.

"Our research goal is to make content creation accessible to more people through the power of machine learning and data-driven approaches," said Jun-Yan Zhu, an assistant professor in the School of Computer Science and a member of the pix2pix3d team.

Unlike other tools capable of creating two-dimensional images, pix2pix3d is a 3D-aware conditional generative model that allows a user to input a two-dimensional sketch or more detailed information from label maps, such as a segmentation or edge map. Pix2pix3d then synthesizes a 3D-volumetric representation of geometry, appearance and labels that can be rendered from multiple viewpoints to create a realistic, three-dimensional image resembling a photograph.
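To give a rough sense of what rendering a volumetric representation from a viewpoint can involve, the sketch below alpha-composites density, color and label values sampled along a single camera ray into one pixel. It is an illustration only, not the team's code: the article does not describe pix2pix3d's renderer, and the function name `composite_ray`, its parameters and the uniform sample spacing are all assumptions made for this example.

```python
import math


def composite_ray(densities, colors, label_scores, step):
    """Alpha-composite samples along one camera ray, ordered front to back.

    Hypothetical helper for illustration; not part of pix2pix3d.
    densities:    non-negative floats, one per sample
    colors:       (r, g, b) tuples, one per sample
    label_scores: per-class score lists, one per sample
    step:         assumed uniform distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel_color = [0.0, 0.0, 0.0]
    pixel_label = [0.0] * len(label_scores[0])

    for sigma, rgb, scores in zip(densities, colors, label_scores):
        # Opacity contributed by this sample over one step of the ray.
        alpha = 1.0 - math.exp(-sigma * step)
        weight = transmittance * alpha
        # Color and label scores share the same weights, so the rendered
        # image and the rendered label map stay aligned.
        pixel_color = [c + weight * v for c, v in zip(pixel_color, rgb)]
        pixel_label = [l + weight * s for l, s in zip(pixel_label, scores)]
        transmittance *= 1.0 - alpha

    opacity = 1.0 - transmittance
    return pixel_color, pixel_label, opacity
```

Repeating this for every pixel of a virtual camera, and then moving the camera, is one way a single 3D representation can yield both images and label maps that agree with each other across viewpoints.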

"As long as you can draw a sketch, you can make your own customized 3D model," said RI doctoral candidate Kangle Deng, who was part of the research team with Zhu, Professor Deva Ramanan and Ph.D. student Gengshan Yang.

Pix2pix3d has been trained on data sets including cars, cats and human faces, and the team is working to expand those capabilities. In the future, it could be used to design consumer products, for example by letting people customize furniture for their homes. Both novice and professional designers could use it to customize items in virtual reality environments or video games, or to add effects to films.

Once pix2pix3d generates a 3D image, the user can modify it in real time by erasing and redrawing the original two-dimensional sketch. This feature gives the user more freedom to customize and refine the image without having to rerender the entire project. Changes are reflected in the 3D model and are accurate from multiple viewpoints.

Its interactive editing feature sets pix2pix3d apart from other modeling tools since users can make adjustments quickly and efficiently. This capability could be especially helpful in fields like manufacturing because users can easily design, test and adjust a product. For example, if a designer inputs a sketch of a car with a square hood, the model will provide a 3D rendering of such a car. If the designer erases that part of the sketch and replaces the square hood with a round one, the 3D model immediately updates. The team plans to continue refining and improving this feature in the future.

Deng noted that even the least artistic user will achieve a satisfactory result. The model can generate an accurate output from a simple or rough sketch. When provided with more precise and detailed data from segmentation or edge maps, the model can create a highly sophisticated 3D image.

"Our model is robust to user errors," Deng said, adding that even a 2D sketch loosely resembling a cat will generate a 3D image of a cat.

The team's research, "3D-Aware Conditional Image Synthesis," has been accepted to the 2023 IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR). More information is available on the project's website.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu