Teaching AI Agents To Navigate Ambiguous Instructions

Charlotte HuThursday, August 29, 2024Print this page.

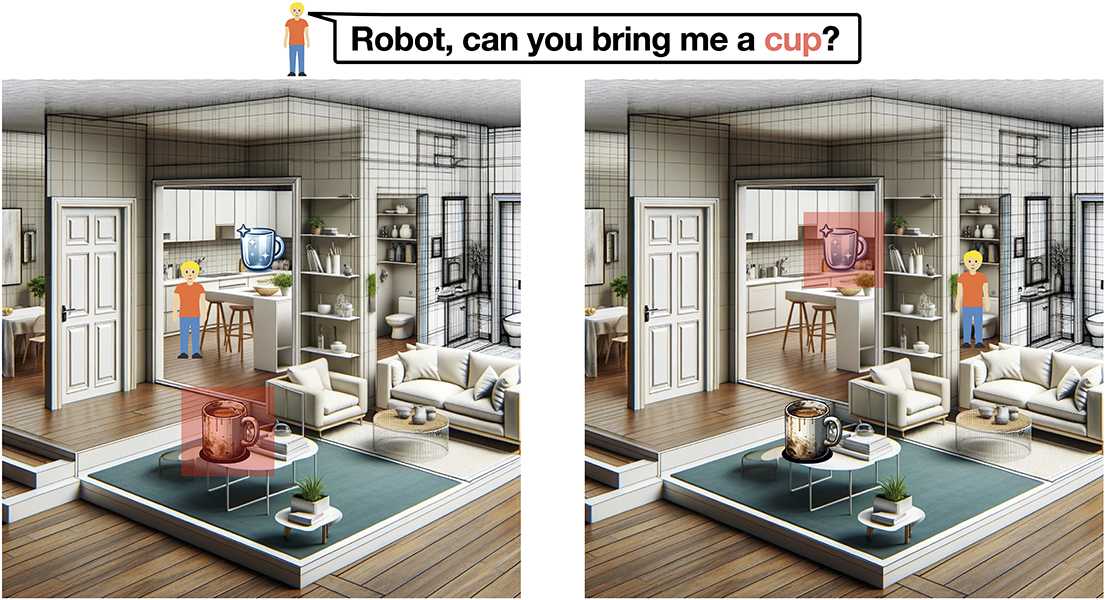

People can be relatively vague when they're giving instructions to one another because they pick up context from their shared environment or situation. For example, if one person is washing the dishes and asks someone to hand them a cup, it most likely means a nearby dirty cup rather than a clean one from inside the cabinet.

But with robots and artificially intelligent agents, people can't afford to take such shortcuts with language. Tiffany So Yeon Min, a Ph.D. student in the Machine Learning Department (MLD) at Carnegie Mellon University's School of Computer Science (SCS), wants to teach robots and AI agents how to operate in response to vague directions. She worked with a research team from Meta AI, led by Roozbeh Mottaghi, to leverage large language models (LLMs) and develop a dataset and models for AI agents designed to assist humans. The project received guidance and supervision from SCS faculty members Ruslan Salakhutdinov, a professor in MLD, and Yonatan Bisk, an assistant professor in both the Language Technologies Institute and the Robotics Institute.

"If we look at existing robot instruction-following tests, it's a spectrum in terms of how overspecified or ambiguous they are," Min said. "If we actually want to use these robot assistants, they have to understand language somewhere in the middle of the spectrum. That's what we tried to create in this situation-based, instruction-following dataset."

To create the dataset and models, Min used a premade virtual house that came with both a simulated human and robot. She sampled points from different places across the virtual house and used them to represent human movement from one point to another. She then created 480 different scenarios related to where the human was, what the human was doing and what its intentions were. An LLM then translated those scenarios into contextual information to guide a robot on what to look for, specific actions to take or how to identify objects of interest. By observing where the human is and what the human is doing in combination with what they're saying, the robot can then take appropriate action in response to fairly ambiguous instructions.

For example, if the human is walking and tells the robot "Bring me the basket. I'll be washing my face," the robot can first grab the basket and then analyze if there is a specific bathroom the human is referring to based on its mental map of the house. If the house has multiple bathrooms and the robot can't accurately predict which one the human is referring to, it can then take actions like following the human to resolve the uncertainty.

"A lot of existing research on robot planning or large language models has been on understanding the situation with perception and then trying to map this perception to some more direct actions like grabbing something or moving forward," Min said. "This work is closer to how language instructions are given in the real world."

The next step, she imagines, are AI agents that could identify the limitations of human instructions and learn to ask follow-up questions for clarification or more information.

The research team will present their work at the European Conference of Computer Vision, Sept. 29–Oct. 4 in Milan. Learn more about their work in their new paper.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu