Wehbe, Tarr Labs Publish Paper in Nature Machine Intelligence

Adam Kohlhaas | Monday, January 22, 2024

Language has a profound effect on how humans see the world, and researchers have long used visual data and language data separately to simulate how the brain learns. In a new paper published in Nature Machine Intelligence, researchers in the Machine Learning Department (MLD) have combined visual and language information to predict brain responses to real-world images through multimodal models like contrastive language-image pretraining (CLIP).

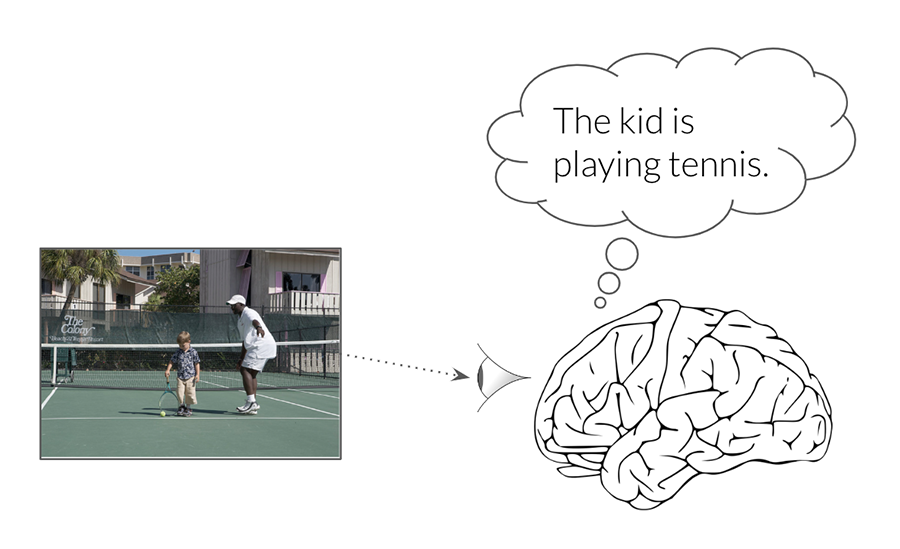

CLIP can take a large sample of images of a subject and match them with a large sample of semantic descriptions of the same subject. For example, it can match images of an apple with written descriptions of the apple's shape or color. Predictive models that use visual or semantic information have long been used to understand how the brain processes either modality, but combining them to understand how visual processing in the brain leverages semantic information is a relatively new endeavor. The researchers in MLD, in collaboration with researchers at the University of Minnesota, have developed a model that bridges the gap between the two.
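The image-to-description matching described above can be illustrated with a toy sketch. This is not the researchers' code and it does not call CLIP: the three-number "embeddings" below are invented for illustration, and the names `cosine_similarity` and `best_caption` are hypothetical helpers. The sketch assumes only that images and descriptions are each represented as vectors in a shared space, and that a match is the pair of vectors pointing in the most similar direction.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec, caption_vecs):
    """Return the caption whose vector is most similar to the image vector."""
    return max(
        caption_vecs,
        key=lambda caption: cosine_similarity(image_vec, caption_vecs[caption]),
    )


# Made-up vectors standing in for model outputs (assumption, not real data).
image_embeddings = {
    "apple_photo": [0.9, 0.1, 0.2],
    "banana_photo": [0.1, 0.9, 0.3],
}
caption_embeddings = {
    "a round red fruit": [0.8, 0.2, 0.1],
    "a long yellow fruit": [0.2, 0.8, 0.2],
}

# Pair each image with its closest description.
matches = {
    name: best_caption(vec, caption_embeddings)
    for name, vec in image_embeddings.items()
}
print(matches)
```

In this toy setup the apple photo pairs with the "round red" description and the banana photo with the "long yellow" one, because those vectors point in nearly the same direction. A real multimodal model would learn such vectors from many image-description pairs rather than having them written by hand.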

"Our results support the theory that, beyond object identity, human high-level visual representations reflect semantics and the relational structure of the visual world," said Aria Wang, who recently earned a joint Ph.D. in machine learning and neural computation at CMU and was the paper's first author.

This synergy between visual and semantic information is believed to be a key component in how the brain functions. Though many other researchers have begun using multimodal models for brain prediction, this paper is one of the first to apply them to the study of the brain. It could serve as a detailed guide on where and how CLIP models provide superior explanations for brain data, and could lead to a better understanding of the brain.

Read more about the research in the paper, "Joint Natural Language and Image Pretraining Builds Better Models of Human Higher Visual Cortex."

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu