Next: Proof of Theorem 3

Up: Appendix A

Previous: Appendix A

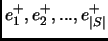

Since the hardness

results of Theorems 1 and 3 are stated for the two-classes case, we shall use the notation

![$\Delta_{(i)}=\vec{v}_{(i)}[1] - \vec{v}_{(i)}[0]$](img422.png) for some arbitrary rule

for some arbitrary rule

, where

, where

![$\vec{v}_{(i)}[0]$](img424.png) is the value for class

``-'' and

is the value for class

``-'' and

![$\vec{v}_{(i)}[1]$](img425.png) is the value for class ``+''. A positive value for

is the value for class ``+''. A positive value for

means that

means that  is in favor of class ``+'' whereas a negative value

gives a

is in favor of class ``+'' whereas a negative value

gives a  in favor of class ``-''. Value

in favor of class ``-''. Value  for

for  gives a

gives a  neutral with respect

to the classes. We use a reduction from the

neutral with respect

to the classes. We use a reduction from the  -Hard problem ``Minimum Cover'' [Garey Johnson1979]:

-Hard problem ``Minimum Cover'' [Garey Johnson1979]:

- Name : ``Minimum Cover''.

- Instance : A collection

of subsets of a finite set

of subsets of a finite set  . A positive integer

. A positive integer  ,

,  .

.

- Question : Does

contain a cover of size at most

contain a cover of size at most  , that is, a subset

, that is, a subset

with

with  , such that any element of

, such that any element of  belongs to at least one member of

belongs to at least one member of  ?

?

The reduction is constructed as follows : from a ``Minimum Cover'' instance we build a learning sample  such that if there exists a cover of size

such that if there exists a cover of size  of

of  , then there exists a decision committee with

, then there exists a decision committee with  literals consistent with

literals consistent with  , and, reciprocally, if there exists a decision committee with

, and, reciprocally, if there exists a decision committee with  literals consistent with

literals consistent with  , then there exists a cover of size

, then there exists a cover of size  of

of  . Hence, finding the smallest decision committee consistent with

. Hence, finding the smallest decision committee consistent with  is equivalent to finding the smallest

is equivalent to finding the smallest  for which there exists a solution to ``Minimum Cover'', and this is intractable if

for which there exists a solution to ``Minimum Cover'', and this is intractable if  .

.

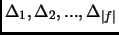

Let  denote the

denote the  element of

element of  , and

, and  the

the  element

of

element

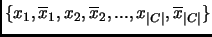

of  . We define a set of

. We define a set of  Boolean variables in one to one correspondence with the elements of

Boolean variables in one to one correspondence with the elements of  , which we

use to describe the examples of

, which we

use to describe the examples of  . The corresponding set of literals is

denoted

. The corresponding set of literals is

denoted

. The sample

. The sample  contains two

disjoint subsets : the set of positive examples

contains two

disjoint subsets : the set of positive examples  , and the set of

negative ones

, and the set of

negative ones  .

.  contains

contains  examples, denoted by

examples, denoted by

. We construct each positive example so that it encodes

the membership of the corresponding element of

. We construct each positive example so that it encodes

the membership of the corresponding element of  in the elements of

in the elements of  . More precisely,

. More precisely,

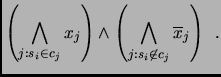

contains a single negative example, defined by:

contains a single negative example, defined by:

Suppose there exists a cover

Suppose there exists a cover  of

of  satisfying

satisfying

. We create a decision committee consisting of

. We create a decision committee consisting of  monomials, each

with one literal only and associated to a positive

monomials, each

with one literal only and associated to a positive  . Each monomial

codes one of the sets in

. Each monomial

codes one of the sets in  . The default class is ``-''. This decision committee is consistent with the examples of

. The default class is ``-''. This decision committee is consistent with the examples of

, otherwise some element of

, otherwise some element of  would not be covered. If there are only two values authorized for the vectors and they are

would not be covered. If there are only two values authorized for the vectors and they are  , we simply create a DC consisting of one monomial with negative literals associated to a negative

, we simply create a DC consisting of one monomial with negative literals associated to a negative  (the value for the negative class is greater than the one of the positive class); each of the negative literals codes one of the sets in

(the value for the negative class is greater than the one of the positive class); each of the negative literals codes one of the sets in  . The default class is ``+''.

. The default class is ``+''.

Suppose now that there exists a decision committee

Suppose now that there exists a decision committee  with at most

with at most  literals consistent with

literals consistent with  . Denote

. Denote

each monomial of

each monomial of  , in no specific order, and

, in no specific order, and

their associated values for

their associated values for  . The monomials of

. The monomials of  can belong to three types of subsets of monomials:

can belong to three types of subsets of monomials:

- monotonous monomials (without negative literals),

- monomials containing only negative literals,

- monomials containing positive and negative literals.

Let us call respectively  ,

,  ,

,  these three classes. Given that each monomial of

these three classes. Given that each monomial of  can be associated to a positive or a negative

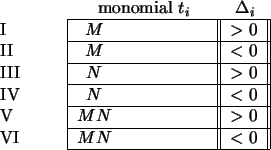

can be associated to a positive or a negative  , there exists on the whole six classes of rules, presented in Figure 5.

, there exists on the whole six classes of rules, presented in Figure 5.

Figure 5:

The six possible cases of rules.

|

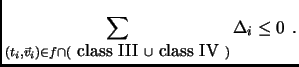

Any monomial of  containing at least one positive literal can only be satisfied by positive examples. Therefore, if there exists rules belonging to class II or VI, we can remove them without losing consistency. Furthermore, since

containing at least one positive literal can only be satisfied by positive examples. Therefore, if there exists rules belonging to class II or VI, we can remove them without losing consistency. Furthermore, since  contains only negative literals, if we remove their negative literals from all rules belonging to class V (making them go to class I), we do not lose consistency. As a consequence, we can suppose without loss of generality that all rules of

contains only negative literals, if we remove their negative literals from all rules belonging to class V (making them go to class I), we do not lose consistency. As a consequence, we can suppose without loss of generality that all rules of  are in class I, III, or IV.

are in class I, III, or IV.

We now treat independently two cases, depending on whether the default class of  is ``+'' or ``-''.

is ``+'' or ``-''.

- The default class is ``-''. Any positive example satisfies therefore a monomial in

. There can exist two types of positive examples: those satisfying at least one rule of class I, and those not satisfying any class I rule (therefore satisfying at least one rule of class III).

. There can exist two types of positive examples: those satisfying at least one rule of class I, and those not satisfying any class I rule (therefore satisfying at least one rule of class III).  satisfies all class III and IV rules. Therefore,

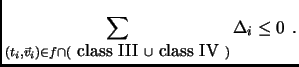

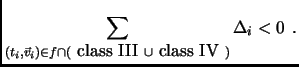

satisfies all class III and IV rules. Therefore,

|

|

|

(11) |

This shows that, if a positive example not satisfying any class I rules would satisfy all class IV rules, then it would be misclassified, which is impossible by the consistency hypothesis. This gives an important property, namely that any positive example not satisfying any class I rule cannot satisfy all class IV rules. Let us call P this property in what follows. We now show how to build a valid solution to ``Minimum Cover'' with at most  elements. For any positive example

elements. For any positive example  ,

,

- if

satisfies at least one class I rule, choose in

satisfies at least one class I rule, choose in  a subset of

a subset of  corresponding to a positive literal of some satisfied class I rule. This subset contains

corresponding to a positive literal of some satisfied class I rule. This subset contains  .

.

- if

does not satisfy any class I rule, there exists from P some class IV rule which is not satisfied. Among all negative literals of a class IV rule which is not satisfied by

does not satisfy any class I rule, there exists from P some class IV rule which is not satisfied. Among all negative literals of a class IV rule which is not satisfied by  , choose one which is positive in

, choose one which is positive in  (causing it not to satisfy the rule), and then choose the corresponding element of

(causing it not to satisfy the rule), and then choose the corresponding element of  . This subset of

. This subset of  contains

contains  .

.

Iterating the above procedure for all positive examples, we obtain a cover of  consisting of at most

consisting of at most  subsets of

subsets of  .

.

- The default class is ``+''.

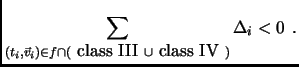

satisfies all class III and IV rules. Therefore,

satisfies all class III and IV rules. Therefore,

|

|

|

(12) |

Even if the inequality is now strict, it gives the same procedure for efficiently building the solution to ``Minimum Cover'' with at most  elements, by using the same argument as in the preceeding case.

elements, by using the same argument as in the preceeding case.

This ends the proof of Theorem 1.

Next: Proof of Theorem 3

Up: Appendix A

Previous: Appendix A

©2002 AI Access Foundation and Morgan Kaufmann Publishers. All rights reserved.

![]() for some arbitrary rule

for some arbitrary rule

![]() , where

, where

![]() is the value for class

``-'' and

is the value for class

``-'' and

![]() is the value for class ``+''. A positive value for

is the value for class ``+''. A positive value for

![]() means that

means that ![]() is in favor of class ``+'' whereas a negative value

gives a

is in favor of class ``+'' whereas a negative value

gives a ![]() in favor of class ``-''. Value

in favor of class ``-''. Value ![]() for

for ![]() gives a

gives a ![]() neutral with respect

to the classes. We use a reduction from the

neutral with respect

to the classes. We use a reduction from the ![]() -Hard problem ``Minimum Cover'' [Garey Johnson1979]:

-Hard problem ``Minimum Cover'' [Garey Johnson1979]: