|

We implemented a classical Blocks World domain with the two operators in Figure 2. This domain has two actions: stack that puts one block on top of another, and, unstack that places a block on the table to start a new tower. Plan quality in this domain is simply the number of steps. Optimal planning in this domain is NP-hard [38]. However, it is trivial to generate a correct, but suboptimal, plan in linear time using the naive algorithm: put all blocks on the table and build the desired towers from the bottom up. We compare three planners on this domain:

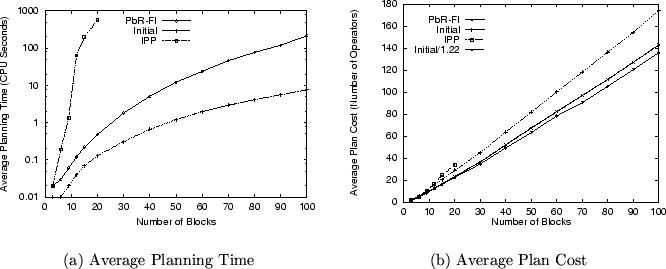

We generated random Blocks World problems scaling the number of blocks. The problem set consists of 25 random problems at 3, 6, 9, 12, 15, 20, 30, 40, 50, 60, 70, 80, 90, and 100 blocks for a total of 350 problems. The problems may have multiple towers in the initial state and in the goal state.

Figure 26(a) shows the average planning time of the 25 problems for each block quantity. IPP cannot solve problems with more than 20 blocks within the time limit of 1000 CPU seconds. The problem solving behavior of IPP was interesting. IPP either solved a given problem very fast or it timed out. For example, it was able to solve 11 out of the 25 20-block problems under 100 seconds, but it timed out at 1000 seconds for the remaining 14 problems. This seems to be the typical behavior of complete search algorithms [37]. The local search of PbR allows it to scale much better and solve all the problems.

Figure 26(b) shows the average plan cost as the number of blocks increases. PbR improves considerably the quality of the initial plans. The optimal quality is only known for very small problems, where PbR approximates it, but does not achieve it (we ran Sage for problems of less than 9 blocks). For larger plans we do not know the optimal cost. However, Slaney & Thiébaux [78] performed an extensive experimental analysis of Blocks World planning using a domain like ours. In their comparison among different approximation algorithms they found that our initial plan generator (unstack-stack) achieves empirically a quality around 1.22 the optimal for the range of problem sizes we have analyzed (Figure 7 in [78]). The value of our average initial plans divided by 1.22 suggests the quality of the optimal plans. The quality achieved by PbR is comparable with that value. In fact it is slightly better which may be due to the relatively small number of problems tested (25 per block size) or to skew in our random problem generator. Interestingly the plans found by IPP are actually of low quality. This is due to the fact that IPP produces shortest parallel plans. That means that the plans can be constructed in the fewest time steps, but IPP may introduce more actions in each time step than are required.

In summary, the experiments in this and the previous sections show that across a variety of domains PbR scales to large problems while still producing high-quality plans.