We have tested our automatic modeling algorithms on a large database of real and synthetic objects. We have also demonstrated their performance over a wide range of scales, sensors, and scene types. Finally, we have shown that automatic modeling produces accurate 3D models, limited mainly by the quality of the input data.

Here are some examples of automatically created models. The objects shown here were scanned with a Minolta Vivid 700 laser scanner, which also records a registered color image of each 3D view. For each object, the left-hand column shows the color images from two input views, and the right-hand column shows a rendering of the automatically constructed 3D model from approximately the same viewpoint. The VRML models are in VRML 1.0 format and can be viewed with a VRML viewer such as Cosmo Player.

The models have been simplified to between 2,000 and 5,000 triangles to keep the file sizes small. For data sets of about 20 views, the automatic modeling process takes about 25 minutes: 5 minutes to collect the data and 20 minutes to create the model.

|

|

|

photograph of object

|

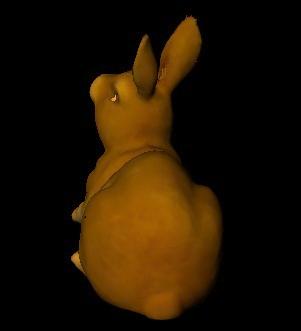

automatically created model

|

|

|

|

|

|

|

|

|

|

|

photograph of object

|

automatically created model

|

|

|

|

|

|

|

|

Dwarf - 25 views - vrml model

small (680

KB), large

(1.7 MB)

|

|

photograph of object

|

automatically created model

|

|

|

|

|

|

|

|

|

|

|

photograph of object

|

automatically created model

|

|

|

|

|

|

|

|

Some artifacts can be seen in the 3D models:

- A small ridge appears under the rabbit's chin. This is caused by a depth anomaly in the Vivid scanner that sometimes occurs when objects with horizontal concave ridges are scanned. Most scanners do not have this problem.

- Some of the models contain holes, which appear for several reasons. First, part of the object may not have been seen from any viewpoint. This problem can usually be solved by taking additional data. Second, laser scanners have difficulty with very dark surfaces and with very shiny surfaces because the laser return is not strong enough. This can be seen in the eyes of the four figures. Finally, some laser scanners have problems near occlusion boundaries (i.e., discontinuous jumps in the range), causing the range (and the resulting 3D mesh) to be inaccurate. This problem has not been studied; to eliminate the bad data, I remove the boundary vertices of each view in a pre-processing step. The input range images tend to have numerous small holes, which are enlarged by this filtering process.

- The texturing of the models is not perfect. This is due to the low-quality input images and to violations of the assumptions of the algorithm I use for creating the texture maps (the algorithm assumes Lambertian surfaces and no shadows).
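The boundary-vertex filtering mentioned in the second item can be illustrated with a minimal sketch. This is not the code used to build the models above; it assumes each view is a triangle mesh stored as a list of vertex-index triples, and it treats an edge that belongs to exactly one triangle as a boundary edge. The function names and the `grid_mesh` test helper are hypothetical and exist only for this illustration.

```python
from collections import Counter


def boundary_vertices(triangles):
    """Return the vertex indices that lie on an open (boundary) edge.

    Assumption: an edge used by exactly one triangle is a boundary edge.
    """
    edge_count = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so (u, v) == (v, u).
            edge_count[(min(u, v), max(u, v))] += 1
    return {v for edge, n in edge_count.items() if n == 1 for v in edge}


def remove_boundary_vertices(triangles):
    """Drop every triangle that touches a boundary vertex."""
    boundary = boundary_vertices(triangles)
    return [t for t in triangles if not any(v in boundary for v in t)]


def grid_mesh(rows, cols):
    """Hypothetical helper: triangulate a rows x cols grid of vertices."""
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            v00 = r * cols + c
            v01, v10, v11 = v00 + 1, v00 + cols, v00 + cols + 1
            triangles.append((v00, v01, v11))
            triangles.append((v00, v11, v10))
    return triangles


if __name__ == "__main__":
    mesh = grid_mesh(4, 4)
    filtered = remove_boundary_vertices(mesh)
    print(len(mesh), "triangles before,", len(filtered), "after")
```

Because the rim of a hole is also a mesh boundary, the same pass strips one ring of triangles around every hole, which is consistent with the observation that small holes in the input range images are enlarged by the filtering.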
